https://deepwiki.com/

Turn code repositories into structured, searchable documentation with AI diagrams, conversational search, and enterprise-grade security controls.

DeepWiki transforms code repositories into structured, interactive documentation that both developers and AI agents can navigate. It integrates with platforms like GitHub and GitLab to generate architecture diagrams, module descriptions, and a conversational search assistant built on Retrieval-Augmented Generation (RAG). With over 30,000 repositories indexed and support for 48+ programming languages, DeepWiki speeds up everyday workflows, shortens onboarding, and gives AI-driven automation the codebase context it needs.

Key Highlights:

- Automated Code Documentation: Converts repositories into searchable, structured knowledge hubs.

- AI Integration: Makes this documentation available to AI agents such as GPT-4 through the Model Context Protocol (MCP).

- Efficiency Gains: Cuts .NET migration timelines from months to weeks.

- Customization: A .devin/wiki.json file tailors documentation to business priorities.

- Security: SOC 2 and ISO 27001 certified, with fine-grained permission controls.

DeepWiki helps enterprises save time, reduce costs, and scale AI solutions by providing instant access to well-organized technical knowledge.

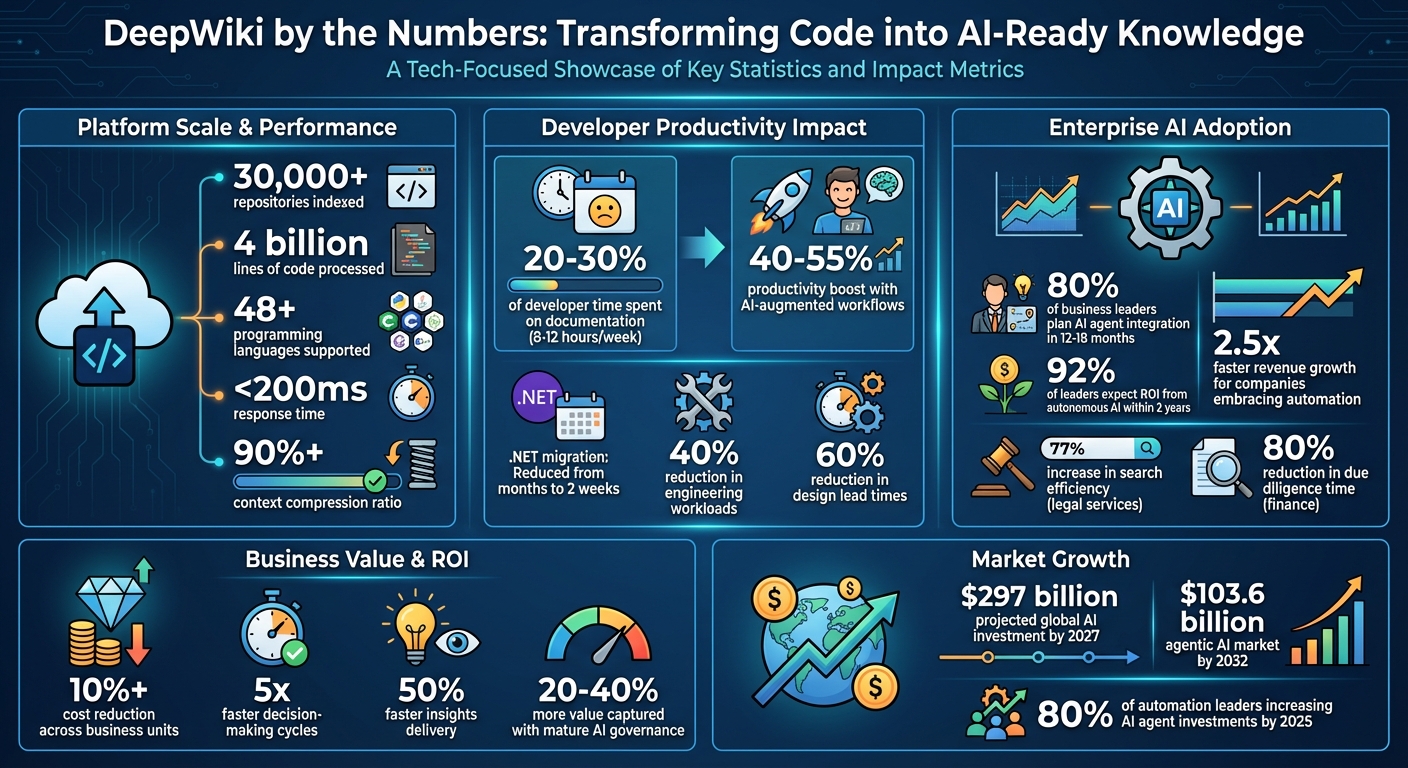

DeepWiki Enterprise AI Impact: Key Statistics and Performance Metrics

DeepWiki's Core Functions and Benefits

What is DeepWiki and How Does It Work?

DeepWiki is an AI-powered documentation engine that turns GitHub repositories into structured, searchable knowledge hubs. By analyzing code, configuration files, and metadata, it automatically generates detailed documentation, including architecture diagrams, module descriptions, and dependency graphs. Unlike traditional documentation tools that depend on manual updates, DeepWiki uses Retrieval-Augmented Generation (RAG) in its conversational assistant: relevant code and documentation are retrieved first, and the answer to a technical question is generated from that context.
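To illustrate the retrieve-then-generate pattern behind a RAG assistant, here is a minimal Python sketch. It is not DeepWiki's implementation: simple word overlap stands in for a real embedding model, the documentation chunks and file paths are hypothetical, and the final call to a language model is left out. What it shows is how retrieved passages, tagged with their source paths, are placed ahead of the question so that an answer can be checked against the code it cites.

```python
import re
from collections import Counter

# Hypothetical documentation chunks keyed by source path.
CHUNKS = {
    "auth/session.py": "Session tokens are issued by create_session and expire after 30 minutes.",
    "billing/invoice.py": "Invoices are generated monthly by build_invoice from usage records.",
    "auth/oauth.py": "OAuth login redirects to the provider and exchanges the code for a token.",
}

def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a crude stand-in for an embedding model."""
    return re.findall(r"[a-z0-9_]+", text.lower())

def retrieve(question: str, chunks: dict[str, str], k: int = 2) -> list[str]:
    """Return the k source paths whose text shares the most words with the question."""
    q = Counter(tokenize(question))
    scores = {
        source: sum(min(q[word], n) for word, n in Counter(tokenize(text)).items())
        for source, text in chunks.items()
    }
    return sorted(scores, key=scores.get, reverse=True)[:k]

def build_prompt(question: str, chunks: dict[str, str], k: int = 2) -> str:
    """Place retrieved passages, tagged with their sources, ahead of the question."""
    context = "\n".join(f"[{s}] {chunks[s]}" for s in retrieve(question, chunks, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

print(build_prompt("When do session tokens expire?", CHUNKS))
```

Keeping the source path next to each passage is what lets a reader, or a reviewer, trace an answer back to the file it came from.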

DeepWiki has processed over 4 billion lines of code from more than 30,000 repositories, with response times under 200 ms. It supports 48+ programming languages and renders interactive Mermaid diagrams that make complex code structures easier to understand. For teams with unique documentation needs, a customizable .devin/wiki.json file lets them prioritize folders, define page hierarchies, and make sure critical components are documented thoroughly.
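Mermaid diagrams are plain text, which is what makes them easy to generate from code. The sketch below assumes a hypothetical module-to-dependencies map (DeepWiki's own dependency extraction is not shown) and emits a top-down Mermaid flowchart. Module paths are turned into Mermaid-safe node IDs by replacing non-word characters with underscores, and modules with no outgoing edges are still emitted as standalone nodes.

```python
import re

def node_id(name: str) -> str:
    """Turn a module path such as 'src/api' into a Mermaid-safe node ID."""
    return re.sub(r"\W", "_", name)

def to_mermaid(deps: dict[str, list[str]]) -> str:
    """Render a module -> dependencies map as a top-down Mermaid flowchart."""
    lines = ["graph TD"]
    for module in sorted(deps):
        if not deps[module]:
            lines.append(f"    {node_id(module)}")  # standalone node with no edges
        for dep in sorted(deps[module]):
            lines.append(f"    {node_id(module)} --> {node_id(dep)}")
    return "\n".join(lines)

# Hypothetical dependency map for illustration only.
DEPS = {"src/api": ["src/auth", "src/db"], "src/auth": ["src/db"], "src/db": []}
print(to_mermaid(DEPS))
```

Pasting the printed text into any Mermaid renderer produces the diagram; sorting the keys keeps the output stable between runs, so diagram changes show up cleanly in version control.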

DeepWiki’s Model Context Protocol (MCP) server replaces fragmented custom integrations with a single knowledge layer that AI agents can query. Its context compression reduces token usage by more than 90% while retaining essential information, which significantly cuts costs for high-volume AI interactions.
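A worked example makes the 90% figure concrete. The sketch assumes a 50,000-token context and a hypothetical price of $3 per million input tokens (not a quoted DeepWiki or model-vendor price). At a 90% reduction only 5,000 tokens are sent, so the cost of each request falls tenfold.

```python
def compression_savings(tokens: int, reduction: float,
                        usd_per_million: float) -> tuple[int, float, float]:
    """Return (tokens sent after compression, cost before, cost after) for one request."""
    remaining = round(tokens * (1 - reduction))
    cost_before = tokens / 1_000_000 * usd_per_million
    cost_after = remaining / 1_000_000 * usd_per_million
    return remaining, cost_before, cost_after

# Hypothetical inputs: 50,000-token context, 90% reduction, $3 per million tokens.
remaining, before, after = compression_savings(50_000, 0.90, 3.0)
print(f"{remaining} tokens, ${before:.3f} -> ${after:.3f} per request")
```

Under the same assumptions, 100,000 requests a day would cost $15,000 uncompressed versus $1,500 compressed.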

Key Benefits of DeepWiki for Enterprises

DeepWiki addresses the inefficiencies of traditional documentation methods, which consume 20–30% of developer time, or 8–12 hours of a 40-hour week. Automating documentation frees developers to focus on higher-value tasks. Natural language queries and exploration features also simplify onboarding, helping engineers get familiar with new projects or systems quickly.
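The 8–12 hour range follows from a 40-hour week (0.20 × 40 = 8 and 0.30 × 40 = 12). The short sketch below extends the same arithmetic to a team over a year. The team size and the 46 working weeks are illustrative assumptions, not figures from the source.

```python
def documentation_hours(share: float, team_size: int,
                        week_hours: float = 40.0,
                        weeks_per_year: int = 46) -> tuple[float, float]:
    """Return (hours per developer per week, hours per team per year) spent on documentation."""
    per_dev_week = share * week_hours
    return per_dev_week, per_dev_week * team_size * weeks_per_year

# Lower and upper bound per developer, then the midpoint for a hypothetical 10-person team.
print(documentation_hours(0.20, 1)[0], documentation_hours(0.30, 1)[0])
print(documentation_hours(0.25, 10))
```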

For AI-driven automation, DeepWiki bridges the context gap that often limits large language models. By integrating through MCP, AI tools like Claude or GPT-4 can access the contextual information they need to perform complex technical tasks more accurately. During community testing in April 2025, developers working with a React code repository found that DeepWiki’s auto-generated module dependency graphs and detailed API descriptions significantly sped up the process of locating key function implementations.

| Feature | Benefit |
|---|---|
| Semantic Search | Speeds up onboarding by allowing natural language queries of codebases. |
| Architecture Diagrams | Provides clarity on system design with auto-generated visual maps. |
| Steering (.devin/wiki.json) | Ensures critical business logic is documented thoroughly and accurately. |
| MCP Support | Creates a unified knowledge layer compatible with various AI tools. |
| Context Compression | Cuts operational costs by reducing token usage by over 90% in AI interactions. |

DeepWiki also prioritizes security with SOC 2 and ISO 27001 certifications, audit logging, and fine-grained permission controls. While 84% of developers express interest in using AI tools, 46% report concerns about the accuracy of AI-generated outputs. DeepWiki addresses these trust issues by providing transparent documentation that allows teams to validate AI-generated insights against the actual code.

These capabilities enable NAITIVE to deliver precise and efficient AI solutions for its clients.

How NAITIVE Uses DeepWiki in Client Projects

NAITIVE leverages DeepWiki’s automated documentation and real-time context capabilities to accelerate enterprise AI initiatives. When assessing client systems, DeepWiki’s advanced research mode performs semantic analysis similar to a senior engineer’s audit, allowing NAITIVE to conduct thorough technical evaluations and quickly identify automation opportunities. This is particularly useful when building autonomous agent teams that need to understand unfamiliar codebases before tackling complex tasks.

For clients implementing specialized AI solutions, NAITIVE uses DeepWiki’s steering features to ensure critical business logic is well-documented. It also generates Mermaid.js architecture diagrams to visualize legacy systems, helping pinpoint integration opportunities for new AI frameworks. By connecting DeepWiki to AI-native development environments through MCP, NAITIVE ensures seamless access to codebase context throughout the development process, aligning AI solutions with the client’s technical infrastructure.

The combination of DeepWiki’s automated knowledge synthesis and NAITIVE’s expertise in AI development creates a powerful advantage. Instead of spending weeks on manual documentation and code analysis, NAITIVE teams can focus on strategic decisions that drive measurable results. This approach highlights NAITIVE’s dedication to turning AI into a real competitive edge for its clients, delivering outcomes that matter.


Building an Enterprise AI Strategy with DeepWiki

DeepWiki’s advanced documentation and retrieval tools offer enterprises a solid foundation for crafting tailored AI strategies.

Aligning Business Goals with AI Use Cases

DeepWiki turns repositories into structured, actionable assets, empowering AI agents to break down tasks and execute multi-step plans that align with business objectives. This goes beyond simply retrieving documentation - it’s about leveraging Agentic RAG (Retrieval-Augmented Generation), where AI actively deconstructs complex tasks and delivers results based on structured knowledge. By integrating AI into existing systems, businesses can map goals like cost reduction or faster onboarding directly to the codebase areas that matter most.

The .devin/wiki.json configuration file plays a key role in this process. Teams can direct DeepWiki’s focus to high-priority areas, such as compliance folders or critical API layers. This targeted approach turns AI from a general-purpose tool into a business-driven asset tied to measurable outcomes. Enterprises using AI-augmented workflows have reported productivity gains of 40–55% among knowledge workers, and 80% of business leaders plan to integrate AI agents into their strategies within the next 12 to 18 months.

To ensure success, define metrics early. Goals such as shortening the time new developers need to understand a system, or increasing the efficiency of automated workflows, can guide implementation. NAITIVE helps clients set these metrics so that DeepWiki, which supplies the structured data needed to combine multiple AI models on complex problems, delivers measurable value from day one.

Assessing Readiness for DeepWiki Implementation

Before rolling out DeepWiki, it’s important to evaluate documentation quality and ensure stable APIs to support its integration. Teams should also be prepared to provide strategic guidance through the .devin/wiki.json file, using repo_notes to clarify relationships between components like frontend, backend, and infrastructure.
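A minimal sketch of such a steering file, generated from Python so that it can be reviewed and committed like any other code. The field names (repo_notes entries with content; pages entries with title, purpose, and an optional parent) reflect our understanding of the .devin/wiki.json format and should be checked against the Devin documentation. The folder names and note text are hypothetical.

```python
import json
from pathlib import Path

# Assumed schema; the folders (frontend/, backend/, infra/) are hypothetical examples.
WIKI_CONFIG = {
    "repo_notes": [
        {
            "content": (
                "frontend/ is a React app that calls the REST API in backend/. "
                "infra/ holds the deployment configuration for both; "
                "document how a change moves from backend/ through infra/ to production."
            )
        }
    ],
    "pages": [
        {"title": "Architecture Overview",
         "purpose": "How frontend, backend, and infrastructure fit together"},
        {"title": "Backend API",
         "purpose": "Endpoints, authentication, and data models",
         "parent": "Architecture Overview"},
    ],
}

def render_wiki_config(config: dict) -> str:
    """Serialize the config as indented, human-reviewable JSON."""
    return json.dumps(config, indent=2) + "\n"

def write_wiki_config(repo_root: Path, config: dict) -> Path:
    """Write the config to <repo_root>/.devin/wiki.json, creating .devin/ if needed."""
    target = repo_root / ".devin" / "wiki.json"
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(render_wiki_config(config), encoding="utf-8")
    return target
```

Running write_wiki_config(Path("."), WIKI_CONFIG) from the repository root creates the file, which can then be committed with the rest of the code.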

Without proper data readiness, up to 60% of AI projects are at risk of failure.

NAITIVE advises following a "Crawl, Walk, Run" approach. Start with Level 1 maturity (basic knowledge retrieval), then progress to Level 3 (orchestrating complex workflows across departments). Running AI agents in shadow mode - where the AI provides recommendations but humans remain in control - can help identify errors without jeopardizing live systems. Keep in mind the wiki’s validation limits: 30 pages for standard users, 80 for enterprise users, and notes capped at 10,000 characters each.
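Shadow mode can be sketched as a thin wrapper. The agent's proposal is recorded, only the human's decision is acted on, and the agreement rate shows when the agent might be ready for more autonomy. This is an illustrative pattern, not a DeepWiki or NAITIVE API, and the task IDs and decision labels are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class ShadowRunner:
    """Agent proposes, human decides; each pair is logged for later comparison."""
    log: list[tuple[str, str, bool]] = field(default_factory=list)

    def review(self, task: str, agent_proposal: str, human_decision: str) -> str:
        """Record the agent's proposal and return the human's decision, which is what gets executed."""
        self.log.append((task, agent_proposal, agent_proposal == human_decision))
        return human_decision

    def agreement_rate(self) -> float:
        """Share of reviewed tasks where the agent matched the human (0.0 if none)."""
        if not self.log:
            return 0.0
        return sum(matched for _, _, matched in self.log) / len(self.log)

runner = ShadowRunner()
runner.review("reset-password-123", agent_proposal="approve", human_decision="approve")
runner.review("refund-456", agent_proposal="approve", human_decision="escalate")
print(runner.agreement_rate())
```

Because the return value is always the human's decision, wiring this into a live workflow cannot change outcomes; it only collects evidence about the agent.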

Once readiness is confirmed, strong governance practices ensure that DeepWiki-powered AI solutions remain secure and aligned with business needs.

Governance and Risk Management in AI Strategies

DeepWiki’s transparent documentation framework supports robust governance and risk management. The .devin/wiki.json file lets teams set clear guidelines, with up to 10,000 characters of context per note, so that AI outputs align with business logic and safety standards. This kind of human steering is central to a Human-in-the-Loop (HITL) model, which is critical for high-stakes decisions, regulatory compliance, and culturally sensitive content. Teams can write up to 100 notes in total (repo notes and page notes combined), leaving room for detailed human oversight.
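Because these limits are easy to exceed as a wiki grows, a pre-commit or CI check can catch violations early. The sketch below encodes the limits stated in this article (30 pages for standard users, 80 for enterprise, 100 notes in total, 10,000 characters per note). The repo_notes, page_notes, and content field names are assumptions about the .devin/wiki.json schema.

```python
# Limits as stated in this article; note field names are assumptions about the schema.
PAGE_LIMITS = {"standard": 30, "enterprise": 80}
MAX_NOTES = 100          # repo_notes and page_notes combined
MAX_NOTE_CHARS = 10_000  # per note

def validate_wiki_config(config: dict, tier: str = "standard") -> list[str]:
    """Return human-readable limit violations; an empty list means the config fits."""
    errors = []
    pages = config.get("pages", [])
    if len(pages) > PAGE_LIMITS[tier]:
        errors.append(f"{len(pages)} pages exceeds the {tier} limit of {PAGE_LIMITS[tier]}")

    notes = list(config.get("repo_notes", []))
    for page in pages:
        notes.extend(page.get("page_notes", []))
    if len(notes) > MAX_NOTES:
        errors.append(f"{len(notes)} notes exceeds the limit of {MAX_NOTES}")
    for i, note in enumerate(notes):
        if len(note.get("content", "")) > MAX_NOTE_CHARS:
            errors.append(f"note {i} has more than {MAX_NOTE_CHARS} characters")
    return errors

print(validate_wiki_config({"repo_notes": [{"content": "x" * 12_000}]}))
```

A CI job can fail the build whenever the returned list is non-empty.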

"Applying Zero Trust principles to agents, giving only the necessary access and adjusting it as responsibilities evolve, grounds responsible innovation." - Charles Lamanna, Microsoft Corporate President

DeepWiki also reduces the risk of AI hallucinations by generating architecture diagrams and linking directly to source code, which creates a clear audit trail. The repo_notes field can be used to highlight essential areas like security, infrastructure, or compliance, so that no critical documentation is overlooked. A well-organized wiki hierarchy further simplifies audits and navigation.

NAITIVE emphasizes shared accountability between CIOs/CTOs (technical infrastructure) and business leaders (outcome ownership). This ensures AI strategies align with both technical capabilities and business goals. The focus on AI TRiSM (AI Trust, Risk, and Security Management) addresses reliability, privacy, and explainability, which are increasingly important as regulations like the EU AI Act come into play. By combining DeepWiki’s transparent documentation with NAITIVE’s governance frameworks, enterprises can confidently deploy AI agents while maintaining control over vital business processes.

Implementing DeepWiki for Industry-Specific AI Solutions

DeepWiki's core functionality becomes even more powerful when tailored to meet the specific needs of different industries. By aligning its capabilities with targeted AI strategies, businesses can streamline operations, meet regulatory demands, and drive meaningful transformation.

With DeepWiki, enterprises can customize documentation and workflows to fit their industry. Whether it's managing patient records, ensuring compliance in finance, or automating supply chain tasks, the platform adapts to support a wide range of operational needs.

Industry-Specific Use Cases for DeepWiki

Industries with unique requirements, such as strict regulations or specialized workflows, can benefit from carefully configuring repo_notes and pages. For example, financial services teams can configure DeepWiki to prioritize regulatory compliance modules, while healthcare organizations might focus on patient data frameworks and schemas. The pages array lets businesses override automatic indexing and define explicit hierarchies that mirror their operational structure.
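One way to review such a hierarchy before committing it is to print it as an indented outline. The sketch assumes that each entry in pages has a unique title and an optional parent title (both field names are assumptions). It also uses hypothetical financial-services pages and rejects unknown parents, duplicate titles, and parent cycles.

```python
def render_hierarchy(pages: list[dict]) -> str:
    """Return pages as an indented outline, with each child indented under its parent."""
    titles = {p["title"] for p in pages}
    if len(titles) != len(pages):
        raise ValueError("page titles must be unique")
    children: dict = {}
    for p in pages:
        parent = p.get("parent")
        if parent is not None and parent not in titles:
            raise ValueError(f"page {p['title']!r} has unknown parent {parent!r}")
        children.setdefault(parent, []).append(p["title"])

    lines: list[str] = []

    def walk(parent, depth: int) -> None:
        for title in children.get(parent, []):
            lines.append("  " * depth + title)
            walk(title, depth + 1)

    walk(None, 0)
    if len(lines) != len(pages):  # pages unreachable from a root sit in a parent cycle
        raise ValueError("parents must not form a cycle")
    return "\n".join(lines)

# Hypothetical pages for a financial-services repository.
PAGES = [
    {"title": "Regulatory Compliance"},
    {"title": "KYC Checks", "parent": "Regulatory Compliance"},
    {"title": "AML Monitoring", "parent": "Regulatory Compliance"},
    {"title": "Payments API"},
]
print(render_hierarchy(PAGES))
```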

Some real-world examples highlight this flexibility. In December 2025, Power Design launched "HelpBot", an AI system that handles IT support tasks such as password resets and device monitoring, automating over 1,000 hours of repetitive work. Similarly, Ciena integrated AI into more than 100 HR and IT workflows, cutting approval times from days to minutes by connecting the system to existing HRIS and IT platforms. These examples show the kind of measurable results that a structured knowledge layer like DeepWiki's can support. In fact, 92% of leaders anticipate seeing returns from autonomous AI within two years. This adaptability sets the stage for the next step: building autonomous AI agents.

Building Autonomous AI Agents with DeepWiki

Beyond customization, DeepWiki plays a key role in developing autonomous AI agents that link documentation to operational tasks, improving efficiency and accuracy.

The platform's Model Context Protocol (MCP) server provides three tools, read_wiki_structure, read_wiki_contents, and ask_question, which let AI systems access and query documentation programmatically. Clients such as Claude and Cursor can use this interface to query industry-specific content and keep their answers grounded in the repository's context.
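At the protocol level, each of these calls is a JSON-RPC 2.0 tools/call message. The sketch below only builds the message. Transport (HTTP or stdio), the MCP initialize handshake, and the server URL are omitted. The argument names repoName and question are assumptions, so check the server's tools/list response for the actual input schema.

```python
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC ids must be unique within a session
DEEPWIKI_TOOLS = {"read_wiki_structure", "read_wiki_contents", "ask_question"}

def tool_call(name: str, arguments: dict) -> str:
    """Build a JSON-RPC 2.0 'tools/call' request for one of the three DeepWiki tools."""
    if name not in DEEPWIKI_TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })

# Argument names are assumptions; confirm them with the server's tools/list response.
print(tool_call("read_wiki_structure", {"repoName": "facebook/react"}))
print(tool_call("ask_question", {"repoName": "facebook/react",
                                 "question": "Where is useState implemented?"}))
```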

Take Zota, for example. In 2025, this global payments company implemented an AI-powered merchant FAQ agent using Salesforce's Agentforce. The system managed 180,000 annual support inquiries and scaled to support 500,000 merchants around the clock - all without increasing their 300-person workforce. Similarly, NAITIVE helps companies build multi-agent systems using DeepWiki's structured knowledge. These systems include "Superior" agents that delegate tasks to "Subordinate" agents, enabling a 40% boost in productivity across various operations.

Tracking Performance and Continuous Improvement

To ensure success with DeepWiki-based solutions, businesses should track key metrics such as automation rate (tasks completed without human input), response accuracy, and reductions in manual work hours. Enterprises using autonomous AI systems have reported decision-making cycles up to five times faster and insights delivered 50% faster through natural language queries on structured data.
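Once each task is logged, these metrics are simple ratios. Here is a minimal sketch that assumes a hypothetical per-task record containing two flags (whether a person had to intervene and whether the output passed review), plus an estimate of how many minutes the task takes manually. Hours saved are counted only for tasks that needed no human input and passed review.

```python
from dataclasses import dataclass

@dataclass
class TaskRecord:
    needed_human: bool     # True if a person had to step in
    passed_review: bool    # True if the output was judged correct
    manual_minutes: float  # estimated time the task takes without automation

def automation_rate(records: list[TaskRecord]) -> float:
    """Share of tasks completed without human input."""
    return sum(not r.needed_human for r in records) / len(records) if records else 0.0

def accuracy(records: list[TaskRecord]) -> float:
    """Share of tasks whose output passed review."""
    return sum(r.passed_review for r in records) / len(records) if records else 0.0

def manual_hours_saved(records: list[TaskRecord]) -> float:
    """Manual hours avoided on tasks completed without human input that also passed review."""
    return sum(r.manual_minutes for r in records
               if not r.needed_human and r.passed_review) / 60

# Hypothetical task log.
log = [
    TaskRecord(needed_human=False, passed_review=True, manual_minutes=15),
    TaskRecord(needed_human=False, passed_review=True, manual_minutes=30),
    TaskRecord(needed_human=True, passed_review=True, manual_minutes=20),
    TaskRecord(needed_human=False, passed_review=False, manual_minutes=15),
]
print(automation_rate(log), accuracy(log), manual_hours_saved(log))
```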

The read_wiki_structure tool returns the list of topics a wiki covers, which makes it useful for auditing whether industry-specific areas are documented; metrics such as automation rate and accuracy come from the agents' own task records. For larger repositories, Enterprise DeepWiki supports up to 80 pages and 100 notes (each up to 10,000 characters), ensuring AI has the context it needs. To maintain compliance and security, NAITIVE advises implementing policy-based restrictions and human oversight for sensitive tasks, especially in regulated industries like healthcare and finance. Regular updates to the .devin/wiki.json configuration allow companies to refine their AI systems for better outcomes while staying compliant and secure.

Scaling and Governing DeepWiki Across the Enterprise

After achieving success with pilot projects and client rollouts, expanding DeepWiki across an entire organization requires consistent standards and measurable outcomes. This approach is especially critical as global AI investments are projected to reach $297 billion by 2027.

Standardizing Knowledge Practices with DeepWiki

The road to standardization starts with configuration. The .devin/wiki.json file serves as the central control hub, dictating how DeepWiki generates documentation across repositories. By committing this file to version control, organizations can maintain consistent documentation practices across all teams.

DeepWiki Enterprise supports up to 80 pages per wiki and 100 total notes, each with a 10,000-character limit, providing ample room for large organizations to document their complex systems. The pages array allows teams to establish clear parent-child relationships that reflect their operational structure. Meanwhile, the repo_notes feature offers high-level insights into how frontend, backend, and infrastructure components interact. For even greater consistency, the Model Context Protocol (MCP) server provides programmatic access to documentation across AI tools like Claude, Cursor, and Windsurf. This ensures all teams receive consistent, contextually relevant answers.

To keep documentation accurate and avoid "documentation debt", establish a cross-functional team to audit wiki pages quarterly. This team can identify content that needs updating, archiving, or expansion. Following this, track the impact of these standards on productivity and cost savings.
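A quarterly audit is easier to run when it starts from a list of pages that nobody has reviewed recently. The sketch assumes the team keeps its own record of when each page was last reviewed (a hypothetical mapping, not something DeepWiki is known to expose). It flags pages that are older than about one quarter, oldest first.

```python
from datetime import date, timedelta

def pages_due_for_review(last_reviewed: dict[str, date], today: date,
                         max_age_days: int = 90) -> list[str]:
    """Return page titles not reviewed within max_age_days, oldest first."""
    cutoff = today - timedelta(days=max_age_days)
    stale = [title for title, reviewed in last_reviewed.items() if reviewed < cutoff]
    return sorted(stale, key=lambda title: last_reviewed[title])

# Hypothetical review log kept by the audit team.
reviews = {
    "Payments API": date(2025, 3, 15),
    "Onboarding Guide": date(2025, 6, 20),
    "Auth Service": date(2025, 1, 10),
}
print(pages_due_for_review(reviews, today=date(2025, 7, 1)))
```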

Measuring ROI and Business Impact

Once documentation is standardized, the next step is to measure the business value of DeepWiki. Begin by establishing benchmarks before implementing DeepWiki, such as how much time developers currently spend on onboarding and maintaining documentation.

Measure both quantity and quality metrics. Quantity metrics include time saved per developer, the number of repositories indexed, and API response times. Quality metrics focus on the accuracy of architecture diagrams, user satisfaction, and error rate reductions. For executives, translate these metrics into financial terms - calculate the cost per indexed page, developer hours saved in dollars, and overall ROI by comparing productivity gains against implementation costs.
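The financial translation is straightforward arithmetic. In the sketch below, every input is a hypothetical placeholder: 4 hours saved per developer per week, 20 developers, $75 per hour, 46 working weeks, a $120,000 implementation cost, and 800 indexed pages. Replace these with the baseline measurements described above.

```python
def documentation_roi(hours_saved_per_dev_week: float, developers: int,
                      hourly_cost_usd: float, weeks: int,
                      implementation_cost_usd: float, indexed_pages: int) -> dict[str, float]:
    """Translate time savings into dollars, ROI, and cost per indexed page."""
    savings = hours_saved_per_dev_week * developers * weeks * hourly_cost_usd
    return {
        "savings_usd": savings,
        "roi": (savings - implementation_cost_usd) / implementation_cost_usd,
        "cost_per_page_usd": implementation_cost_usd / indexed_pages,
    }

# Hypothetical placeholder inputs.
print(documentation_roi(4, 20, 75, 46, 120_000, 800))
```

With these placeholders, savings come to $276,000, which is an ROI of 1.3 (130%) at a cost of $150 per indexed page.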

Real-world AI use cases provide compelling examples. For instance, AI-powered research tools in legal services have increased search efficiency by 77%, while automated credit memo preparation in finance has reduced due diligence time by 80%. Additionally, businesses using AI for core operations report cost reductions of over 10% across various units. However, scaling remains a challenge - over 70% of organizations have implemented only a fraction of their planned Generative AI projects. The solution? Scale incrementally. Start with a single team or repository, then refine and measure results before expanding further.

"AI delivers ROI when projects are aligned to clear business goals, measured against meaningful metrics, and scaled with purpose." - The deepset Team

Long-Term Governance and Compliance

Sustained success with DeepWiki depends on robust governance. Organizations with mature AI governance frameworks can capture 20-40% more value from their models, yet only 35% of C-suite leaders can fully explain their AI systems to stakeholders.

Adopt a six-pillar framework that includes Policy & Standards, Risk Management, Transparency, Accountability, Monitoring & Evaluation, and Continuous Improvement. For DeepWiki, implement Task-Based Access Control (TBAC) to manage permissions. For instance, grant all users access to read_wiki_structure but restrict read_wiki_contents to authorized personnel. Assign a unique identity to every agent in each environment (production, development, and test) and centralize logging via tools like OpenTelemetry or Log Analytics to ensure comprehensive audit trails.
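Here is a minimal sketch of that task-based rule. read_wiki_structure is open to every identity, while read_wiki_contents requires an explicit grant; restricting ask_question the same way is an added assumption. The identities are hypothetical, with separate production and development agents. Python's standard logging stands in for an OpenTelemetry or Log Analytics exporter, and each decision emits one audit line keyed by the caller's identity.

```python
import logging

logging.basicConfig(level=logging.INFO, format="%(name)s %(message)s")
audit_log = logging.getLogger("deepwiki.audit")

# Hypothetical policy. read_wiki_structure is open to every identity; other tools
# are denied unless the identity is listed (the ask_question entry is an assumption).
OPEN_TOOLS = {"read_wiki_structure"}
GRANTS = {
    "read_wiki_contents": {"agent-prod-compliance", "alice@example.com"},
    "ask_question": {"agent-prod-compliance"},
}

def authorize(identity: str, tool: str) -> bool:
    """Decide whether identity may call tool, and write one audit record per decision."""
    allowed = tool in OPEN_TOOLS or identity in GRANTS.get(tool, set())
    audit_log.info("identity=%s tool=%s decision=%s",
                   identity, tool, "allow" if allowed else "deny")
    return allowed

print(authorize("agent-dev-compliance", "read_wiki_structure"))
print(authorize("agent-dev-compliance", "read_wiki_contents"))
```

Denying by default means that a newly added tool stays closed until someone grants access to it explicitly.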

Before deploying DeepWiki at scale, conduct red team exercises to simulate potential attacks like prompt injection or data poisoning. This is especially critical in regulated industries, where penalties for non-compliance can be steep. For example, the EU AI Act imposes fines of up to 7% of global turnover for prohibited AI practices. A recent case in January 2025 saw Block Inc. (Cash App) pay $80 million due to inadequate automated Anti-Money Laundering controls, underscoring the risks of weak governance.

"Good AI governance accelerates revenue, de‑risks regulatory exposure and earns stakeholder trust." - Soumendra Kumar Sahoo, Executive Playbook for AI Governance

NAITIVE offers a structured 90-day governance plan to help clients scale DeepWiki safely and effectively. The process begins with a gap analysis in the first 30 days, followed by policy drafting and proof-of-concept monitoring in the next 30 days, and concludes with a full rollout and training in the final 30 days. This phased approach ensures compliance, safety, and measurable business impact as DeepWiki scales across the enterprise.

Conclusion

DeepWiki is redefining how businesses approach AI by shifting from scattered tools to intelligence grounded in context - bringing domain-specific knowledge to the forefront and enabling AI to deliver tangible results. By addressing the "context gap" that often limits AI's potential, it offers the infrastructure necessary for autonomous agents to handle complex tasks at scale.

The impact is clear. Engineering teams using DeepWiki-powered agents have slashed .NET Framework to .NET Core migration timelines from several months to just two weeks. Companies embracing automation are growing their revenue 2.5 times faster than those that don’t. Agentic AI adoption has reduced engineering workloads by 40% and design lead times by 60%. Looking ahead, 80% of automation leaders plan to ramp up investments in AI agents by 2025, with the global agentic AI market expected to hit $103.6 billion by 2032.

"What once took months now delivers results swiftly - with Devin." - The Cognition Team

These examples highlight the importance of a strategic approach to adoption. Success hinges on more than just technology - it’s about redesigning processes and managing organizational change. In fact, while technology accounts for 20% of automation value, the remaining 80% comes from these critical efforts. DeepWiki’s context-driven intelligence, paired with NAITIVE's expertise in governance and process redesign, ensures meaningful outcomes. NAITIVE's phased approach, from identifying gaps to full-scale implementation, builds trust through ongoing improvement.

The evolution from basic chatbots to fully autonomous agents is already happening. Organizations that standardize knowledge practices, rigorously track ROI, and govern AI with intention will unlock the full potential of this transformation. With DeepWiki and NAITIVE leading the way, AI can move beyond the buzzword stage and become a true competitive advantage.

FAQs

How does DeepWiki streamline onboarding and save developers time on documentation?

DeepWiki takes the hassle out of onboarding by turning your codebase into an AI-driven knowledge center. It scans your repository, creating clear, context-aware documentation like module summaries, API references, and practical usage examples. Plus, it stays in sync with your code as it evolves. This means new engineers can get up to speed faster by simply asking natural-language questions - no more digging through scattered READMEs or buried comments.

For enterprise teams, DeepWiki works seamlessly with private repositories, offering a centralized, always-updated knowledge hub. By automating documentation updates, it frees developers to focus on creating solutions instead of spending time writing or maintaining reference materials. The outcome? New hires ramp up quickly, and your team saves valuable time.

How does the Model Context Protocol (MCP) help integrate AI with DeepWiki?

The Model Context Protocol (MCP) serves as a standardized communication framework, allowing AI models to interact with external resources in a structured way. DeepWiki uses MCP to connect AI systems with enterprise data effortlessly, enabling access to current, domain-specific resources like code repositories, documentation, and datasets.

Here's how it works: when an AI agent connected to DeepWiki needs context, for example to understand a codebase, it sends an MCP request to the DeepWiki server. The server gathers the relevant information, packages it as an MCP response, and returns it to the agent. This gives the AI precise, up-to-date context for tasks like navigating code, conducting security reviews, or assisting with onboarding. In short, MCP gives AI tools a standard way to reach enterprise resources and put them to work.
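On the return path, the server replies to a tools/call request with either a result or a JSON-RPC error. The result's content is a list of typed blocks. The sketch below extracts the text blocks and surfaces both kinds of failure: protocol errors and tool errors flagged with isError. The answer text in the usage example is made up for illustration.

```python
import json

def tool_result_text(raw: str) -> str:
    """Extract text from an MCP tools/call response, raising on protocol or tool errors."""
    msg = json.loads(raw)
    if "error" in msg:  # JSON-RPC level failure, e.g. unknown method or invalid params
        raise RuntimeError(f"JSON-RPC error {msg['error']['code']}: {msg['error']['message']}")
    result = msg["result"]
    text = "\n".join(block["text"] for block in result.get("content", [])
                     if block.get("type") == "text")
    if result.get("isError"):  # the tool ran but reported a failure
        raise RuntimeError(f"tool error: {text}")
    return text

reply = ('{"jsonrpc": "2.0", "id": 1, "result": {"content": '
         '[{"type": "text", "text": "Example answer text"}], "isError": false}}')
print(tool_result_text(reply))
```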

How does DeepWiki ensure the security and accuracy of its AI-generated documentation?

DeepWiki places a strong emphasis on security and accuracy, offering a platform designed specifically for medium- and large-scale organizations. Built on a closed-source cloud infrastructure, it uses an enterprise-level, compliance-driven architecture to safeguard its operations. By isolating proprietary AI models, DeepWiki protects them from external interference. Data exchanges with AI tools run through its Model Context Protocol (MCP) server, adding a further layer of protection for customer applications.

When it comes to accuracy, DeepWiki leverages advanced large language models combined with continuous analysis of repository-level code. By indexing entire source trees and capturing both syntactic and semantic details, the platform creates dynamic, wiki-style documentation that stays current with real-time updates. To make things even more efficient, the platform includes a “talk-to-Devin” assistant. This tool enables users to refine and verify content instantly, minimizing the chances of outdated or incorrect information slipping through.