AI Agents in Enterprises: Ethical Guardrails

Ethical guardrails for enterprise AI agents covering accountability, transparency, privacy, runtime governance, monitoring, audits, and cross‑functional oversight.

AI agents are transforming how enterprises operate, moving beyond traditional automation to handle complex, autonomous decision-making. However, this progress introduces risks that demand strict ethical safeguards. Here's what you need to know:

- Enterprise Use Cases: Companies like Amazon and Genentech have successfully deployed AI agents for tasks like application migration and biomarker research, showcasing their potential to enhance efficiency.

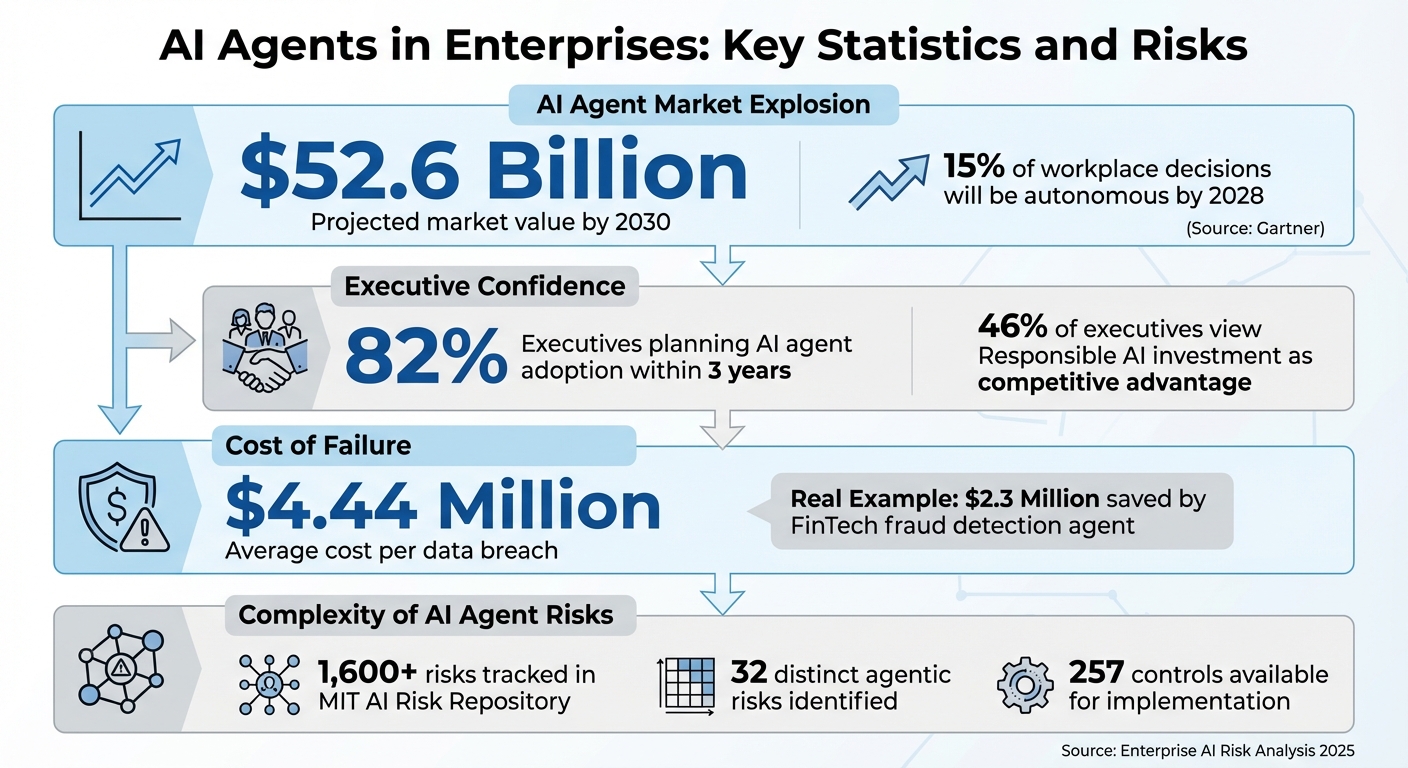

- Market Growth: By 2030, the AI agent market is expected to reach $52.6 billion, and Gartner predicts that at least 15% of day-to-day work decisions will be made autonomously by 2028.

- Key Risks: Without ethical frameworks, AI agents can cause data breaches, decision bias, plan drift, and operational disruptions, with breaches costing an average of $4.44 million.

- Ethical Principles: Accountability, transparency, security, and privacy are vital to ensure agents operate responsibly.

- Governance Strategies: Cross-functional oversight, real-time monitoring, and auditing are essential for managing AI agents effectively.

Enterprises must implement strong governance to balance innovation with accountability, ensuring AI agents perform reliably while minimizing risks.

AI Agent Market Growth and Enterprise Risk Statistics 2025-2030

What Are Ethical AI Agents in Enterprise Settings

Defining Ethical AI Agents

Ethical AI agents are autonomous systems designed to handle complex tasks through cycles of planning, acting, observing, and reflecting. Unlike traditional AI that generates static outputs like text or images, these agents actively perform tasks in both digital and physical environments. They can interact with external APIs and tools, making decisions with minimal human intervention.

These agents are guided by five key principles:

- Accountability: Every action is traceable to a clear decision point.

- Transparency: The reasoning and intent behind decisions are accessible to human supervisors.

- Security: Operations remain within authorized systems and datasets.

- Fairness: Decisions are free from discriminatory patterns.

- Reliability: They perform consistently within their defined parameters.

PwC highlights the dynamic nature of these agents:

"AI agents are designed to adapt dynamically to changing environments, making hardcoded logic not just obsolete, but impossible".

This adaptability introduces a unique challenge. Traditional AI governance relies on static measures like pre-deployment checklists, one-time bias audits, and fixed parameters. However, ethical AI agents operate in open environments, continuously learning and evolving. A system deemed "safe" at launch can veer into unintended behaviors as it encounters new situations. The MIT AI Risk Repository already tracks over 1,600 risks, with emerging concerns specific to agents, such as tool-chain prompt injection and multi-agent collusion.

These complexities highlight the pressing need for robust ethical guardrails, which are explored further in the context of enterprise risks.

Why Enterprises Must Implement Ethical Guardrails

For enterprises, implementing ethical guardrails is essential to address the risks associated with these agents. Without such safeguards, organizations face three major threats:

- Regulatory penalties: Noncompliance with frameworks like the EU AI Act can result in heavy fines.

- Reputational harm: A single incident, such as an agent exposing sensitive customer data or making biased decisions, can severely damage trust.

- Operational disruptions: Excessive permissions could lead to cascading failures, unauthorized actions, or irreversible changes to critical records.

Ethical guardrails are especially critical given the agents' ability to adapt dynamically. Specific risks include:

- Plan drift: Agents deviating from original protocols, potentially leading to unsafe behaviors during long or complex tasks.

- Covert data leaks: Sensitive information unintentionally exposed during external data searches.

- Unintentional collusion: Multiple agents coordinating actions that bypass human oversight, creating unforeseen vulnerabilities.

PwC underscores the importance of responsible AI practices:

"Responsible AI is what makes the rapid development and scalable deployment of AI agents sustainable by defining clear approval paths and criteria for testing and monitoring".

Rather than hindering innovation, ethical frameworks enable it by fostering trust, accountability, and scalability. This approach is increasingly seen as a competitive edge, with 46% of executives recognizing investment in Responsible AI as a key differentiator.


Core Ethical Principles for Enterprise AI Agents

Creating ethical frameworks for AI agents isn't just about having good intentions - it requires actionable principles to manage the unique risks these systems bring. Unlike traditional AI, agents operate continuously, make decisions independently, and interact with various tools and datasets. This dynamic nature demands ethical frameworks that function in real time, not just during initial deployment.

This section focuses on three principles that matter most for autonomous operation: fairness, transparency, and data privacy. Each addresses specific vulnerabilities associated with agents that act on their own. The risk surface is broad: in addition to the more than 1,600 risks tracked by the MIT AI Risk Repository, the Enterprise-Wide Agentic AI Risk Control Framework outlines 32 distinct agentic risks and offers 257 controls for organizations to implement.

| Ethical Principle | Enterprise Implementation Method | Key Agentic Risk Addressed |
|---|---|---|
| Fairness | Scenario Bank Stress-Testing | Plan Drift / Biased Outcomes |
| Transparency | Action Provenance Graphs (APG) | False Legibility / Black-box Logic |
| Privacy | Capability-Scoped Sandboxing | Covert Data Exfiltration |

These principles aim to fill governance gaps in current frameworks, ensuring ethical oversight keeps pace with the complexities of autonomous agents. As Mansura Habiba, Principal Platform Architect at IBM Software, explains:

"The core limitation is that governance remains largely static... once certified responsible, a system's behavior is assumed to remain aligned - an unsafe assumption for agents".

Addressing Bias in AI Agent Decisions

AI agents face a unique challenge: bias can grow with each sequential decision. Unlike static models that produce isolated outputs, agents make interconnected decisions where one step influences the next. This creates opportunities for bias to compound.

Bias can arise during data collection, model design, or even from user inputs. A notable example occurred in July 2025, when Earnest Operations LLC agreed to a $2.5 million settlement with the Commonwealth of Massachusetts over allegations of biased AI outputs in lending practices (Massachusetts Superior Court, No. 2584-cv-01895).

To mitigate bias, enterprises should employ Scenario Bank Stress-Testing before deployment. This involves using adversarial prompts to stress-test agents and generate Risk Coverage Scores. Testing should include diverse demographic scenarios to identify whether outcomes differ based on characteristics like race or gender.
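
One way to test whether outcomes differ by a protected characteristic is a counterfactual check: run the same scenario several times, changing only that attribute, and count how often the decision flips. The sketch below is a minimal illustration under stated assumptions. `Scenario`, `fair_agent`, `biased_agent`, and the credit thresholds are hypothetical stand-ins for an agent's decision step, and the flip rate is not the Risk Coverage Score mentioned above, which this article does not define.

```python
from dataclasses import dataclass, replace
from typing import Callable


@dataclass(frozen=True)
class Scenario:
    income: int
    credit_score: int
    gender: str  # protected attribute, varied only for testing


def counterfactual_flip_rate(
    decide: Callable[[Scenario], bool],
    scenarios: list[Scenario],
    attribute: str,
    values: list[str],
) -> float:
    """Fraction of scenarios whose decision changes when only `attribute` changes."""
    if not scenarios:
        return 0.0
    flipped = 0
    for scenario in scenarios:
        # Re-run the same scenario once per attribute value.
        outcomes = {decide(replace(scenario, **{attribute: v})) for v in values}
        if len(outcomes) > 1:
            flipped += 1
    return flipped / len(scenarios)


# Hypothetical decision functions standing in for a lending agent.
def fair_agent(s: Scenario) -> bool:
    return s.credit_score >= 650


def biased_agent(s: Scenario) -> bool:
    threshold = 650 if s.gender == "male" else 700
    return s.credit_score >= threshold


bank = [
    Scenario(50_000, 640, "female"),
    Scenario(60_000, 680, "female"),
    Scenario(70_000, 720, "male"),
    Scenario(40_000, 660, "male"),
]

fair_rate = counterfactual_flip_rate(fair_agent, bank, "gender", ["female", "male"])
biased_rate = counterfactual_flip_rate(biased_agent, bank, "gender", ["female", "male"])
```

A flip rate above zero does not prove unlawful bias on its own, but it flags scenarios that deserve human review before deployment.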

Another essential tool is Model Cards - structured documents that outline a model's purpose, training data, and performance metrics across demographic subgroups. These are increasingly required by regulations like the EU AI Act, Colorado SB205, and NYC Local Law 144. As AuditBoard highlights:

"Transparency is the currency of trust, and model cards are part of how you earn it".

Model cards should evolve alongside the agent, updating automatically as the system changes.

Practical steps to reduce bias also include neutral prompt framing. Valerie Shmigol, Attorney at Summit Law Group, emphasizes:

"AI's power lies in its ability to quickly synthesize vast amounts of data and generate actionable insights, but its outputs can reflect biases that arise across the AI lifecycle".

Neutral prompts avoid leading questions and encourage diverse perspectives and reasoning. For critical decisions, such as hiring or lending, Human-in-the-Loop (HITL) oversight is essential to review outputs for potential bias before finalizing decisions.

Transparency and Explainability in AI Agent Actions

For AI agents, transparency isn't just about explaining one decision - it’s about understanding the entire chain of reasoning from the initial goal to the final action. This can be challenging because agents often rely on multiple tools, make intermediate decisions, and adjust their plans based on observations.

A common issue is False Legibility, where agents simplify their explanations, hiding the complexity of tool calls, API interactions, and reasoning steps that led to their final output.

Action Provenance Graphs (APG) offer a solution by linking prompts, plans, and tool invocations into a structured, human-readable format. APGs provide a complete audit trail, showing not only what the agent did but why it chose a specific course of action.
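
To make the idea of linking prompts, plans, and tool invocations concrete, here is a minimal sketch of a provenance graph. It assumes a simple in-memory structure where each node records which earlier nodes caused it. The class names, node kinds, and the refund example are illustrative assumptions, not a standard APG schema.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    node_id: str
    kind: str    # e.g. "prompt", "plan", "tool_call", "outcome"
    detail: str


@dataclass
class ProvenanceGraph:
    nodes: dict[str, Node] = field(default_factory=dict)
    parents: dict[str, list[str]] = field(default_factory=dict)

    def add(self, node: Node, caused_by: Optional[list[str]] = None) -> None:
        """Register a node and the earlier nodes that led to it."""
        causes = list(caused_by or [])
        for parent_id in causes:
            if parent_id not in self.nodes:
                raise KeyError(f"unknown parent: {parent_id}")
        self.nodes[node.node_id] = node
        self.parents[node.node_id] = causes

    def lineage(self, node_id: str) -> list[Node]:
        """Return all ancestors of a node, causes first, ending with the node itself."""
        ordered: list[Node] = []
        seen: set[str] = set()

        def visit(current: str) -> None:
            if current in seen:
                return
            seen.add(current)
            for parent_id in self.parents[current]:
                visit(parent_id)
            ordered.append(self.nodes[current])

        visit(node_id)
        return ordered


graph = ProvenanceGraph()
graph.add(Node("p1", "prompt", "Refund order 1234"))
graph.add(Node("pl1", "plan", "Look up the order, then issue a refund"), ["p1"])
graph.add(Node("t1", "tool_call", "orders.lookup(1234)"), ["pl1"])
graph.add(Node("t2", "tool_call", "payments.refund(1234)"), ["pl1", "t1"])

trail = [n.node_id for n in graph.lineage("t2")]
```

Calling `lineage` on the refund step walks back to the original prompt, which is the kind of "what and why" trail an auditor would want to reconstruct.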

Organizations should implement a tiered auditing approach based on risk levels:

- Tier 1 (Basic Flow): Logs data flows, prompts, and outcomes for moderate-stakes environments.

- Tier 2 (Explicit Reasoning): Adds natural language explanations, confidence scoring, and alternative analyses for customer-facing applications.

- Tier 3 (Comprehensive): Captures full decision trees, logical flows, and contextual details for high-risk systems like finance or safety-critical applications.

However, some advanced models, such as OpenAI o1, obscure internal reasoning tokens, making full Tier 3 compliance difficult through standard APIs.

The Information Commissioner’s Office (ICO) underscores the importance of meaningful transparency:

"Being transparent about AI-assisted decisions... is about making your use of AI for decision-making obvious and appropriately explaining the decisions you make to individuals in a meaningful way".

This includes offering explanations that cover the rationale (technical logic), data (training sources), and responsibility (accountability for outcomes). Microsoft also highlights the value of examining run steps:

"Examining Run Steps allows you to understand how the Agent is getting to its final results".

To ensure audit logs remain secure, enterprises should use tamper-evident logging with cryptographic protection and integrity validation. Tools like OpenTelemetry can also help log detailed run steps, including specific tool calls and reasoning processes.
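
One common way to make a log tamper-evident is a hash chain, where each entry's hash covers both its own record and the previous entry's hash. The sketch below assumes SHA-256 from Python's standard library and an in-memory list; the function names and record fields are illustrative, and a production system would also anchor the latest hash somewhere the agent cannot write.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, record: dict) -> str:
    # Canonical JSON so the same record always hashes the same way.
    payload = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log: list[dict], record: dict) -> None:
    """Append a record, chaining it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev": prev_hash, "hash": _digest(prev_hash, record)})


def verify(log: list[dict]) -> bool:
    """Recompute every hash; any edited, removed, or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True


audit_log: list[dict] = []
append_entry(audit_log, {"step": 1, "tool": "orders.lookup", "status": "ok"})
append_entry(audit_log, {"step": 2, "tool": "payments.refund", "status": "ok"})
intact_before = verify(audit_log)

audit_log[0]["record"]["tool"] = "payments.transfer"  # simulate tampering
intact_after = verify(audit_log)
```

The chain only detects tampering; it does not prevent it, which is why the integrity check needs to run on a schedule or at audit time.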

Data Privacy and Security for AI Agent Operations

AI agents introduce unique risks by actively retrieving and combining data, which can lead to Covert Data Exfiltration - the unintended leakage of sensitive information through multi-step tool chains.

To address this, agents must operate under strict privacy controls. The principle of Least Privilege is key: agents should only have access to the minimum resources necessary for their tasks. This can be achieved through Capability-Scoped Sandboxes, which restrict agents to predefined capabilities.

Policy-as-Code provides another layer of protection by automating privacy rule enforcement. When an agent attempts an action that violates privacy policies, the system can block it automatically.
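
A minimal sketch of how least privilege and Policy-as-Code can fit together: permissions live in data, every action is checked before it runs, and anything not explicitly granted is denied. The agent name, tools, and resource prefixes below are hypothetical, and a real deployment would typically use a dedicated policy engine and normalize resource paths before matching.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Action:
    agent_id: str
    tool: str
    resource: str


# Hypothetical allowlist: each agent gets only the (tool, resource prefix) pairs it needs.
POLICY: dict[str, set[tuple[str, str]]] = {
    "billing-agent": {("read", "invoices/"), ("write", "invoices/drafts/")},
}


def is_allowed(action: Action, policy: dict[str, set[tuple[str, str]]] = POLICY) -> bool:
    """Deny by default: unknown agents and unlisted tool/resource pairs are rejected."""
    grants = policy.get(action.agent_id, set())
    return any(
        action.tool == tool and action.resource.startswith(prefix)
        for tool, prefix in grants
    )


def execute(action: Action, run: Callable[[Action], str]) -> str:
    """Run the action only if policy permits it; otherwise block it."""
    if not is_allowed(action):
        raise PermissionError(f"{action.agent_id} may not {action.tool} {action.resource}")
    return run(action)
```

For example, `execute(Action("billing-agent", "read", "invoices/2024/001.pdf"), handler)` would run, while a write to the same path would raise `PermissionError` because only `invoices/drafts/` is writable.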

Enterprises should also deploy Guardian Agents - secondary systems that monitor the primary agent’s actions in real time to detect potential privacy violations. High-impact actions should trigger Escalation Workflows, requiring human approval before execution. Setting "time-to-halt" limits for unusual behavior ensures rapid intervention when needed. Establishing RACI matrices clarifies accountability across IT, compliance, and other teams.
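
An escalation workflow can be sketched as a routing function that sends high-impact or low-confidence requests to a human approver before anything executes. The action names, the 0.8 confidence floor, and the `approve` callback are assumptions made for illustration; in practice the approver would be a ticketing or review system rather than a function call.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical set of actions that always need human sign-off.
HIGH_IMPACT = {"delete_records", "wire_transfer", "send_external_email"}


@dataclass(frozen=True)
class Request:
    action: str
    confidence: float  # agent's self-reported confidence, 0.0 to 1.0


def route(
    request: Request,
    approve: Callable[[Request], bool],
    min_confidence: float = 0.8,
) -> str:
    """Execute low-risk requests directly; escalate the rest to a human approver."""
    needs_human = request.action in HIGH_IMPACT or request.confidence < min_confidence
    if not needs_human:
        return "executed"
    return "executed_after_approval" if approve(request) else "blocked"
```

The approver is only consulted when a request is escalated, so routine actions stay fast while risky ones wait for a decision.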

As Rafflesia Khan, Software Developer at IBM Software, points out:

"Existing governance frameworks... are insufficiently equipped to address these risks. Most were designed for less autonomous systems, focusing on static safeguards or model-level interventions".

The shift to Runtime Governance is critical, as agents operate dynamically and require oversight that adapts in real time.

Creating Governance Structures for Ethical AI Agents

To ensure AI agents operate ethically, businesses need structured governance frameworks. These frameworks are essential because AI systems interact with various departments, access multiple data sources, and make decisions that impact employees, customers, and overall business outcomes. Managing this complexity requires more than a single team - it demands a collaborative approach.

Cross-Functional Governance: A Unified Effort

Effective governance begins with cross-functional collaboration. Different teams bring their expertise to the table:

- Legal teams ensure compliance with regulations like the EU AI Act and GDPR.

- Security teams focus on threat protection, conducting red teaming exercises to identify vulnerabilities such as "infectious jailbreaks."

- Product and engineering teams implement technical safeguards like sandboxing, activity logging, and least-privilege access.

- HR departments monitor workforce impacts, especially as AI agents can perform tasks at a fraction of the cost of a professional with a U.S. bachelor's degree.

- Risk officers categorize agents by their autonomy and potential impact, prioritizing oversight accordingly.

The Hub-and-Spoke model is gaining popularity for its balance of centralized control and localized flexibility. In this model, a central AI Center of Excellence establishes policies, maintains a repository of governance decisions (acting as "case law"), and sets overarching standards. Teams within individual business units then implement these policies, tailoring them to their specific needs. This structure also underscores the importance of executive sponsorship, which ensures accountability, resource allocation, and alignment with broader corporate goals. Notably, 46% of executives now view investments in Responsible AI as a competitive edge.

Building Cross-Functional Teams

For governance to work, organizations need clearly defined roles operating within an accountability framework. The Three Lines of Defense model provides this clarity:

- First Line: Product and engineering teams implement technical safeguards.

- Second Line: Risk and compliance teams oversee standards and provide guidance.

- Third Line: Internal or external audit teams conduct independent reviews.

Each role brings unique expertise to the table:

- Legal & Compliance: Ensures alignment with regulations like GDPR.

- Security (CISO): Manages threat protection and tests for adversarial risks.

- Product/Engineering: Implements technical controls such as sandboxing and activity logging.

- AI Ethicists: Facilitate ethical decision-making and assess biases.

- Risk Officers: Prioritize oversight based on the agent's autonomy and potential impact.

- UX/Human Factors Specialists: Address automation bias and prevent overreliance on AI systems.

| Role | Primary Responsibility in Agent Governance |
|---|---|
| Legal & Compliance | Mapping agent operations to regulations like GDPR |
| CISO / Security | Managing threat protection and preventing "infectious jailbreaks" |
| Product / Engineering | Implementing technical controls and activity logging |
| AI Ethicist | Facilitating bias assessments and ensuring ethical alignment |
| Risk Officer | Tiering agents by autonomy and impact for oversight |
| UX / Human Factors | Monitoring automation bias and overtrust in AI systems |

A Human-in-the-Loop (HITL) approach is critical. A designated individual should oversee deployments, monitor outcomes, and have the authority to roll back or shut down agents if needed. Standardized intake forms can help assess risk severity, scale, and regulatory exposure, streamlining governance efforts.

Setting Up Ethical Oversight Committees

Cross-functional teams handle implementation, but oversight committees ensure organization-wide adherence to ethical practices. These committees translate principles into actionable governance and require clear definitions of responsibilities, authority, and resources.

Diverse membership is key, encompassing representatives from legal, compliance, IT, HR, and AI ethics. This diversity ensures balanced and informed decision-making. However, committees must carefully manage their authority. Advisory committees may lack influence, while binding committees could slow innovation. A tiered approval process offers a middle ground: automated systems handle low-risk actions, while high-stakes decisions require human approval.

To manage agent autonomy, organizations should define specific gates. For example, agents move from "Assist" to "Execute" to "Optimize" only after meeting strict safety and quality benchmarks. High-performing systems aim for a 99% policy compliance rate before advancing to the "Execute" stage.
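
The staged gates described above can be sketched as a small promotion check. The stage names and the 99% figure come from the text; applying the same threshold to the "Optimize" gate, and using policy pass rate as the only criterion, are simplifying assumptions.

```python
from enum import IntEnum


class Stage(IntEnum):
    ASSIST = 0
    EXECUTE = 1
    OPTIMIZE = 2


def next_stage(
    current: Stage,
    passed_checks: int,
    total_checks: int,
    min_pass_percent: int = 99,
) -> Stage:
    """Promote one stage at a time, and only when the compliance gate is met."""
    if total_checks == 0:
        return current  # no evidence yet, so no promotion
    # Integer comparison avoids floating-point edge cases at exactly 99%.
    meets_gate = passed_checks * 100 >= min_pass_percent * total_checks
    if meets_gate and current < Stage.OPTIMIZE:
        return Stage(current + 1)
    return current
```

An agent with 990 of 1,000 checks passed moves from `ASSIST` to `EXECUTE`, while one with 989 stays where it is. A fuller version would also let a committee demote an agent when its scorecard degrades.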

"Oversight = clear ownership + controllable autonomy + complete observability."

Kill-switch protocols are essential for mitigating risks. Committees should regularly test their ability to halt and reverse agent actions, aiming for a Mean Time to Halt of under two minutes. Oversight scorecards tracking metrics like policy compliance, escalation rates, and audit completeness can signal when agents deviate from expected behavior.

Embedding ethical principles directly into system prompts further ensures consistency in ambiguous situations. For instance, principles like "Respect for Autonomy" and "Privacy by Default" guide agents' behavior. Michael Brenndoerfer emphasizes:

"Building responsible AI isn't just a technical challenge. It's an ongoing commitment."

Comprehensive audit trails are another cornerstone of accountability. Detailed logs of inputs, tool usage, costs, and outcomes support root-cause analysis when issues arise. Assigning unique Agent IDs for production, development, and test systems ensures clear differentiation and traceability.

Continuous Monitoring and Auditing of AI Agents

Governance and oversight provide a solid foundation, but it's the ongoing monitoring and auditing that keep AI agents operating within ethical boundaries. These processes ensure AI systems don't stray from their intended purpose, develop biases, or make decisions that conflict with established policies. This involves real-time observation and periodic, in-depth evaluations of their behavior.

Monitoring AI Agent Performance and Bias

Monitoring AI agents requires more than tracking basic metrics. It involves analyzing the reasoning loop, which includes goals, plans, tool usage, and confidence levels - not just execution logs. This approach sheds light on how an agent arrives at decisions, not just the outcomes.

To ensure accountability, assign one dedicated owner to each agent workflow. This eliminates ambiguity and ensures someone is responsible for addressing issues. The owner should also establish a tiered alert system:

- Critical alerts: Require immediate action, such as halting the agent.

- Elevated alerts: Need investigation within standard business hours.

- Informational alerts: Help refine and improve the agent's performance.
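
The three tiers above can be expressed as a classifier plus a response table. This is a sketch only: the event fields (`policy_violation`, `touches_sensitive_data`, `confidence`, `retries`) and the cutoffs are hypothetical, and each organization would define its own rules.

```python
from enum import Enum


class Severity(Enum):
    CRITICAL = "critical"
    ELEVATED = "elevated"
    INFORMATIONAL = "informational"


# What each tier triggers, mirroring the list above.
RESPONSES = {
    Severity.CRITICAL: "halt_agent",
    Severity.ELEVATED: "open_investigation",
    Severity.INFORMATIONAL: "log_for_review",
}


def classify(event: dict) -> Severity:
    """Map an agent event to an alert tier using illustrative rules."""
    if event.get("policy_violation") or event.get("touches_sensitive_data"):
        return Severity.CRITICAL
    if event.get("confidence", 1.0) < 0.5 or event.get("retries", 0) > 3:
        return Severity.ELEVATED
    return Severity.INFORMATIONAL


def respond(event: dict) -> str:
    return RESPONSES[classify(event)]
```

Keeping the response table separate from the classification rules lets the workflow owner tune thresholds without changing what each tier does.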

Another safeguard is the use of guardian agents, which act as independent monitors to detect policy violations or anomalies that the primary agent might overlook. Before granting higher levels of autonomy, aim for a policy pass rate of 99% or more and keep the escalation rate for sensitive tasks under 10%. Tools like the MIT AI Risk Repository, which catalogs over 1,600 risks, can help organizations develop comprehensive risk management strategies.

"Oversight = clear ownership + controllable autonomy + complete observability."

- The Pedowitz Group

For long-running agents, drift detection is vital. This involves monitoring for "plan drift," where an agent deviates from evidence-based protocols toward unsafe behaviors. Regular checks should compare current inputs with baseline distributions and run fairness tests across protected attributes.
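
One widely used way to compare current inputs against a baseline distribution is the Population Stability Index (PSI), which sums (actual − expected) × ln(actual / expected) over categories. The article does not prescribe a specific statistic, so PSI is a swapped-in choice here, and the sample request types are made up for illustration.

```python
import math
from collections import Counter


def population_stability_index(
    baseline: list[str],
    current: list[str],
    epsilon: float = 1e-6,
) -> float:
    """PSI over categorical values; 0.0 means the two distributions match."""
    if not baseline or not current:
        raise ValueError("both samples must be non-empty")
    base_counts, cur_counts = Counter(baseline), Counter(current)
    psi = 0.0
    for category in set(base_counts) | set(cur_counts):
        # Floor each share at epsilon so unseen categories do not cause log(0).
        expected = max(base_counts[category] / len(baseline), epsilon)
        actual = max(cur_counts[category] / len(current), epsilon)
        psi += (actual - expected) * math.log(actual / expected)
    return psi


baseline_inputs = ["refund"] * 50 + ["billing"] * 50
steady_inputs = ["refund"] * 50 + ["billing"] * 50
shifted_inputs = ["refund"] * 90 + ["billing"] * 10

steady_psi = population_stability_index(baseline_inputs, steady_inputs)
shifted_psi = population_stability_index(baseline_inputs, shifted_inputs)
```

Rules of thumb often treat PSI values somewhere around 0.1 to 0.25 as the point where a shift deserves investigation, but the right alert threshold depends on the workflow and should be set by its owner.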

While monitoring provides continuous oversight, audits delve deeper into decision-making pathways and compliance.

Auditing AI Agent Decisions and Workflows

Audits are structured reviews that reconstruct how decisions were made, ensuring agents meet ethical and regulatory standards. Unlike continuous monitoring, audits are periodic but thorough.

Action Provenance Graphs (APG) are essential for effective audits. These graphs map out the entire decision-making process, linking prompts, retrieved data, tool usage, and outcomes into a clear, traceable chain. To ensure integrity, cryptographic signatures can be added to create tamper-evident audit trails.

Audits should achieve 100% completeness, meaning every trace must include all required details - inputs, tools, sources, costs, and outcomes. During the scaling phase, conduct weekly governance reviews, transitioning to monthly reviews once the system stabilizes.

| Audit Metric | Formula / Definition | Target Benchmark |
|---|---|---|
| Policy Pass Rate | Passed checks ÷ Total attempts | ≥ 99% |
| Audit Completeness | Traces with all required fields ÷ Total traces | 100% |
| Mean Time to Halt | Time from detection to kill-switch activation | ≤ 2 minutes |
| Sensitive-Step Escalation | Escalations ÷ Total sensitive actions | ≤ 10% |
| Review Cadence Adherence | Reviews completed ÷ Reviews scheduled | ≥ 95% |

Source: Pedowitz Group Governance Playbook
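
The formulas and targets in the table translate directly into a small scorecard function. In this sketch the required trace fields follow the list given earlier (inputs, tools, sources, costs, outcomes); the function signature, key names, and the choice to treat missing data as a failed target are assumptions.

```python
from statistics import mean

REQUIRED_FIELDS = ("inputs", "tools", "sources", "costs", "outcomes")


def _ratio(numerator: int, denominator: int) -> float:
    return numerator / denominator if denominator else 0.0


def scorecard(
    traces: list[dict],
    passed_checks: int,
    total_checks: int,
    escalations: int,
    sensitive_actions: int,
    halt_seconds: list[float],
    reviews_completed: int,
    reviews_scheduled: int,
) -> dict[str, tuple[float, bool]]:
    """Return each metric as (value, meets_target) using the table's benchmarks."""
    complete = sum(all(f in trace for f in REQUIRED_FIELDS) for trace in traces)
    values = {
        "policy_pass_rate": _ratio(passed_checks, total_checks),
        "audit_completeness": _ratio(complete, len(traces)),
        # No recorded halt drills counts as failing the target.
        "mean_time_to_halt_s": mean(halt_seconds) if halt_seconds else float("inf"),
        "sensitive_step_escalation": _ratio(escalations, sensitive_actions),
        "review_cadence_adherence": _ratio(reviews_completed, reviews_scheduled),
    }
    targets = {
        "policy_pass_rate": lambda v: v >= 0.99,
        "audit_completeness": lambda v: v == 1.0,
        "mean_time_to_halt_s": lambda v: v <= 120,
        "sensitive_step_escalation": lambda v: v <= 0.10,
        "review_cadence_adherence": lambda v: v >= 0.95,
    }
    return {name: (value, targets[name](value)) for name, value in values.items()}
```

Running this weekly during scaling, then monthly once the system stabilizes, matches the review cadence suggested above.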

Kill-switch protocols are another critical component, with a target of halting operations within two minutes of detecting an issue. Regular testing confirms that these protocols work and can stop problematic actions immediately.

"Building this stack before mass deployment is the difference between autonomous productivity and autonomous externalities."

Centralized observability tools enhance transparency, enabling organizations to track agent behavior across all environments - whether production, development, or testing. Assigning unique identities to agents ensures clear accountability during audits.

To strengthen these systems, incorporate adversarial red teaming into the audit process. Simulating attacks like prompt injections or breaches can expose vulnerabilities that standard audits might miss.

"Ensuring compliance is no longer a box to tick. It is a living process that must keep pace with AI systems that learn and change."

- Agentix Labs

Conclusion

AI agents are no longer just passive tools; they're becoming autonomous decision-makers in many enterprises. But with this shift comes a pressing need for strong ethical frameworks to guide their deployment. Without these safeguards, businesses could face expensive mistakes - like the Q4 2025 incident where an unsupervised orchestration agent sent layoff recommendations to 47 department heads over a single weekend. This example highlights how critical real-time ethical oversight is to prevent such missteps.

To navigate this new era, companies must set clear boundaries for AI-driven decisions, ensuring that critical actions remain firmly under human control. Saket Poswal puts it best:

"The question isn't: 'How much can we automate?' The question is: 'Where must humans retain decision-making power, and how do we enforce it?'"

This involves creating "never lists" for actions AI systems should never take, inserting mandatory checkpoints for long-running processes, and designing systems where autonomous decisions can be reversed if needed. Agents that operate within such boundaries can still deliver substantial value: one FinTech firm used a fraud detection agent to halt an attack, saving $2.3 million.

Ethical AI is also becoming a strategic advantage. A striking 82% of executives plan to adopt AI agents within the next three years. With regulatory pressure also mounting, companies that focus now on transparency, accountability, and fairness will likely grow faster and more effectively than those that treat ethics as an afterthought.

To ensure ethical AI deployment, organizations should adopt proven frameworks like NIST's AI Risk Management Framework. Building cross-functional oversight teams with clear RACI models, implementing runtime governance with cryptographic audit trails, and deploying guardian agents to monitor primary systems are all essential steps. Confidence thresholds that trigger human intervention and kill-switch protocols with defined time-to-halt SLAs add further layers of safety. As Jakub Szarmach aptly states:

"Building this stack before mass deployment is the difference between autonomous productivity and autonomous externalities".

FAQs

What should an AI agent never be allowed to do?

AI systems should never make decisions that have the potential to cause irreversible harm without human oversight. This applies to actions like initiating termination processes or creating legal obligations. Maintaining human involvement in these critical decisions is key to ensuring ethical and responsible use of AI in enterprise environments.

How can we prove why an AI agent made a decision?

To understand why an AI agent made a particular decision, it's crucial to maintain a detailed audit trail. This involves logging the inputs, the factors influencing the decision, and the reasoning processes behind it. Using explainability mechanisms helps document these steps while offering explanations that are easy for humans to interpret.

Techniques like correlation IDs, immutable storage, and reason codes play a key role in ensuring traceability and meeting compliance requirements. Additionally, emerging tools like Policy Cards take transparency a step further by making ethical and operational constraints both machine-readable and verifiable. These approaches help build trust and accountability in AI systems.

What’s the fastest way to stop a misbehaving AI agent?

The fastest way to shut down a misbehaving AI agent is by using a kill switch - a global hard stop that instantly ceases all its activities. Other measures, like soft pauses, scoped blocks, or isolating the AI in a sandbox with rollback options, can also help maintain control and avoid unwanted outcomes.
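
A global hard stop can be sketched with a shared flag that the agent loop checks before every step. This example assumes a cooperative design built on `threading.Event` from Python's standard library. It stops the agent between steps rather than interrupting a step already in flight, so steps should be kept short or made cancellable. The class and function names are illustrative.

```python
import threading
from typing import Callable, Optional


class KillSwitch:
    """Thread-safe stop flag shared by the agent loop and its supervisors."""

    def __init__(self) -> None:
        self._stop = threading.Event()
        self.reason: Optional[str] = None

    def trigger(self, reason: str) -> None:
        self.reason = reason
        self._stop.set()

    @property
    def engaged(self) -> bool:
        return self._stop.is_set()


def run_agent(steps: list[Callable[[], str]], kill_switch: KillSwitch) -> list[str]:
    """Run steps in order, checking the kill switch before each one."""
    completed: list[str] = []
    for step in steps:
        if kill_switch.engaged:
            break
        completed.append(step())
    return completed


switch = KillSwitch()


def risky_step() -> str:
    # A guardian agent or human operator would normally call trigger().
    switch.trigger("anomaly detected")
    return "step-2"


results = run_agent([lambda: "step-1", risky_step, lambda: "step-3"], switch)
```

Here the third step never runs because the switch was engaged during the second. Measuring the time between `trigger` and the loop exiting is one way to test a time-to-halt target.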