How to Prepare AI Systems for Audits

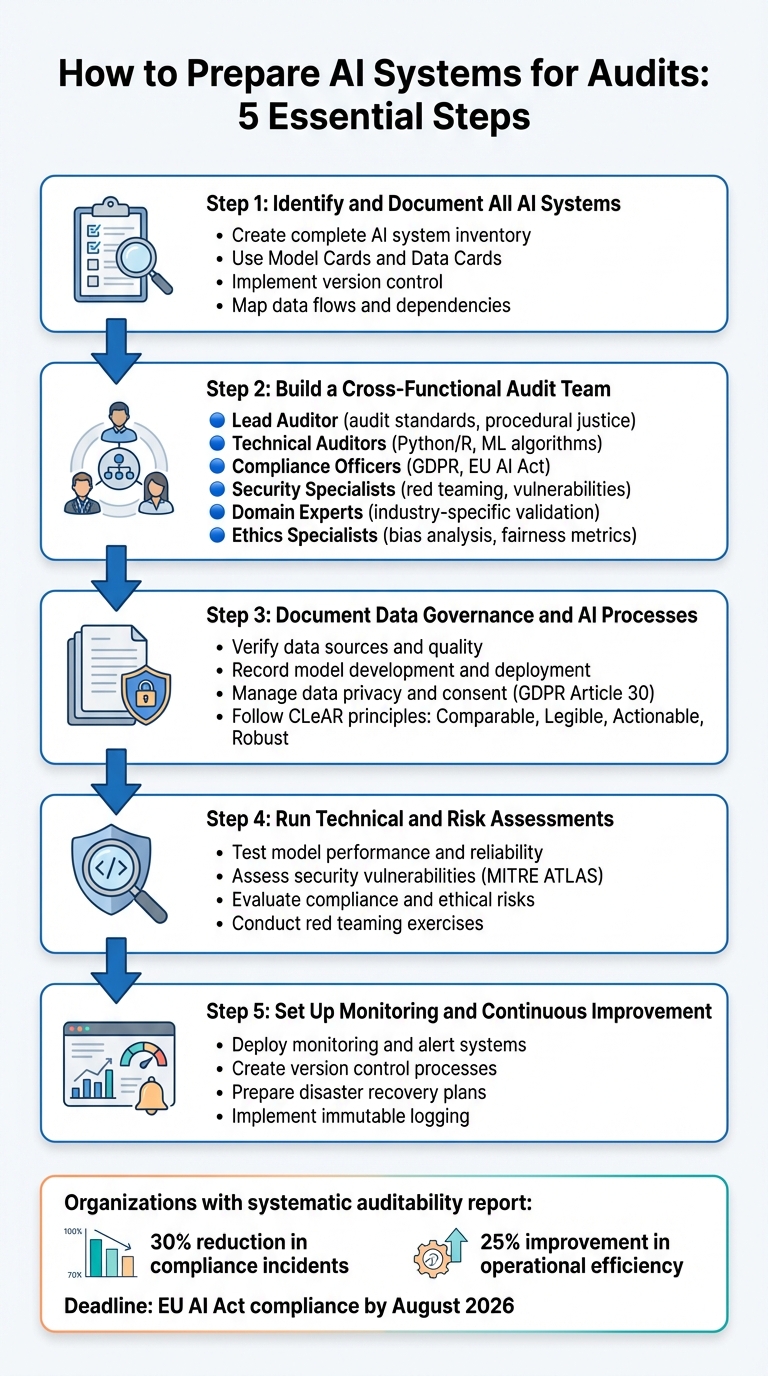

Practical five-step guide to inventory, document, test, and monitor AI systems to meet audits and regulatory requirements.

Getting AI systems ready for audits is no longer optional - it’s a requirement. With regulations like the EU AI Act introducing stricter rules (and deadlines like August 2026), organizations must ensure their AI systems are safe, compliant, and transparent. This isn't just about avoiding fines or meeting legal standards - it's about proving your AI systems can be trusted.

Here’s what you need to do:

- List all AI systems: Create a detailed inventory of every AI tool in your organization. Include their purpose, how they are used, and any risks involved.

- Build a specialized audit team: Include technical experts, compliance officers, and industry professionals to review systems from all angles.

- Document everything: Track data sources, model details, decision-making processes, and compliance measures. Use tools like Model Cards and Data Cards for clarity.

- Test performance and risks: Evaluate model accuracy, identify biases, and run security checks to ensure reliability.

- Monitor continuously: Set up systems to track changes, detect issues, and maintain audit readiness over time.

With over 35 countries drafting new AI laws and only 27% of organizations reviewing all AI-generated content, the urgency to act is clear. Following these steps will help you navigate audits, reduce risks, and build confidence in your AI systems.

5-Step Process to Prepare AI Systems for Audits


Step 1: Identify and Document All AI Systems

Before starting an audit, you need a full inventory of every AI system in your organization. This includes everything from customer-facing chatbots to internal risk assessment tools. The goal is to give auditors a clear and detailed overview of your AI landscape.

Start by defining the scope and purpose of your audit. Determine which AI applications need evaluation and the specific risks or impacts you’re trying to address. Assemble an internal audit team with representatives from IT, legal, risk management, and business units to ensure no AI use case slips through the cracks. As Kashyap Kompella, CEO of RPA2AI Research, puts it:

"AI audits are an essential part of the AI strategy for any organization, whether an end-user business or an AI vendor".

Your documentation should be more than just a list of systems. Use Model Cards to record key details like training data, decision logic, human oversight processes, and redress mechanisms. For datasets, Data Cards can help track origins, quality, and potential biases. These tools provide auditors with the transparency they need to understand how your systems operate.

Version control is another critical piece. Make sure you’re tracking model artifacts, prompts, outputs, and pipeline code in detail. For vendor-supplied systems, request documentation on model architecture, training data, and known limitations. Don’t assume third-party systems are audit-ready.
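One way to make this version-control advice concrete is a manifest that fingerprints each tracked artifact. The sketch below is a minimal illustration that assumes artifacts are plain files on disk. It records a SHA-256 digest per file so an auditor can later confirm that the model weights, prompts, and pipeline code under review are the ones that were actually deployed. The function names and the manifest layout are hypothetical.

```python
import hashlib
import json
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 65536) -> str:
    """Hash a file in chunks so large model weights never need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(paths: list[Path]) -> dict[str, str]:
    """Map each artifact path (as a string) to its SHA-256 digest."""
    return {str(p): sha256_of(p) for p in sorted(paths)}


def verify_manifest(manifest: dict[str, str]) -> list[str]:
    """Return artifacts that are missing or whose contents no longer match the manifest."""
    return [p for p, expected in manifest.items()
            if not Path(p).exists() or sha256_of(Path(p)) != expected]


if __name__ == "__main__":
    import tempfile
    with tempfile.TemporaryDirectory() as tmp:
        prompt = Path(tmp) / "system_prompt.txt"  # hypothetical tracked artifact
        prompt.write_text("You are a claims-triage assistant.")
        manifest = build_manifest([prompt])
        print(json.dumps(manifest, indent=2))
        prompt.write_text("You are a helpful assistant.")  # simulate an unrecorded change
        print("changed:", verify_manifest(manifest))
```

Committing the manifest alongside each release gives auditors a cheap integrity check without needing access to your full MLOps tooling.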

Regulations make this process unavoidable. For example, New York City Local Law 144 requires independent bias audits of automated employment decision tools used in hiring and promotion decisions, while the EU AI Act requires conformity assessments for high-risk AI systems. Standardizing your documentation using frameworks like the NIST AI Risk Management Framework or ISO/IEC 42001 helps your inventory align with regulatory expectations.

To break it down further, your process should include building an inventory, mapping data flows, and aligning with specific regulations.

Build a Complete AI System Inventory

Your inventory should answer key questions: What AI systems do you have? What are they used for? Who interacts with them? Document each system's purpose, deployment history, and user base. Pay special attention to features that might act as proxies for sensitive attributes like race or gender.

For generative AI systems, include details about how you detect and address hallucinations or factually incorrect outputs. Input from IT, legal, risk, and business teams is crucial for creating a thorough inventory that lays the foundation for effective risk assessments.
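Below is a minimal sketch of such an inventory. It assumes a simple in-code register rather than any particular governance tool. The field names and the `RiskTier` levels are hypothetical, so map them to whatever classification your regulators use. The `audit_priorities` helper flags the concerns raised above: high-risk systems, generative systems with no documented hallucination controls, and features that may act as proxies for sensitive attributes.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import Optional


class RiskTier(IntEnum):
    # Hypothetical ordering: a higher value means more audit scrutiny.
    MINIMAL = 1
    LIMITED = 2
    HIGH = 3


@dataclass
class AISystemRecord:
    name: str
    purpose: str
    owner: str
    users: list[str]
    risk_tier: RiskTier
    vendor: Optional[str] = None         # None for systems built in-house
    generative: bool = False
    hallucination_controls: str = ""     # how incorrect outputs are detected and handled
    proxy_features: list[str] = field(default_factory=list)  # e.g., postcode as a proxy for ethnicity


def audit_priorities(inventory: list[AISystemRecord]) -> list[str]:
    """Return one line per system needing attention, highest risk tier first."""
    flagged = []
    for rec in sorted(inventory, key=lambda r: r.risk_tier, reverse=True):
        reasons = []
        if rec.risk_tier == RiskTier.HIGH:
            reasons.append("high risk")
        if rec.generative and not rec.hallucination_controls:
            reasons.append("no documented hallucination controls")
        if rec.proxy_features:
            reasons.append("possible proxy features: " + ", ".join(rec.proxy_features))
        if reasons:
            flagged.append(f"{rec.name}: {'; '.join(reasons)}")
    return flagged


if __name__ == "__main__":
    inventory = [
        AISystemRecord("faq-bot", "answer FAQs", "support", ["customers"], RiskTier.LIMITED, generative=True),
        AISystemRecord("cv-screener", "rank applicants", "hr", ["recruiters"], RiskTier.HIGH,
                       vendor="ExampleVendor", proxy_features=["postcode"]),
    ]
    for line in audit_priorities(inventory):
        print(line)
```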

Map Data Flows and Dependencies

Once you’ve listed your systems, map out how data flows between them. AI systems rarely operate in isolation. You need to track data inputs, outputs, system connections, and third-party dependencies to give auditors a full picture. Create system maps that show how each AI model interacts with broader technical and organizational systems. Include details about external vendors, cloud services, APIs, and software updates.

If multiple models interact, look for risks that might emerge from these connections. For generative AI, document the retrieval sources used for Retrieval-Augmented Generation (RAG), the data used for fine-tuning, and each prompt version, since these inputs can heavily influence outputs.
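A system map can start as a simple directed graph. The sketch below assumes dependencies are recorded as "X consumes data or outputs from Y" edges, and every node name in it is hypothetical. It walks the graph to list everything a model depends on, directly or indirectly, and the reverse: every system affected when an upstream dataset or vendor API changes. Auditors need to trace both sets.

```python
from collections import deque

# Hypothetical system map: each key consumes data or outputs from the listed nodes.
DEPENDS_ON: dict[str, list[str]] = {
    "credit-scoring-model": ["feature-store", "fraud-model"],
    "fraud-model": ["transactions-db", "vendor-geoip-api"],
    "feature-store": ["transactions-db", "crm-export"],
    "support-chatbot": ["llm-vendor-api", "rag-index"],
    "rag-index": ["policy-docs"],
}


def upstream(node: str, graph: dict[str, list[str]]) -> set[str]:
    """Breadth-first walk returning every direct and indirect dependency of `node`."""
    seen: set[str] = set()
    queue = deque(graph.get(node, []))
    while queue:
        dep = queue.popleft()
        if dep in seen:  # also protects against cycles between models
            continue
        seen.add(dep)
        queue.extend(graph.get(dep, []))
    return seen


def downstream(node: str, graph: dict[str, list[str]]) -> set[str]:
    """Return every mapped system that would be affected if `node` changed."""
    return {n for n in graph if node in upstream(n, graph)}


if __name__ == "__main__":
    print(sorted(upstream("credit-scoring-model", DEPENDS_ON)))
    print(sorted(downstream("transactions-db", DEPENDS_ON)))
```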

For black-box models, where internal logic isn't accessible, use tools like LIME or SHAP to estimate how much each input feature contributes to individual predictions. This reveals which inputs the model relies on, even if you can't see inside it.
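LIME and SHAP are dedicated libraries with their own APIs. To show the same model-agnostic idea without dependencies, the sketch below uses a simpler and different technique, permutation importance: shuffle one feature column at a time and measure how much accuracy drops. It needs only a `predict` function, so it works on a black box. The toy model and data are hypothetical.

```python
import random
from typing import Callable, Sequence

Row = Sequence[float]


def accuracy(predict: Callable[[Row], int], X: list[Row], y: list[int]) -> float:
    return sum(predict(x) == t for x, t in zip(X, y)) / len(y)


def permutation_importance(predict, X, y, n_repeats: int = 10, seed: int = 0) -> list[float]:
    """Mean accuracy drop when each feature column is shuffled (higher = more relied on)."""
    rng = random.Random(seed)
    baseline = accuracy(predict, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild each row with only feature j replaced by its shuffled value.
            X_perm = [list(row[:j]) + [v] + list(row[j + 1:]) for row, v in zip(X, column)]
            drops.append(baseline - accuracy(predict, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


if __name__ == "__main__":
    # Hypothetical black box: approves when feature 0 (income) exceeds 50; ignores feature 1.
    black_box = lambda x: int(x[0] > 50)
    gen = random.Random(1)
    X = [[gen.uniform(0, 100), gen.uniform(0, 100)] for _ in range(200)]
    y = [black_box(x) for x in X]
    print(permutation_importance(black_box, X, y))  # feature 1 scores 0.0 because it is never used
```

A feature the model never uses scores exactly zero. If a supposedly irrelevant field, such as a postcode, scores high, it may be acting as a proxy and deserves closer review.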

Match Audit Scope to Regulatory Requirements

Finally, align your documentation with relevant regulations to tailor your audit scope.

Different regulations have different requirements. For example, GDPR and HIPAA each impose specific documentation obligations, while the voluntary NIST AI Risk Management Framework recommends its own documentation practices. The NIST framework, developed with input from over 240 organizations, is a widely used resource for managing AI risks across the lifecycle.

| Documentation Type | Focus Area | Key Elements |
|---|---|---|
| Model Card | Technical Details | Architecture, training data, performance metrics, limitations |
| Data Card | Data Governance | Dataset origins, quality, privacy measures, biases |
| System Map | Integration Insights | Software interactions, organizational embedding, dependencies |
| Audit Card | Compliance Tracking | Regulatory requirements, risk assessments, past audit findings |
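The documentation table can double as a checklist. The sketch below assumes each system's documentation is held as a dictionary that maps an artifact type to the set of elements it covers. The keys simply mirror the table and are not a formal standard. The function reports what an auditor would find missing.

```python
# Required elements per artifact type, mirroring the documentation table.
REQUIRED = {
    "model_card": {"architecture", "training_data", "performance_metrics", "limitations"},
    "data_card": {"dataset_origins", "quality", "privacy_measures", "biases"},
    "system_map": {"software_interactions", "organizational_embedding", "dependencies"},
    "audit_card": {"regulatory_requirements", "risk_assessments", "past_audit_findings"},
}


def documentation_gaps(docs: dict[str, set[str]]) -> dict[str, list[str]]:
    """Return, per artifact type, the required elements that are absent (sorted)."""
    gaps = {}
    for artifact, required in REQUIRED.items():
        missing = required - docs.get(artifact, set())  # a missing artifact lacks everything
        if missing:
            gaps[artifact] = sorted(missing)
    return gaps


if __name__ == "__main__":
    docs = {
        "model_card": {"architecture", "training_data", "performance_metrics", "limitations"},
        "data_card": {"dataset_origins", "quality"},
    }
    for artifact, missing in documentation_gaps(docs).items():
        print(f"{artifact}: missing {', '.join(missing)}")
```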

To implement the NIST AI RMF, focus on its four key functions: Govern, Map, Measure, and Manage. Create use-case profiles tailored to your organization’s specific risks, resources, and goals. As the NIST framework explains:

"The AI RMF is intended for voluntary use and to improve the ability to incorporate trustworthiness considerations into the design, development, use, and evaluation of AI products, services, and systems".

Go beyond basic compliance checks. Conduct both compliance and performance audits to evaluate whether your AI systems are operating efficiently, effectively, and transparently. Make sure your auditors have specialized skills - certifications like ISACA’s AAIA indicate expertise in assessing machine learning systems. If your AI relies on cloud-based solutions, include the underlying IT infrastructure and cloud services in your audit scope.

Step 2: Build a Cross-Functional Audit Team

Once you've documented your processes, the next step is assembling a team that can analyze your AI systems from multiple perspectives - technical, legal, security, and societal. A good audit team doesn't just evaluate systems; it also operates independently of the development process. As Deb Raji from the Health AI Partnership explains:

"Even when done by internal teams, it's important for such teams to operate completely separately from the engineering team that built the AI tools to provide independent oversight on the built solution".

Think of this team as similar to an internal financial audit department. They handle in-house reviews while preparing for external scrutiny. Between 2017 and 2025, several countries, including Brazil, Finland, and the UK, collaborated to create an AI auditing framework. Their efforts highlighted the importance of combining technical, compliance, and IT expertise with specialized training in machine learning and cloud systems.

Blending technical skills with organizational insight ensures that your team can uncover risks that a purely technical review might overlook.

Define Team Roles and Responsibilities

Start by outlining the essential roles for your audit. Each role brings a unique perspective that's critical for a thorough evaluation.

- Lead Auditor: Oversees the entire process, ensuring the methodology is followed and the audit maintains its integrity. This role requires knowledge of applicable audit and quality standards (for example, ISO 13485, the quality management standard for medical devices, in healthcare audits) and expertise in procedural justice.

- Technical Auditors: Focus on performance testing, adversarial audits, and code reviews. They need hands-on experience with Python or R, a deep understanding of machine learning algorithms, and familiarity with model weights.

- Compliance Officers: Ensure alignment with regulations such as GDPR, the EU AI Act, and your organization's AI principles.

- Security Specialists: Identify vulnerabilities like data poisoning and unauthorized access, often through red teaming exercises.

- Domain Experts: Provide industry-specific insights to validate outputs. For instance, healthcare audits might include clinicians, while HR professionals might be involved in hiring system reviews.

- Ethics Specialists: Address social harms, identify bias, and select fairness metrics.

- Data Scientists: Offer independent technical validation and perform bias testing, especially if they're not part of the development team.

| Role | Primary Responsibility | Required Expertise |
|---|---|---|
| Lead Auditor | Audit integrity and methodology | Audit and quality standards (e.g., ISO 13485), procedural justice |
| Technical Auditor | Performance testing and audits | Coding (Python/R), ML algorithms, model weights |
| Compliance Officer | Regulatory alignment | GDPR, EU AI Act, organizational AI principles |
| Security Specialist | Vulnerability assessment | Red teaming, cryptography, cloud security |
| Domain Expert | Contextual validation | Industry-specific knowledge (e.g., medical, finance) |
| Ethics Specialist | Bias and social harm analysis | Fairness metrics, socio-technical harm analysis |

These roles ensure your audit covers every angle of the AI lifecycle. Team expertise should span three levels: a baseline understanding of AI concepts, in-depth skills in coding and model implementation, and infrastructure knowledge of cloud systems. Clearly document each team member's responsibilities throughout the AI lifecycle, from development to decommissioning.

Your team must have full access to personnel, records, and systems for a thorough evaluation. This includes running AI products on demand, accessing training data and code, and interviewing key stakeholders like developers or end-users. Confidentiality agreements are crucial, especially when dealing with third-party vendor products.

At NAITIVE AI Consulting Agency, we recommend assembling a diverse team with expertise spanning technical, regulatory, and domain-specific areas. If you need help tailoring your audit process, our experts are ready to assist.

Set Up Communication and Reporting Processes

Once roles are defined, establish clear reporting channels to ensure audit findings translate into action. The audit team should have direct access to leadership to ensure recommendations are addressed promptly.

Structure your reporting into two categories:

- Internal process reports: Detailed technical findings and recommendations for development and operations teams.

- Public accountability reports: Transparent summaries that demonstrate your organization's commitment to responsible AI.

Standardize your documentation using tools like Model Cards, System Cards, and Data Cards. These provide a single source of truth that bridges gaps between technical and non-technical stakeholders. Automated pipelines integrated into your CI/CD framework can continuously monitor model performance, data drift, and compliance issues, which can reduce manual review time by as much as 70%.
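As an illustration of such a pipeline step, here is a minimal CI gate. It assumes the evaluation job writes its metrics to a JSON file. The metric names and thresholds are hypothetical placeholders that you would set per system. A non-zero exit code fails the build, which is how most CI systems block a deployment.

```python
import json
import sys

# Hypothetical thresholds: (comparison, limit). "min" = metric must be >= limit, "max" = <= limit.
THRESHOLDS = {
    "accuracy": ("min", 0.90),
    "demographic_parity_diff": ("max", 0.10),
    "psi_drift": ("max", 0.20),
}


def check_metrics(metrics: dict[str, float]) -> list[str]:
    """Return human-readable failures; a metric missing from the report is itself a failure."""
    failures = []
    for name, (kind, limit) in THRESHOLDS.items():
        if name not in metrics:
            failures.append(f"{name}: missing from evaluation report")
            continue
        value = metrics[name]
        if (kind == "min" and value < limit) or (kind == "max" and value > limit):
            failures.append(f"{name}={value} violates {kind} {limit}")
    return failures


def main(path: str) -> int:
    with open(path) as fh:
        failures = check_metrics(json.load(fh))
    for failure in failures:
        print("AUDIT GATE FAILED:", failure, file=sys.stderr)
    return 1 if failures else 0


if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv[1]))  # e.g., python audit_gate.py eval_metrics.json
```

Treating a missing metric as a failure matters for audits: it stops a pipeline change from silently dropping a check.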

Leverage real-time monitoring systems (e.g., Vanta, MetricStream) for immediate anomaly alerts. You can also implement an "LLM-as-a-judge" approach, in which large language models evaluate outputs from other AI systems; this can improve auditor efficiency by as much as 40%.

Set up trigger-based audits alongside regular reviews. Define events that require immediate audits, such as software updates, hardware changes, or unexpected performance issues. Maintain robust version control for all assets - code, data, models, and outputs - to ensure reproducibility.

Step 3: Document Data Governance and AI Processes

Creating thorough documentation is essential for demonstrating audit readiness and meeting regulatory standards. Once your audit team is established, the next step is to build a clear record showing that your AI systems comply with governance requirements. Be sure to document every phase of the AI lifecycle, as delays in this process can lead to higher remediation costs.

Follow the CLeAR principles when preparing documentation: ensure it is Comparable (uses standardized formats), Legible (easy to understand), Actionable (provides practical value), and Robust (kept up to date). The goal is to create evidence that demonstrates accountability:

"Documentation should be mandatory in the creation, usage, and sale of datasets, models, and AI systems".

In addition to technical details, include the reasoning behind key decisions - such as the original motivations, involvement of impacted communities, and ethical considerations. Begin by verifying your data sources and assessing their quality before moving on to model development.

Verify Data Sources and Quality

Start by recording the origins and quality of your data. Document why the dataset was created, who funded it, how the data was collected, and any filtering or cleaning processes used. Verify collection methods, labeling accuracy, and potential biases. For each dataset, also ask: What are the demographics of the annotators? What labeling guidelines were followed?

If the data involves personal information, make sure to specify whether consent was obtained, any restrictions on data use, and the legal basis for processing the data. Documenting data lineage - including its source, version, and licensing or consent status - provides a clear trail regulators can follow. Integrate these tasks into your technical workflows and MLOps processes to ensure consistency.

Once you’ve confirmed the data’s validity, move on to documenting how your models are built and deployed.

Document Model Development and Deployment

Keep detailed records of your model’s architecture, key parameters, and deployment processes. Auditors should be able to understand the reasoning behind your model choices, architecture details, version history, and important configurations. Document the training and fine-tuning processes, including benchmarks used for evaluation.

For autonomous systems, maintain logs that capture critical details like reasoning steps, tool selection logic, and input/output flows. This allows for forensic reconstruction of decisions. Use version control to ensure reproducibility. Given the complexity of AI systems, thorough documentation is crucial to address issues like data bias and transparency.

Establish Architecture Decision Records (ADRs) to document risk analyses and the rationale for decisions, such as data retention policies and auditing tiers. Additionally, record investments in specialized hardware (like GPUs) used for training deep-learning models, as this information may be required for environmental impact audits.

Manage Data Privacy and Consent Practices

Privacy documentation must align with audit requirements and regulations like GDPR. Maintain a Record of Processing Activities (ROPA) that outlines the purposes of data processing, the categories of individuals and data involved, and the recipients of that data, as required under GDPR Article 30. Clearly document the legal basis for processing - whether it’s consent, contractual necessity, or legitimate interests.

Conduct Data Protection Impact Assessments (DPIAs) to evaluate risks associated with profiling or automated decision-making. For high-risk AI systems under the EU AI Act, retain technical documentation for ten years after the system has been placed on the market or put into service.

Set up processes to handle data subject requests, including access requests (DSARs) and requests for rectification, erasure, or objections to automated processing. Record the logic behind automated decisions, as well as their significance and envisaged consequences for individuals, to meet transparency requirements under GDPR Articles 13 and 15. The Information Commissioner's Office (ICO) emphasizes:

"It is essential to document each stage of the process behind the design and deployment of an AI decision-support system in order to provide a full explanation for how you made a decision".

Automate data retention schedules based on data classes, and establish systems to delete or anonymize data once it expires. Provide clear, easy-to-understand privacy notices at all AI interaction points to explain how data is collected and used. At NAITIVE AI Consulting Agency, we help organizations create documentation frameworks that meet both technical and legal standards, ensuring your AI systems are ready for audits from the start.
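Class-based retention can be sketched in a few lines. This minimal version assumes each stored record carries a data class and a creation date. The classes and periods are hypothetical placeholders; real periods come from your legal review. Any record whose class has no retention rule raises an error, so nothing is silently kept forever.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical retention periods per data class; set these from your legal basis and obligations.
RETENTION = {
    "chat_transcripts": timedelta(days=90),
    "inference_logs": timedelta(days=180),
    "training_snapshots": timedelta(days=730),
}


@dataclass
class StoredRecord:
    record_id: str
    data_class: str
    created: date


def expired(records: list[StoredRecord], today: date) -> list[str]:
    """IDs of records past their retention period, to be deleted or anonymized."""
    out = []
    for r in records:
        if r.data_class not in RETENTION:
            raise ValueError(f"no retention rule for data class {r.data_class!r}")
        if today - r.created > RETENTION[r.data_class]:
            out.append(r.record_id)
    return out


if __name__ == "__main__":
    records = [
        StoredRecord("t-001", "chat_transcripts", date(2025, 1, 1)),
        StoredRecord("l-042", "inference_logs", date(2025, 1, 1)),
    ]
    print(expired(records, date(2025, 6, 1)))
```

A scheduled job would pass the returned IDs to your deletion or anonymization routine and log each action, which produces its own audit evidence.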

Step 4: Run Technical and Risk Assessments

After documenting your AI systems, it’s time to test them thoroughly. These tests aim to uncover performance gaps, security flaws, and compliance issues, giving you a chance to address problems before auditors step in. A Microsoft survey of 28 businesses revealed that 89% lack the right tools to secure their machine learning systems, highlighting the importance of these assessments.

Testing should align with the system’s risk level and be repeated whenever the system or its environment changes. High-risk systems, such as those trained on sensitive data or used in critical business functions, demand more rigorous evaluations compared to low-risk experimental models. Below, we’ll break down methods for assessing performance, security, and compliance to ensure your systems meet audit standards.

Test Model Performance and Reliability

Evaluate how reliably your model performs and how well it handles unusual inputs. Techniques like k-fold cross-validation help estimate how well your model generalizes to unseen data.
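For readers new to the technique, the sketch below shows what k-fold cross-validation does under the hood. It splits the data into k folds, trains on k-1 of them, scores on the held-out fold, and reports the spread across folds. Libraries such as scikit-learn provide this ready-made. The plain-Python version and the toy "majority class" model here are only for illustration.

```python
import random
from statistics import mean, stdev
from typing import Callable


def k_fold_indices(n: int, k: int, seed: int = 0) -> list[list[int]]:
    """Shuffle 0..n-1 and deal them into k folds of near-equal size."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def cross_validate(X, y, fit: Callable, k: int = 5) -> list[float]:
    """`fit(X_train, y_train)` must return a predict(x) function; returns per-fold accuracy."""
    scores = []
    for fold in k_fold_indices(len(X), k):
        held_out = set(fold)
        train = [i for i in range(len(X)) if i not in held_out]
        predict = fit([X[i] for i in train], [y[i] for i in train])
        correct = sum(predict(X[i]) == y[i] for i in fold)
        scores.append(correct / len(fold))
    return scores


def fit_majority(X_train, y_train):
    # Toy baseline model: always predict the most common training label.
    label = max(set(y_train), key=y_train.count)
    return lambda x: label


if __name__ == "__main__":
    X = list(range(100))
    y = [1 if i % 4 else 0 for i in X]  # 75% positive labels
    scores = cross_validate(X, y, fit_majority, k=5)
    print(f"accuracy {mean(scores):.2f} ± {stdev(scores):.2f} across folds")
```

Reporting the spread across folds, not just the mean, gives auditors evidence of how stable performance is.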

For generative AI systems, such as large language models, track metrics like hallucination rates, factual accuracy, safety, and robustness to varied prompts. Where manual review isn't feasible, consider an "LLM-as-a-judge" approach, in which a secondary language model scores the outputs of your primary model. Use counterfactual testing to identify fairness issues: change only a sensitive variable and check whether the output changes.
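Counterfactual testing can be as simple as flipping one sensitive attribute and counting how often the decision changes. This minimal sketch assumes the model accepts a dictionary of features. The `gender` field and the deliberately biased toy model are hypothetical.

```python
from typing import Callable, Iterable

Features = dict[str, object]


def counterfactual_flip_rate(
    predict: Callable[[Features], object],
    cases: Iterable[Features],
    attribute: str,
    swap: dict[object, object],
) -> tuple[float, list[Features]]:
    """Swap `attribute` per `swap`; return (share of changed decisions, the changed cases)."""
    changed, total = [], 0
    for case in cases:
        if case.get(attribute) not in swap:
            continue  # no counterfactual defined for this value
        total += 1
        twin = {**case, attribute: swap[case[attribute]]}  # identical except for one attribute
        if predict(case) != predict(twin):
            changed.append(case)
    return (len(changed) / total if total else 0.0), changed


if __name__ == "__main__":
    # Hypothetical biased model: a lower income bar applies only to one gender.
    model = lambda f: f["income"] > 60 or (f["income"] > 40 and f["gender"] == "m")
    cases = [{"income": 50, "gender": "f"}, {"income": 70, "gender": "f"}]
    rate, changed = counterfactual_flip_rate(model, cases, "gender", {"f": "m", "m": "f"})
    print(rate, changed)
```

Any non-zero flip rate means the sensitive attribute directly changes outcomes. The changed cases are the concrete examples to show an auditor.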

Keep detailed documentation of data versions, parameters, and environmental conditions to ensure reproducibility. Create model cards summarizing architecture, training data, limitations, and performance metrics in a format that auditors can easily understand.

Assess Security Vulnerabilities

Securing your AI systems against attacks is just as important as ensuring performance. Start by cataloging all AI assets, including internal models, third-party services, and any unauthorized "shadow AI" programs. Use frameworks like MITRE ATLAS to map threats specific to AI, such as model poisoning, adversarial examples, prompt injection, and model extraction.

Incorporate tools like Static Application Security Testing (SAST), Dynamic Application Security Testing (DAST), and Software Composition Analysis (SCA) to scan your code and third-party libraries for vulnerabilities. Conduct red teaming exercises to simulate real-world attacks, such as malicious prompt injections, fake account manipulations ("sockpuppeting"), and attempts to extract model details through API queries.

| Attack Type | Likelihood | Impact | Exploitability |
|---|---|---|---|
| Extraction | High | Low | High |
| Evasion | High | Medium | High |
| Inference | Medium | Medium | Medium |
| Inversion | Medium | High | Medium |
| Poisoning | Low | High | Low |
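One hypothetical way to turn the qualitative ratings in the attack table into a priority order is to map High/Medium/Low to 3/2/1 and multiply the three ratings. The scale and the multiplicative score are assumptions for illustration, not part of MITRE ATLAS or any standard. The ratings themselves are copied from the table.

```python
LEVEL = {"Low": 1, "Medium": 2, "High": 3}

# (likelihood, impact, exploitability) as rated in the attack table.
ATTACKS = {
    "Extraction": ("High", "Low", "High"),
    "Evasion": ("High", "Medium", "High"),
    "Inference": ("Medium", "Medium", "Medium"),
    "Inversion": ("Medium", "High", "Medium"),
    "Poisoning": ("Low", "High", "Low"),
}


def risk_scores(attacks: dict[str, tuple[str, str, str]]) -> list[tuple[str, int]]:
    """Score = likelihood x impact x exploitability (range 1..27), highest first."""
    scored = [
        (name, LEVEL[likelihood] * LEVEL[impact] * LEVEL[exploit])
        for name, (likelihood, impact, exploit) in attacks.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    for name, score in risk_scores(ATTACKS):
        print(f"{name:<11} {score}")
```

Multiplying lets a single Low rating pull a score down sharply, which is why Poisoning ranks last despite its High impact. Teams that weight impact more heavily may prefer an additive score or an impact-first ordering.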

Secure your API endpoints with tools like secrets managers, MFA, and TLS 1.2+ encryption. Implement rate limiting to prevent denial-of-service attacks and detect unusual query patterns that might indicate extraction attempts. Tim Blair, Sr. Manager at Vanta, emphasizes:

"Typically, threat modeling and risk analysis of AI systems is the most time-consuming part of an AI security assessment".

Clearly define responsibilities within your team to keep the assessment process efficient and focused.
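The rate limiting and query-pattern monitoring recommended for API endpoints can be sketched as a per-client sliding window. This minimal version assumes requests are identified by an API key, and its limits are hypothetical. Keys that repeatedly hit the limit are flagged for review, because sustained high-volume querying is one signal of a model-extraction attempt. Real deployments usually enforce this at an API gateway rather than in application code.

```python
import time
from collections import defaultdict, deque
from typing import Callable


class SlidingWindowLimiter:
    def __init__(self, max_requests: int, window_s: float, flag_after: int = 3,
                 clock: Callable[[], float] = time.monotonic):
        self.max_requests = max_requests
        self.window_s = window_s
        self.flag_after = flag_after  # rejections before a key is flagged for review
        self.clock = clock            # injectable so the limiter can be tested deterministically
        self.history: dict[str, deque] = defaultdict(deque)
        self.rejections: dict[str, int] = defaultdict(int)
        self.flagged: set[str] = set()

    def allow(self, api_key: str) -> bool:
        now = self.clock()
        q = self.history[api_key]
        while q and now - q[0] >= self.window_s:  # drop timestamps outside the window
            q.popleft()
        if len(q) < self.max_requests:
            q.append(now)
            return True
        self.rejections[api_key] += 1
        if self.rejections[api_key] >= self.flag_after:
            self.flagged.add(api_key)  # candidate extraction attempt: route to security review
        return False
```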

Evaluate Compliance and Ethical Risks

Once your AI processes are documented, assess compliance and fairness to meet regulatory requirements. Use metrics like demographic parity and equal opportunity to evaluate bias and fairness. Be cautious of target leakage, where training data includes information that won't be available at prediction time; it inflates performance metrics during development and leads to failures in production.
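Both fairness metrics reduce to rate comparisons between groups. The sketch below assumes binary predictions and labels and a single group attribute. Demographic parity compares positive-prediction rates. Equal opportunity compares true-positive rates among people whose true label is positive. The toy data is hypothetical, and deciding what gap is acceptable is a policy question, not something the code settles.

```python
def _rate(values: list[int]) -> float:
    return sum(values) / len(values) if values else float("nan")


def demographic_parity_diff(y_pred, groups, a, b) -> float:
    """P(pred=1 | group a) - P(pred=1 | group b)."""
    preds_a = [p for p, g in zip(y_pred, groups) if g == a]
    preds_b = [p for p, g in zip(y_pred, groups) if g == b]
    return _rate(preds_a) - _rate(preds_b)


def equal_opportunity_diff(y_true, y_pred, groups, a, b) -> float:
    """True-positive-rate gap: P(pred=1 | y=1, group a) - P(pred=1 | y=1, group b)."""
    hits_a = [p for t, p, g in zip(y_true, y_pred, groups) if g == a and t == 1]
    hits_b = [p for t, p, g in zip(y_true, y_pred, groups) if g == b and t == 1]
    return _rate(hits_a) - _rate(hits_b)


if __name__ == "__main__":
    y_true = [1, 1, 0, 0, 1, 1, 0, 0]
    y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
    groups = ["a"] * 4 + ["b"] * 4
    print(demographic_parity_diff(y_pred, groups, "a", "b"))
    print(equal_opportunity_diff(y_true, y_pred, groups, "a", "b"))
```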

For systems using Retrieval-Augmented Generation (RAG), evaluate the quality of retrieved data and assess the risk of data poisoning in your knowledge base. Add content filters to block offensive or sensitive outputs. For high-stakes decisions, implement human-in-the-loop validation to ensure accountability.

Conduct Data Protection Impact Assessments (DPIAs) to confirm compliance with regulations like GDPR, especially for systems involving profiling or automated decisions. Tools like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) can help explain how features influence your model’s predictions, making it easier for auditors and users to understand decisions. Finally, establish mechanisms for users to challenge AI-driven outcomes.

At NAITIVE AI Consulting Agency, we specialize in helping organizations design and implement thorough assessment programs aligned with frameworks such as the NIST AI Risk Management Framework, ISO/IEC 42001, and the EU AI Act. This ensures your systems are technically sound and compliant, ready to face audit scrutiny.

Step 5: Set Up Monitoring and Continuous Improvement

Passing an audit isn’t the finish line - it’s more like a checkpoint. AI systems tend to drift from their original settings over time, which can lead to unexpected or even problematic outcomes if they aren’t regularly monitored. Having a strong monitoring system in place helps catch these issues as they arise. As ApX Machine Learning explains:

"Think of audit trails as the detailed flight recorder for your ML systems. Without them, understanding why a model behaved unexpectedly, proving compliance, or tracing the lineage of a deployed model becomes exceedingly difficult."

By building on your existing documentation and risk assessments, continuous monitoring ensures your systems stay in check and ready for future audits. Let’s break down how to set up monitoring, manage version control, and prepare for those “what if” moments.

Deploy Monitoring and Alert Systems

The first step is to implement a monitoring strategy tailored to the risk level of your system. For lower-risk applications, simple flow logging - tracking timestamps and outcomes - might be enough. But for high-stakes systems, like those used in financial or safety-critical environments, you’ll need detailed audit trails with tamper-proof logs.

To avoid slowing down production, log monitoring data asynchronously to a dedicated message queue. Use machine-readable formats like JSON to structure your logs and include metadata essential for audits.
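Python's standard library already supports this pattern. `logging.handlers.QueueHandler` puts records on a queue, and `QueueListener` drains that queue on a background thread, so the calling thread does no file I/O. The sketch below writes one JSON object per line. The field names (`model_version`, `request_id`, and so on) are hypothetical audit metadata. In production, the listener's handler would typically ship records to a message broker or log pipeline rather than a local file.

```python
import json
import logging
import logging.handlers
import queue


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON line, including audit fields passed via `extra`."""

    AUDIT_FIELDS = ("model_version", "request_id", "decision", "confidence")  # hypothetical

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "ts": record.created,          # epoch seconds when the event was logged
            "level": record.levelname,
            "event": record.getMessage(),
        }
        for key in self.AUDIT_FIELDS:
            if hasattr(record, key):
                payload[key] = getattr(record, key)
        return json.dumps(payload)


def start_audit_logger(path: str):
    """Return (logger, listener); call listener.stop() at shutdown to drain the queue."""
    q: queue.Queue = queue.Queue(-1)  # unbounded, so logging never blocks the caller
    file_handler = logging.FileHandler(path)
    file_handler.setFormatter(JsonFormatter())
    listener = logging.handlers.QueueListener(q, file_handler)
    listener.start()                  # background thread performs the writes
    logger = logging.getLogger("audit")
    logger.handlers.clear()           # avoid duplicate handlers if called more than once
    logger.setLevel(logging.INFO)
    logger.addHandler(logging.handlers.QueueHandler(q))
    logger.propagate = False
    return logger, listener


if __name__ == "__main__":
    import os
    import tempfile
    path = os.path.join(tempfile.mkdtemp(), "audit.jsonl")
    logger, listener = start_audit_logger(path)
    logger.info("loan_decision", extra={"model_version": "v1.4.2", "request_id": "r-123",
                                        "decision": "refer", "confidence": 0.62})
    listener.stop()                   # processes queued records before returning
    with open(path) as fh:
        print(fh.read().strip())
```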

Automated alerts are another must-have. These should flag issues like model drift, data quality problems, or changes in feature attribution. In high-security environments, integrate these logs into a centralized SIEM (e.g., Microsoft Sentinel) to quickly identify anomalies. Protect sensitive monitoring data with strict role-based access controls.
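A common way to quantify drift in a single feature is the Population Stability Index (PSI). It compares the binned distribution seen in production against a reference sample: PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the expected and actual bin proportions. The sketch below is a minimal version. The bin count, the small epsilon for empty bins, and the widely used rule of thumb that a PSI above about 0.2 signals significant drift are conventions, not regulatory thresholds.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between a reference sample and a production sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference feature

    def proportions(sample: list[float]) -> list[float]:
        counts = [0] * bins
        for x in sample:
            # Clamp so production values outside the reference range land in the edge bins.
            i = min(max(int((x - lo) / width), 0), bins - 1)
            counts[i] += 1
        # eps keeps the log finite when a bin is empty.
        return [max(c / len(sample), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_alert(expected: list[float], actual: list[float], threshold: float = 0.2) -> bool:
    return psi(expected, actual) > threshold


if __name__ == "__main__":
    reference = [i / 100 for i in range(1000)]
    production = [x + 3 for x in reference]  # hypothetical shifted feature
    print(round(psi(reference, production), 3), drift_alert(reference, production))
```

Running a check like this per feature on a schedule, and alerting only when the threshold is crossed, keeps alert volume manageable.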

| Monitoring Tier | Recommended Use Case | Key Controls |
|---|---|---|
| Tier 1: Basic Flow | Moderate-stakes, testing environments | Track data flows, timestamps, and outcomes to reconstruct events. |
| Tier 2: Explicit Reasoning | Regulated activities, customer-facing systems | Log explanations, confidence scores, and reasoning behind decisions. |
| Tier 3: Comprehensive | High-risk finance, autonomous systems | Record decision trees, contextual risk factors, and use tamper-proof cryptographic logging. |
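Tier 3's cryptographic logging is often implemented as a hash chain. Each entry stores the hash of the previous entry, so editing or deleting any past record breaks verification from that point on. Strictly speaking, this makes the log tamper-evident rather than tamper-proof. The sketch below is a minimal in-memory version that assumes SHA-256 over canonical JSON. A production system would also anchor the latest hash somewhere the log writer cannot modify, such as a write-once store.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: dict) -> str:
    # Canonical JSON (sorted keys, no spaces) so the same event always hashes the same way.
    body = json.dumps(event, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


class HashChainLog:
    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, event: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        h = _digest(prev, event)
        self.entries.append({"event": dict(event), "prev": prev, "hash": h})
        return h

    def verify(self) -> int:
        """Return the index of the first broken entry, or -1 if the chain is intact."""
        prev = GENESIS
        for i, entry in enumerate(self.entries):
            if entry["prev"] != prev or _digest(prev, entry["event"]) != entry["hash"]:
                return i
            prev = entry["hash"]
        return -1


if __name__ == "__main__":
    log = HashChainLog()
    log.append({"decision": "approve", "model": "v1"})
    log.append({"decision": "deny", "model": "v1"})
    print("intact" if log.verify() == -1 else "tampered")
```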

Create Maintenance and Version Control Processes

Keeping track of changes is crucial for maintaining control over your AI systems. Integrate audit logging into your MLOps pipeline to ensure every lifecycle event is recorded immutably. Tools like MLflow or SageMaker can help you manage model registries, keeping a clear history of code versions, data sources, and deployments.

When a model’s performance dips below acceptable levels, retraining workflows should kick in automatically. These workflows, combined with CI/CD mechanisms, allow for quick rollbacks if needed. Document the reasoning behind decisions, especially for systems that rely on complex logic or confidence scores.

Always keep recent, production-ready versions on hand to enable swift rollbacks if something goes wrong.
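The sketch below is a minimal, hypothetical registry that keeps the last few production versions so a rollback is a single call. It is an in-memory illustration of the idea, not the MLflow or SageMaker API; those tools provide their own registries. Each entry ties the model version to its pipeline code revision and training-data snapshot, so a rollback reverts all three together.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelVersion:
    version: str
    code_commit: str    # pipeline code revision
    data_snapshot: str  # training-data version or hash


class ProductionRegistry:
    def __init__(self, keep: int = 3):
        # Bounded history: the oldest versions fall off once `keep` is exceeded.
        self.history: deque = deque(maxlen=keep)

    @property
    def current(self) -> ModelVersion:
        return self.history[-1]

    def promote(self, mv: ModelVersion) -> None:
        self.history.append(mv)

    def rollback(self) -> ModelVersion:
        """Retire the current version and return the previous one, now serving."""
        if len(self.history) < 2:
            raise RuntimeError("no earlier production version retained")
        self.history.pop()
        return self.current


if __name__ == "__main__":
    reg = ProductionRegistry(keep=3)
    reg.promote(ModelVersion("1.0", "a1b2c3", "data-2025-01"))
    reg.promote(ModelVersion("1.1", "d4e5f6", "data-2025-03"))
    print("rolled back to", reg.rollback())
```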

Prepare Contingency and Disaster Recovery Plans

Even with strong monitoring in place, unexpected issues can arise. That’s why it’s critical to have contingency plans ready.

Include kill switches in your infrastructure to immediately disable problematic model features or routes. Define severity levels - for example, Sev-1 for sensitive data leaks and Sev-2 for policy violations - to ensure the right recovery plan is triggered.

Design a “safe mode” that keeps your system running with limited functionality during failures. This could involve using a simpler model, applying stricter prompts, or increasing abstention thresholds. For high-risk systems, consider adding human oversight during these periods to catch errors automated processes might miss.
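The kill-switch, severity-level, and safe-mode ideas can be combined in a small routing layer. In this hypothetical sketch, reported incidents change the route's mode by severity. Sev-1 disables the model route entirely. Sev-2 switches it to safe mode, where a simpler fallback model answers and low-confidence cases go to a human. The class names, the `ESCALATE_TO_HUMAN` marker, and the 0.8 abstention threshold are illustrative assumptions.

```python
from enum import Enum
from typing import Callable

Model = Callable[[str], tuple[str, float]]  # returns (answer, confidence)


class Severity(Enum):
    SEV1 = 1  # e.g., sensitive data leak
    SEV2 = 2  # e.g., policy violation


class Mode(Enum):
    NORMAL = "normal"
    SAFE = "safe"
    DISABLED = "disabled"


class ModelRoute:
    def __init__(self, primary: Model, fallback: Model, safe_min_confidence: float = 0.8):
        self.primary, self.fallback = primary, fallback
        self.safe_min_confidence = safe_min_confidence
        self.mode = Mode.NORMAL

    def report_incident(self, severity: Severity) -> None:
        """Kill switch: Sev-1 disables the route; Sev-2 degrades a normal route to safe mode."""
        if severity is Severity.SEV1:
            self.mode = Mode.DISABLED
        elif self.mode is Mode.NORMAL:
            self.mode = Mode.SAFE

    def handle(self, request: str) -> str:
        if self.mode is Mode.DISABLED:
            return "ESCALATE_TO_HUMAN"
        if self.mode is Mode.SAFE:
            answer, confidence = self.fallback(request)
            # Stricter abstention in safe mode: anything below threshold goes to a person.
            return answer if confidence >= self.safe_min_confidence else "ESCALATE_TO_HUMAN"
        answer, _ = self.primary(request)
        return answer
```

Keeping the mode in one place makes the "single-button" recovery described next straightforward: on-call staff flip the mode, and every request immediately follows the new path.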

When rolling back, ensure all components are reverted as a unified set. Provide on-call staff with streamlined tools - like a single-button process - to execute recovery actions quickly. After any incident, conduct a post-mortem review within a week. Use the findings to update your recovery procedures and improve resilience.

For organizations looking to strengthen their AI systems, NAITIVE AI Consulting Agency offers expertise in building robust monitoring and recovery frameworks aligned with standards like NIST AI RMF and ISO/IEC 42001. Their guidance ensures your AI infrastructure stays ready for whatever challenges come next.

Conclusion

The five steps outlined earlier provide a solid framework for creating AI systems that are both resilient and compliant. Preparing for audits isn't just about meeting regulations - it's about building systems that are reliable and trustworthy. By adopting these practices, organizations can shift compliance from being a reactive challenge to a strategic advantage. In fact, organizations that embrace systematic auditability have reported a 30% drop in compliance-related incidents and a 25% boost in operational efficiency.

The clock is ticking. With major regulations like the EU AI Act imposing hefty penalties and deadlines like August 2026 for high-risk systems just around the corner, the urgency is clear. But there’s also significant potential: the AI Governance market is expected to grow at a 49.2% CAGR through 2034, and over 50% of compliance officers are now incorporating AI into their workflows, a sharp rise from 30% in 2023. Shifting audit-readiness into a continuous improvement strategy not only builds trust but also enhances operational performance. As the Compliance & Risks Marketing Team aptly puts it:

"The journey from reactive checklists to proactive, AI-powered resilience is a marathon, not a sprint".

These numbers highlight how a proactive approach to compliance doesn’t just mitigate risks - it can also drive efficiency. Start with simple steps like creating an AI system register that documents each tool, its purpose, and its risk level. Implement immutable logging to maintain a tamper-proof record of every decision made. Stress-test your documentation by conducting mock audits with internal "red teams" to ensure readiness before regulators step in.

The gap between perceived compliance and actual audit-readiness is striking. While 78% of organizations believe they align with AI safety principles, only 29% have implemented verifiable practices. Bridging this divide means moving beyond traditional MLOps, which focus on performance, to a compliance-first approach that emphasizes transparency, traceability, and human oversight.

To stay ahead, organizations need to build audit-ready AI systems from the ground up. For expert guidance, NAITIVE AI Consulting Agency offers tailored solutions aligned with frameworks like NIST AI RMF and ISO/IEC 42001. Their expertise ensures your AI infrastructure not only meets today’s compliance requirements but remains adaptable as regulations and technologies evolve.

FAQs

Which AI systems are considered “high-risk” for audit purposes?

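AI systems classified as "high-risk" are subject to more stringent regulations, like those outlined in the EU AI Act. Under that Act, high-risk systems include AI used as a safety component of products covered by EU product-safety legislation, as well as AI used in the areas listed in its Annex III, such as biometrics, critical infrastructure, education, employment, access to essential services like credit scoring, law enforcement, migration and border control, and the administration of justice. These systems must undergo conformity assessments and implement accountability frameworks to meet both legal and ethical requirements.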

What evidence do auditors expect beyond Model Cards and Data Cards?

Auditors typically expect additional evidence, such as documentation covering governance, project management, data quality, evaluation methods, and organizational oversight. This includes defined roles and responsibilities, risk assessments, tracked performance metrics, and detailed compliance measures. Keeping detailed records not only helps with audit preparation but also demonstrates accountability.

How do we stay audit-ready after a model update or data drift?

To stay prepared for audits after a model update or data drift, prioritize ongoing performance monitoring. Keep detailed records of all retraining efforts or modifications, and consistently assess data quality and potential bias. Implement real-time validation processes and carry out transparency checks to maintain compliance and uphold accountability.