How to Monitor AI Bias with Human Oversight

Human oversight is essential for detecting and fixing AI bias. This guide covers risk-based human-in-the-loop (HITL) review, diverse teams, monitoring tools, and continuous feedback for fair, compliant AI.

AI bias can lead to unfair outcomes, damage trust, and even result in legal penalties. To address this, human oversight plays a critical role in ensuring AI systems make ethical and accurate decisions. Here’s a quick summary of how organizations can effectively monitor AI bias:

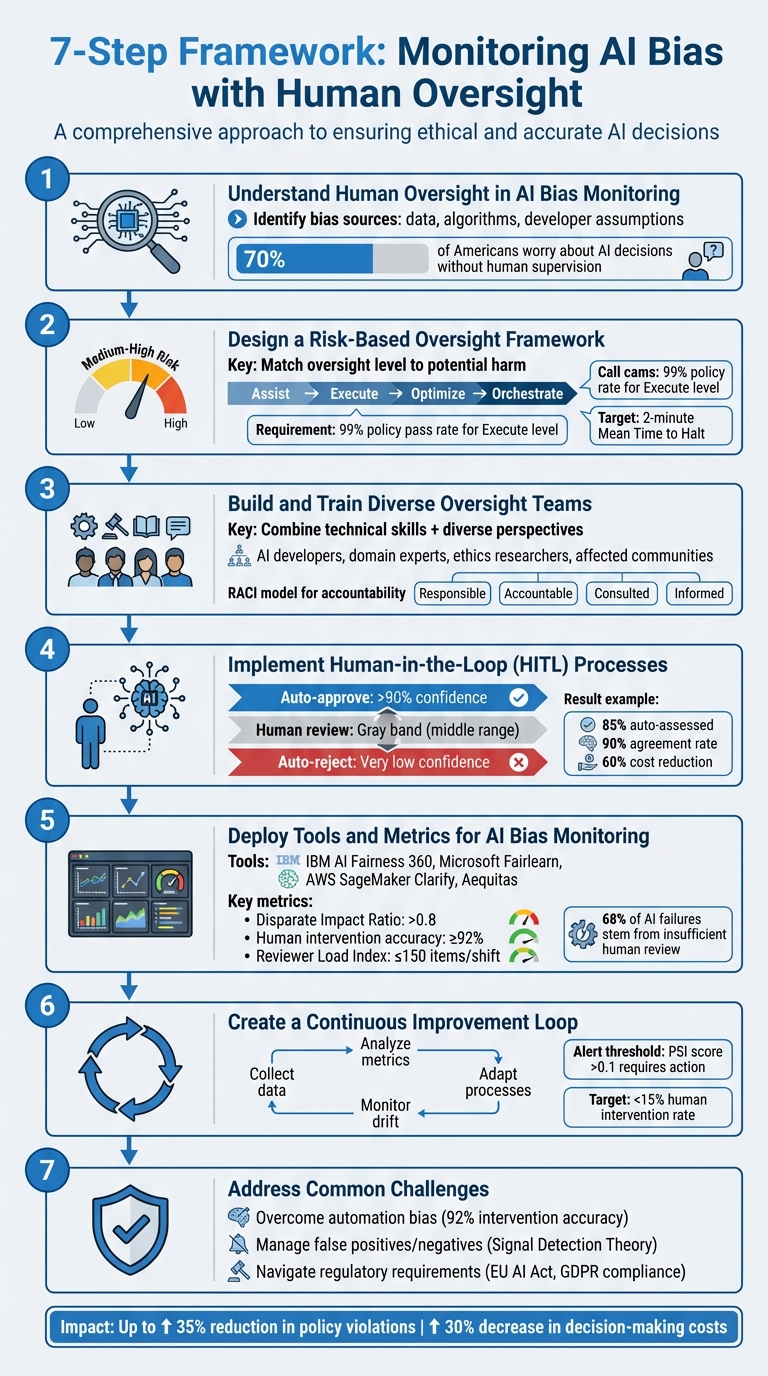

- Understand Bias and Oversight: Bias arises from flawed data, algorithms, or assumptions. Human reviewers act as gatekeepers to identify and mitigate these issues.

- Risk-Based Oversight: Tailor oversight to the level of risk. High-stakes applications (e.g., healthcare, finance) require stricter controls and dual human verification.

- Build Diverse Teams: Combine technical skills with diverse perspectives to challenge AI outputs and address potential biases.

- Human-in-the-Loop (HITL): Incorporate checkpoints where human judgment can review and adjust AI decisions in real time.

- Use Monitoring Tools: Leverage tools like IBM’s AI Fairness 360 or Microsoft’s Fairlearn to track bias metrics and ensure compliance.

- Continuous Improvement: Regularly collect feedback, analyze data, and refine oversight processes to adapt to new challenges.

- Address Challenges: Tackle issues like automation bias, false positives, and regulatory compliance with clear protocols and training.

7-Step Framework for Monitoring AI Bias with Human Oversight


Step 1: Understand Human Oversight in AI Bias Monitoring

Before diving into oversight strategies, it’s important to understand why bias happens and how human judgment acts as a safeguard. AI systems process patterns in data but lack moral reasoning or the ability to consider context. Knowing where biases originate highlights the importance of active human involvement.

Why AI Bias Happens

Bias in AI can creep in at many stages - data collection, labeling, model training, or deployment. For instance, data bias occurs when training datasets don’t accurately reflect the population. A well-known example is facial recognition systems that struggle with darker skin tones because the training data skewed heavily toward lighter-skinned individuals. Algorithmic bias can also emerge, such as hiring tools that favor majority groups. Even developer biases, whether intentional or not, can seep into systems, reinforcing stereotypes like gender assumptions in translation tools.

One particularly harmful type of bias is selection bias. If the data used to train a system doesn’t represent the real-world population, the system’s predictions will be skewed. Predictive policing offers a stark example: when unrepresentative data is fed into these systems, they perpetuate and amplify existing inequalities. Without consistent monitoring, biased outputs can spiral into feedback loops, further entrenching systemic issues.
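A representativeness check like the one described above can be sketched in a few lines. This is a minimal illustration, assuming you have group counts for the training set and reference population shares; the group names, numbers, and the 5-point tolerance are hypothetical, not taken from any real dataset.

```python
def representation_gaps(train_counts, reference_shares, tolerance=0.05):
    """Return groups whose share of the training data differs from the
    reference population share by more than `tolerance` (absolute)."""
    total = sum(train_counts.values())
    if total == 0:
        raise ValueError("training set is empty")
    gaps = {}
    for group, ref_share in reference_shares.items():
        train_share = train_counts.get(group, 0) / total
        diff = train_share - ref_share
        if abs(diff) > tolerance:
            gaps[group] = round(diff, 3)  # positive = over-represented
    return gaps


# Hypothetical counts and population shares, for illustration only.
train_counts = {"group_a": 800, "group_b": 150, "group_c": 50}
reference_shares = {"group_a": 0.60, "group_b": 0.25, "group_c": 0.15}
gaps = representation_gaps(train_counts, reference_shares)
print(gaps)
```

Groups that appear in the result are candidates for human review before training, since a skewed sample is one of the earliest places selection bias can be caught.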

The Role of Human Oversight

Given these challenges, human oversight becomes essential to counterbalance AI’s limitations. Human reviewers act as gatekeepers, ensuring AI outputs are accurate and fair before they affect real-world decisions. Mary Kryska, EY Americas AI and Data Responsible AI Leader, emphasizes this point:

"AI still relies on humans for creation and regulation because people can recognize when AI doesn't have the full picture - or worse, when it portrays a flawed or false picture"

― Mary Kryska, EY Americas AI and Data Responsible AI Leader

For oversight to work, systems need controllability - tools that allow operators to edit outputs in real time or even halt operations entirely. Human reviewers bring the contextual understanding that algorithms lack, helping to differentiate between legitimate societal patterns and harmful biases. This is particularly vital in sensitive fields like healthcare and law, where AI’s lack of moral judgment can lead to dangerous outcomes.

The importance of oversight is underscored by public concern: 70% of Americans worry about AI making major decisions without enough human supervision. Additionally, 71% of organizations using AI view human oversight as critical for maintaining public trust. Without this layer of accountability, companies risk legal trouble, financial losses, and damaged reputations. Understanding these stakes lays the groundwork for creating robust oversight frameworks, which will be explored in the next steps.

Step 2: Design a Risk-Based Oversight Framework

Once you've grasped why bias occurs and the importance of human oversight, it's time to focus on tailoring oversight based on the level of risk involved.

The risks associated with AI systems vary widely. For instance, a chatbot offering cooking tips poses minimal risk, while AI systems used for screening loans or diagnosing medical conditions carry much higher stakes. The goal is to match the level of oversight to the potential harm. The EU AI Act emphasizes this approach: "The oversight measures shall be commensurate with the risks, level of autonomy and context of use of the high-risk AI system". In other words, the higher the risk, the stricter the oversight. By combining an understanding of bias with a clear risk assessment, you can create a tailored oversight strategy.

Identify High-Risk Applications

Begin by identifying situations where biased AI decisions could lead to serious consequences. Sectors like healthcare, finance, law enforcement, and hiring are commonly flagged as high-risk because errors in these areas can directly impact people's rights, safety, or legal standing. For example, biometric identification systems used in security settings should require verification by at least two individuals before any action is taken. This helps prevent issues like wrongful detention or denial of services due to misidentification.

But don't stop at the obvious. Consider indirect impacts as well. For instance, an AI system managing childcare benefits might primarily affect parents, but children also bear the consequences of errors. The UK Information Commissioner’s Office highlights a critical issue: "non-meaningful human review is caused by automation bias or a lack of interpretability. As a result, people may not be able to exercise their right to not be subject to solely automated decision making with significant effects". Document all groups influenced by your AI's decisions and evaluate whether mistakes could infringe on privacy, equality, or legal rights.

Once you've mapped out high-risk areas, adjust the level of human oversight to match the potential harm.

Match Oversight to Risk Levels

Set clear oversight levels to align human involvement with the severity of risks. Common levels include:

- Assist: AI provides suggestions, but humans make the final decisions.

- Execute: AI operates within strict boundaries, with human reviews in place.

- Optimize: AI adjusts parameters to improve performance over time.

- Orchestrate: AI manages workflows with minimal human input.

For systems operating at the Execute level, ensure a high policy pass rate - at least 99% - before reducing human checks. High-risk tasks, such as identification in security contexts, should always require dual human verification. On the other hand, lower-risk recommendations might only need periodic sampling reviews.
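One way to make these rules explicit is a small policy function. The sketch below encodes the 99% pass-rate gate and dual verification from the text; the risk labels and the sampling rates (20% and 5%) are assumptions chosen for illustration and should be set by your own governance board.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OversightPolicy:
    reviewers_required: int  # humans who must sign off on each reviewed decision
    sample_rate: float       # fraction of decisions routed to human review


def oversight_for(risk: str, policy_pass_rate: float) -> OversightPolicy:
    """Map a risk label and observed policy pass rate to an oversight level."""
    if risk == "high":
        # High-risk tasks always get dual human verification.
        return OversightPolicy(reviewers_required=2, sample_rate=1.0)
    if policy_pass_rate < 0.99:
        # Below the 99% gate, do not reduce human checks yet.
        return OversightPolicy(reviewers_required=1, sample_rate=1.0)
    if risk == "medium":
        return OversightPolicy(reviewers_required=1, sample_rate=0.20)  # assumed rate
    return OversightPolicy(reviewers_required=1, sample_rate=0.05)      # assumed rate


print(oversight_for("high", 0.999))
print(oversight_for("low", 0.995))
```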

Critical systems must also include "stop" buttons and rollback features to allow immediate intervention. The goal is to achieve a Mean Time to Halt of two minutes or less. This ensures that if a problem arises, your monitoring team can freeze operations and reverse any decisions instantly. As the Pedowitz Group explains: "Oversight = clear ownership + controllable autonomy + complete observability". Without these safeguards, your oversight framework would amount to little more than paperwork, offering no real protection.

Step 3: Build and Train Diverse Oversight Teams

Once your risk framework is ready, it’s time to assemble a team that can actively identify and address bias. This group needs to blend technical know-how with diverse perspectives to challenge automated decisions effectively. Without this balance, even the most well-designed framework will fall short.

Assemble the Right Team

Start by combining technical skills with real-world expertise. Your team should include AI developers and data scientists who understand the limits of machine learning models, alongside domain experts like HR professionals for hiring systems, healthcare practitioners for medical AI, or financial specialists for loan algorithms. Add ethics researchers and, importantly, representatives from the communities most impacted by your AI’s decisions.

A standout example comes from January 2025, when the Partnership on AI brought together a working group of 16 experts from six countries, including the US, UK, Canada, South Africa, Netherlands, and Australia. This group, which featured seven equity specialists focused on areas like data justice, racial justice, LGBTQ+ rights, and disability advocacy, developed demographic data guidelines. By incorporating intersectionality into their framework, they ensured fairness assessments addressed the needs of marginalized communities.

Including affected communities isn’t just a nice-to-have - it’s essential. As the Partnership on AI emphasizes:

"Without the voices of those most affected in its development and deployment, we risk deepening the very divides we hope to close" ― Partnership on AI

To ensure clear accountability, implement a RACI model (Responsible, Accountable, Consulted, Informed) for all AI workflows. Governance boards can establish policy standards and set autonomy levels, while channel owners use weekly scorecards to decide whether to continue or pause AI operations.

Once your team is in place, equip them with the training needed to critically evaluate AI outputs.

Train for Awareness and Critical Thinking

A diverse team is only as effective as its training. To make meaningful oversight possible, focus on combating automation bias. The UK Information Commissioner’s Office highlights a common risk:

"users of AI decision-support systems may become hampered in their critical judgment and situational awareness as a result of an overconfidence in the objectivity, or certainty of the AI system" ― UK Information Commissioner's Office

Training should help team members strike a balance - avoiding blind trust in AI while not dismissing accurate outputs. AI operates on probabilities, not absolutes, so it’s crucial to teach teams how to interpret confidence intervals, error margins, and explainability tools that clarify decision-making processes. In fact, the EU AI Act mandates AI literacy for anyone managing high-risk systems.

Practical exercises can make this training stick. For example:

- "Mystery shopping" exercises involve feeding misleading inputs into the system to test how well it handles edge cases.

- Monthly kill-switch drills ensure teams can halt AI operations within two minutes if needed.

- Clear override protocols should be in place, requiring teams to document every instance where they reject an AI recommendation, along with their reasoning.
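To check whether drills meet the two-minute target, you can compute a Mean Time to Halt from drill logs. This is a minimal sketch that assumes each drill is recorded as a pair of timestamps in seconds (alert raised, operations halted); the sample values are made up.

```python
TARGET_SECONDS = 120  # two-minute target from the drill requirement


def mean_time_to_halt(drills):
    """drills: list of (alert_time_s, halted_time_s) pairs from kill-switch drills."""
    durations = [halted - alerted for alerted, halted in drills]
    return sum(durations) / len(durations)


# Hypothetical drill log: three drills, times in seconds.
drills = [(0, 95), (0, 140), (0, 80)]
mtth = mean_time_to_halt(drills)
slow_drills = [d for d in drills if d[1] - d[0] > TARGET_SECONDS]

print(f"Mean Time to Halt: {mtth:.0f}s, drills over target: {len(slow_drills)}")
```

Tracking the individual slow drills matters as much as the average, because a single slow response can hide behind a good mean.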

Resources like MIT’s "AI Blindspot Cards" can also be invaluable. These cards guide teams through workshops to uncover hidden risks in areas like data representation, explainability, and optimization criteria.

The ultimate goal is straightforward: equip your team to identify when the AI gets it wrong - and empower them to step in. Without this level of preparation, oversight risks becoming symbolic rather than a meaningful safeguard.

Step 4: Implement Human-in-the-Loop (HITL) Processes

Incorporate human judgment into your AI workflows by creating checkpoints where decisions can be reviewed and adjusted before being finalized. This approach allows for strategic human intervention, especially in scenarios where errors could have serious consequences. By doing so, you can reduce bias and maintain ethical standards in decision-making.

Define HITL Models

HITL systems rely on confidence-based routing to determine when human involvement is necessary. A three-tier system can be particularly effective: automatically approve outputs with high confidence (over 90%), reject those with very low confidence, and send intermediate cases - the "gray band" - to human reviewers for closer examination. Before deployment, run the AI in shadow mode to fine-tune these thresholds and identify potential bias patterns. For example, a Turing HITL model managed to auto-assess 85% of developer submissions, achieving a 90% agreement rate with experts while reducing human workload to 30% of cases and cutting decision costs by 60%.
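The three-tier routing described above can be sketched as a single function. The 0.90 approval threshold comes from the text; the 0.30 rejection threshold is an assumed placeholder that you would tune during shadow mode.

```python
def route(confidence: float, approve_above: float = 0.90, reject_below: float = 0.30) -> str:
    """Route a model output based on its confidence score (0.0 to 1.0)."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence > approve_above:
        return "auto_approve"
    if confidence < reject_below:
        return "auto_reject"
    return "human_review"  # the "gray band"


for score in (0.97, 0.55, 0.10):
    print(score, route(score))
```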

Beyond confidence scores, use additional routing rules to flag outputs for review. These could include validator failures (e.g., missing citations), policy triggers (such as sensitive demographic details in loan applications), or novelty signals (data significantly different from the model's training set). Train human reviewers to flag issues using standardized codes like "INCORRECT", "UNSAFE", or "BIASED", and ensure all overrides are documented. This structured feedback loop not only improves the model but also supports compliance efforts.
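These extra routing rules can sit alongside confidence routing. The sketch below is illustrative only: the field names (`requires_citation`, `input_fields`, `novelty_score`), the sensitive-field list, and the 0.8 novelty cutoff are all assumptions about how your pipeline represents an output.

```python
from enum import Enum


class ReasonCode(Enum):
    """Standardized codes reviewers attach when flagging an output."""
    INCORRECT = "INCORRECT"
    UNSAFE = "UNSAFE"
    BIASED = "BIASED"


SENSITIVE_FIELDS = {"race", "gender", "age", "religion"}  # assumed list


def review_triggers(output: dict) -> list:
    """Return the names of routing rules that send this output to a human."""
    triggers = []
    if output.get("requires_citation") and not output.get("citations"):
        triggers.append("validator_failure")
    if SENSITIVE_FIELDS & set(output.get("input_fields", [])):
        triggers.append("policy_trigger")
    if output.get("novelty_score", 0.0) > 0.8:  # assumed cutoff
        triggers.append("novelty_signal")
    return triggers


example = {
    "requires_citation": True,
    "citations": [],
    "input_fields": ["income", "gender"],
    "novelty_score": 0.2,
}
print(review_triggers(example))
```

Using an enum for reason codes keeps reviewer feedback consistent, so flagged cases can be counted and fed back into retraining.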

Set Up Override Mechanisms

Once you've defined your HITL models, the next step is to implement override protocols for real-time decision control. For high-stakes decisions - such as financial transactions, medical summaries, or hiring recommendations - use synchronous approvals that pause the AI for human sign-off. These add minimal delays (0.5–2.0 seconds) while ensuring critical decisions are reviewed. For lower-risk scenarios, asynchronous audits allow the AI to operate autonomously, with decisions logged for later review. This approach helps identify and address biased patterns without slowing down operations.

Organize review tasks by priority, with P0 for safety and legal issues, P1 for customer-facing decisions, and P2 for internal or low-risk items. To handle unexpected spikes, integrate a kill switch that lets supervisors pause auto-approvals when needed. The EU AI Act emphasizes the importance of human oversight, stating that "high‐risk AI systems shall be designed and developed in such a way... that they can be effectively overseen by natural persons during the period in which they are in use". Real-time dashboards can help supervisors monitor AI performance and quickly intervene if something goes wrong.
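A priority-ordered review queue with a kill switch might look like the following. This is a simplified in-memory sketch; the class and method names are hypothetical, and a production system would use a durable queue.

```python
import heapq
import itertools

PRIORITY = {"P0": 0, "P1": 1, "P2": 2}  # lower number = reviewed first


class ReviewQueue:
    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker keeps FIFO order within a priority
        self.auto_approve_enabled = True

    def add(self, item_id: str, priority: str) -> None:
        heapq.heappush(self._heap, (PRIORITY[priority], next(self._counter), item_id))

    def next_item(self):
        """Pop the most urgent item, or None if the queue is empty."""
        return heapq.heappop(self._heap)[2] if self._heap else None

    def kill_switch(self) -> None:
        """Pause auto-approvals so every output goes to a human."""
        self.auto_approve_enabled = False


queue = ReviewQueue()
queue.add("internal-report", "P2")
queue.add("safety-incident", "P0")
queue.add("customer-reply", "P1")
queue.add("legal-hold", "P0")
order = [queue.next_item() for _ in range(4)]
print(order)
```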

To ensure transparency and accountability, maintain an audit trail for every override. Document who made the change, what was altered, and the reasoning behind it. This not only supports compliance but also aids in refining the system over time.
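An audit record for overrides can be as simple as a structured log line. The sketch below assumes a JSON-lines log and uses hypothetical field names; it rejects overrides that arrive without a rationale, so the "reasoning behind it" is never missing.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass
class OverrideRecord:
    decision_id: str
    reviewer_id: str    # who made the change
    ai_output: str      # what the AI proposed
    human_output: str   # what the reviewer changed it to
    reason_code: str    # e.g. "INCORRECT", "UNSAFE", "BIASED"
    rationale: str      # the reasoning behind the override
    timestamp: str = ""

    def __post_init__(self):
        if not self.rationale.strip():
            raise ValueError("an override must include the reviewer's reasoning")
        if not self.timestamp:
            self.timestamp = datetime.now(timezone.utc).isoformat()


def to_log_line(record: OverrideRecord) -> str:
    """Serialize one override as a single JSON line for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


record = OverrideRecord(
    decision_id="loan-1042",
    reviewer_id="rev-17",
    ai_output="reject",
    human_output="approve",
    reason_code="BIASED",
    rationale="Income verified manually; model penalized a non-standard employment history.",
)
print(to_log_line(record))
```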

Step 5: Deploy Tools and Metrics for AI Bias Monitoring

Once HITL is in place, the next step is deploying tools and metrics to effectively monitor and address emerging biases. Without this infrastructure, oversight efforts can lack the measurable impact needed to ensure fairness. By combining HITL with these tools, organizations can maintain continuous oversight and take informed, timely actions.

Key Monitoring Tools

The choice of monitoring tools should align with your technical environment and risk considerations. For example:

- IBM's AI Fairness 360: This Python-based library provides over 70 fairness metrics and 10 bias mitigation algorithms, making it a robust option for comprehensive oversight.

- Microsoft's Fairlearn: An open-source Python toolkit that fits well in Microsoft ecosystems, it helps teams assess and visualize the trade-offs between fairness and performance.

- Aequitas: Developed by the University of Chicago, Aequitas caters to both technical and non-technical users. It includes command-line tools for data scientists and web applications for generating policy-ready audit reports.

For teams using Amazon's platform, Amazon SageMaker Clarify integrates into ML pipelines. It can continuously monitor live endpoints, using statistical measures like Kullback–Leibler divergence and the Kolmogorov–Smirnov test to detect drift. Additionally, FairBench is particularly suited for large language models and vision systems, offering automated fairness reports for these data types.

Continuous monitoring is critical for identifying concept drift before biases escalate into larger issues.

Important Metrics to Track

To complement these tools, organizations must track specific metrics that measure both AI bias and the effectiveness of human oversight. Key metrics include:

- Disparate Impact Ratio: This metric evaluates fairness in areas like employment and credit decisions. Under the four-fifths rule commonly applied in US employment contexts, a ratio below 0.8 is treated as a warning sign of adverse impact.

- Demographic Parity Ratio: Measures whether positive outcomes are distributed evenly across different groups.

- Equalized Odds: Checks whether true positive and false positive rates are consistent across demographic groups.
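The three fairness metrics above can be computed from labels, predictions, and group membership. This is a plain-Python sketch rather than the API of any particular library. It computes the ratio as the lowest group selection rate divided by the highest, which is one common convention for both the disparate impact ratio and the demographic parity ratio. The sample data is invented.

```python
def rates_by_group(y_true, y_pred, groups):
    """Per-group selection rate, true positive rate, and false positive rate."""
    stats = {}
    for g in sorted(set(groups)):
        pairs = [(t, p) for t, p, x in zip(y_true, y_pred, groups) if x == g]
        pos = [p for t, p in pairs if t == 1]  # predictions where the true label is 1
        neg = [p for t, p in pairs if t == 0]  # predictions where the true label is 0
        stats[g] = {
            "selection_rate": sum(p for _, p in pairs) / len(pairs),
            "tpr": sum(pos) / len(pos) if pos else float("nan"),
            "fpr": sum(neg) / len(neg) if neg else float("nan"),
        }
    return stats


def selection_rate_ratio(stats):
    """Lowest group selection rate divided by the highest (1.0 = parity)."""
    rates = [s["selection_rate"] for s in stats.values()]
    return min(rates) / max(rates) if max(rates) > 0 else float("nan")


def equalized_odds_gap(stats):
    """Largest between-group difference in TPR or FPR (0.0 = equalized odds)."""
    tprs = [s["tpr"] for s in stats.values()]
    fprs = [s["fpr"] for s in stats.values()]
    return max(max(tprs) - min(tprs), max(fprs) - min(fprs))


# Invented example: 1 = positive outcome (e.g. approved).
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

stats = rates_by_group(y_true, y_pred, groups)
ratio = selection_rate_ratio(stats)
gap = equalized_odds_gap(stats)
print(f"selection-rate ratio: {ratio:.2f}, equalized odds gap: {gap:.2f}")
```

In this example, group "b" is selected at a third of the rate of group "a", well under the 0.8 mark, so the case would be escalated for human review.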

A recent Gartner survey revealed that 68% of AI failures stemmed from insufficient human review. To address this, organizations should also monitor oversight performance through metrics like:

- Accuracy of Human Interventions: Aim for at least 92% accuracy in human oversight tasks.

- Time-to-Decision: Tracks the speed of human review processes.

- Coverage Ratio: Measures the proportion of cases reviewed by humans.

The Reviewer Load Index is another valuable metric. By managing the number of items each reviewer handles per shift - ideally capping it at 150 - organizations can reduce fatigue-related errors by up to 22%.
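The oversight metrics above can be derived from a review log. This sketch assumes each review record carries the reviewer's decision, a later-established ground truth, and the time taken; those field names are hypothetical.

```python
def oversight_metrics(reviews, total_decisions, reviewers_on_shift):
    """Summarize human oversight performance for one shift.

    reviews: list of dicts with 'human_decision', 'ground_truth', 'seconds'.
    """
    correct = sum(r["human_decision"] == r["ground_truth"] for r in reviews)
    return {
        "intervention_accuracy": correct / len(reviews),
        "mean_time_to_decision_s": sum(r["seconds"] for r in reviews) / len(reviews),
        "coverage_ratio": len(reviews) / total_decisions,
        "reviewer_load": len(reviews) / reviewers_on_shift,  # items per reviewer
    }


# Invented shift log.
reviews = [
    {"human_decision": "approve", "ground_truth": "approve", "seconds": 40},
    {"human_decision": "reject", "ground_truth": "reject", "seconds": 60},
    {"human_decision": "approve", "ground_truth": "reject", "seconds": 50},
    {"human_decision": "reject", "ground_truth": "reject", "seconds": 50},
]
metrics = oversight_metrics(reviews, total_decisions=20, reviewers_on_shift=2)
print(metrics)
```

Comparing `intervention_accuracy` against the 92% target and `reviewer_load` against the 150-item cap gives a quick health check per shift.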

"The transformation happens when organizations move from 'deploy and hope' to continuous monitoring with actionable metrics." - Errin O'Connor, Chief AI Architect, EPC Group

Baseline metrics should be established during the training phase to set meaningful thresholds for automated alerts in production. Stratified reporting is also crucial; breaking down accuracy, sensitivity, and specificity by race, ethnicity, gender, and age helps uncover biases that overall averages might hide. Tools like FairBench can further enhance analysis by examining sensitive attributes intersectionally, such as race and gender together, to identify complex discrimination patterns.
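Stratified and intersectional reporting can be sketched with a simple grouping function. The example below is generic Python, not FairBench's API, and the attribute values and records are placeholders.

```python
from collections import defaultdict


def stratified_accuracy(records, attributes):
    """Accuracy for each combination of the given sensitive attributes."""
    buckets = defaultdict(lambda: [0, 0])  # key -> [correct, total]
    for r in records:
        key = tuple(r[a] for a in attributes)
        buckets[key][0] += int(r["y_pred"] == r["y_true"])
        buckets[key][1] += 1
    return {key: correct / total for key, (correct, total) in buckets.items()}


# Placeholder records: overall accuracy is 0.75.
records = [
    {"race": "x", "gender": "f", "y_true": 1, "y_pred": 1},
    {"race": "x", "gender": "m", "y_true": 1, "y_pred": 1},
    {"race": "y", "gender": "f", "y_true": 1, "y_pred": 0},
    {"race": "y", "gender": "m", "y_true": 0, "y_pred": 0},
]

by_race = stratified_accuracy(records, ["race"])
by_race_gender = stratified_accuracy(records, ["race", "gender"])
print(by_race)
print(by_race_gender)
```

Here the by-race view shows 0.5 accuracy for one group, while the intersectional view reveals that the errors fall entirely on one race-and-gender subgroup, which the overall average of 0.75 would hide.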

Step 6: Create a Continuous Improvement Loop

Turning AI bias monitoring into a dynamic process requires constant feedback and data analysis. Without this ongoing cycle, your system risks becoming stagnant, unable to adapt to new challenges. By building on earlier strategies, you can create a monitoring framework that stays flexible and responsive.

Collect and Analyze Data

The first step is gathering diverse feedback from your oversight process. This includes quantitative data (like ratings or binary responses), qualitative insights (open-ended feedback that captures complex issues), and implicit feedback (user actions, such as accepting or ignoring AI suggestions). Every decision should be logged with details like inputs, retrieved sources, tool calls, model versions, and reviewer IDs.

To stay ahead of potential issues, monitor model drift using measures like the Population Stability Index (PSI) and the Kolmogorov–Smirnov (KS) test. A PSI score above 0.1 indicates it's time to take action. Set up automated alerts to notify your team - via email or Slack - when fairness metrics, such as the Disparate Impact Ratio, fall below 0.8.
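A drift check with alert thresholds might look like the sketch below. It assumes a Population Stability Index computed over equal-width bins taken from the baseline data; the bin count, the small `eps` floor for empty bins, and the alert wording are illustrative choices. Actual delivery by email or Slack is left out.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline and a current sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width if the baseline is constant

    def shares(values):
        counts = [0] * bins
        for v in values:
            i = int((v - lo) / width)
            counts[min(max(i, 0), bins - 1)] += 1  # clamp out-of-range values
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_alerts(psi_value, disparate_impact, psi_limit=0.1, di_floor=0.8):
    """Return alert messages for the two thresholds mentioned in the text."""
    alerts = []
    if psi_value > psi_limit:
        alerts.append(f"PSI {psi_value:.2f} exceeds {psi_limit}")
    if disparate_impact < di_floor:
        alerts.append(f"Disparate Impact Ratio {disparate_impact:.2f} is below {di_floor}")
    return alerts


baseline = [i / 100 for i in range(100)]
shifted = [min(v + 0.3, 0.99) for v in baseline]  # synthetic drift
print(drift_alerts(psi(baseline, shifted), disparate_impact=0.72))
```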

"A Human Feedback Loop is a critical detective and continuous improvement mechanism that involves systematically collecting, analyzing, and acting upon feedback provided by human users, subject matter experts (SMEs), or reviewers." – FINOS

Evaluate oversight performance by tracking metrics like intervention accuracy, time-to-decision, and false positive/negative rates. For example, an intervention rate above 15% suggests the system fails often or routes too many cases to review, while a rate near zero can point to automation bias, where reviewers depend too heavily on AI outputs. Running a two-week pilot can help establish baseline numbers before setting permanent thresholds.

Adapt Oversight Processes

Once you've analyzed the data, use those insights to refine your oversight strategies. Regular retrospectives are essential for updating policies in response to recurring bias patterns. When issues like data drift or edge cases emerge, send them to human operators for annotation. These annotations can then serve as new ground truth data to improve model performance during retraining. This approach - focusing on enhancing data quality while keeping the model stable - is becoming a widely adopted industry practice.

To prevent burnout and inefficiencies, stick to reviewer workload limits as previously discussed. Monitoring time-to-decision metrics can also help reduce overtime costs by up to 30% through better resource allocation. Always have a kill-switch in place, complete with a rollback mechanism, to revert to a stable version if critical bias is detected. Ideally, aim for a Mean Time to Halt of two minutes or less.

This iterative process ensures that human oversight remains a core element in addressing AI bias, even as operational challenges evolve. By continuously improving your methods, your system can adapt to new demands while maintaining fairness and accountability.

Step 7: Address Common Challenges in AI Oversight

Even the best oversight frameworks face challenges in practice. Identifying these hurdles early helps create systems that stay effective, even under pressure. Below are some frequent issues and strategies to tackle them.

Overcome Automation Bias

People often place too much trust in automated systems, even when there’s evidence to question their decisions. This tendency, known as automation bias, can have serious consequences. A tragic example occurred in March 2018 when a self-driving Uber vehicle in Tempe, Arizona, fatally struck a pedestrian. The NTSB report (HAR-19/03) found that the safety driver had become distracted and had relied too heavily on the automated system.

To reduce automation bias, introduce measures that force reviewers to think critically before viewing AI outputs. For instance, require them to document their reasoning beforehand. Equip teams with tools like Uncertainty Quantification and Saliency Maps to help them better interpret AI decisions. Additionally, train staff not just on how the system works but on how to respond when it fails. In high-risk scenarios, aim for a human intervention accuracy rate of at least 92% and monitor the Reviewer Load Index to avoid errors caused by fatigue.

"The human brain is evolutionarily wired for active engagement, not for prolonged periods of inactive supervision." – Nanda Min Htin, Privacy and AI Governance Lawyer

Manage False Positives and Negatives

Another challenge is distinguishing genuine bias signals from noise. Incorrect bias flags can waste resources and undermine trust in your monitoring system. To address this, apply Signal Detection Theory (SDT) to fine-tune how your system identifies and flags errors. Configure it so only high-confidence flags trigger immediate action, while borderline cases are sent for human review. Regular evaluations can help maintain the rigor of your oversight processes.
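In Signal Detection Theory, the sensitivity index d' measures how well a flagging system separates real bias cases from noise, independent of where its threshold sits. The sketch below computes d' from a confusion matrix of bias flags, using a standard log-linear correction so rates of exactly 0 or 1 do not produce infinite values. The counts are invented.

```python
from statistics import NormalDist


def d_prime(hits, misses, false_alarms, correct_rejections):
    """Sensitivity index d' = z(hit rate) - z(false alarm rate)."""
    # Log-linear correction: add 0.5 to each count to keep rates off 0 and 1.
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    z = NormalDist().inv_cdf
    return z(hit_rate) - z(fa_rate)


# Invented counts: flags compared against human-confirmed bias cases.
good_detector = d_prime(hits=90, misses=10, false_alarms=10, correct_rejections=90)
coin_flip = d_prime(hits=50, misses=50, false_alarms=50, correct_rejections=50)
print(f"good detector d' = {good_detector:.2f}, coin flip d' = {coin_flip:.2f}")
```

A d' near zero means flags are no better than chance, so tightening the threshold will not help; a higher d' means the threshold can be tuned to trade false positives against false negatives.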

Navigate Regulatory Requirements

Oversight isn’t just about internal processes - it must also align with legal standards. Regulations like the EU AI Act (Article 14) and UK GDPR (Articles 5 and 22) require human oversight to be "meaningful." This means reviewers must have the authority and independence to challenge or override AI decisions.

To meet these requirements, keep detailed logs of human interventions and regularly evaluate the effectiveness of your oversight system. Using frameworks like the NIST AI Risk Management Framework or ISO/IEC 42001 can strengthen your approach. Assign clear responsibilities with models like RACI to avoid accountability gaps, and use standardized checklists to minimize subjective biases.

"When a regulator mandates a task that a human cannot reliably perform, they are effectively mandating a breach of duty and setting the industry up to fail." – Nanda Min Htin, Privacy and AI Governance Lawyer

For tailored advice on implementing these strategies, check out NAITIVE AI Consulting Agency, which specializes in optimizing human oversight for AI bias monitoring.

Conclusion and Next Steps

Keeping AI bias in check with human oversight is a crucial step toward creating systems that are fair, compliant, and reliable. By following the seven steps outlined earlier, you can establish a framework where human judgment bridges the gaps left by algorithms, ensuring your AI aligns with ethical principles and meets your business objectives.

To move forward, focus on turning this framework into tangible actions. Start by assigning clear ownership and accountability. Use a RACI model to designate a single human owner for each AI workflow, set up tiered approval processes for high-risk decisions, and implement a kill-switch capable of halting operations in under two minutes. Measure your progress with key performance indicators - like achieving at least 92% accuracy in human interventions for high-risk tasks and maintaining a policy compliance rate of 99% or higher. Studies show that effective oversight can cut policy violations by up to 35% while reducing decision-making costs by 30%.

Begin by running your system in shadow mode. This allows you to compare AI performance against human reviews, adjust confidence thresholds, and identify failure patterns - all critical steps in building trust in your system. Over time, as your AI demonstrates consistent quality and safety, you can gradually increase its level of autonomy.
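Shadow-mode logs can be used to compare candidate confidence thresholds before any decision is automated. This sketch assumes each logged record holds the AI's confidence, the AI's decision, and the human decision made independently; the records shown are invented.

```python
def shadow_report(records, thresholds):
    """For each candidate threshold, report the share of cases that would be
    auto-approved and how often the AI agreed with the human on those cases.

    records: (ai_confidence, ai_decision, human_decision) tuples.
    """
    report = {}
    for t in thresholds:
        auto = [(ai, human) for conf, ai, human in records if conf > t]
        agree = sum(ai == human for ai, human in auto)
        report[t] = {
            "auto_share": len(auto) / len(records),
            "agreement": agree / len(auto) if auto else None,
        }
    return report


# Invented shadow-mode log.
records = [
    (0.95, "approve", "approve"),
    (0.92, "approve", "approve"),
    (0.85, "approve", "reject"),
    (0.60, "approve", "approve"),
]
report = shadow_report(records, thresholds=[0.9, 0.8])
print(report)
```

In this example, lowering the threshold from 0.9 to 0.8 automates more cases but drops agreement with human reviewers, which is the trade-off shadow mode is meant to expose.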

On the regulatory side, the EU AI Act and the UK GDPR require "meaningful" human review, with reviewers holding full authority to override AI decisions. Make sure to log every intervention, document the reasoning behind it, and conduct regular audits to demonstrate the effectiveness of your oversight framework.

For expert guidance, NAITIVE AI Consulting Agency offers tailored solutions to match your specific risk profile. Their team can help you implement human-in-the-loop architectures, fine-tune confidence thresholds, and develop custom oversight scorecards. By combining technical expertise with practical strategies, they ensure your monitoring systems not only meet compliance standards but also provide measurable benefits - protecting your organization while fully leveraging AI's potential.

FAQs

What counts as “meaningful” human oversight?

"Meaningful" human oversight involves keeping a close eye on AI systems in a way that's both practical and suited to the specific risks and uses of the technology. This means actively monitoring how the system operates, being aware of its limitations, and staying in control through tools like audits, overrides, and approval processes. The goal is to ensure that humans can track performance, step in when necessary, and make sense of the system's outputs. This helps reduce issues like bias, mistakes, or harm. Tools such as dashboards, alerts, and manual controls play a big role in supporting this oversight.

How do I decide which AI decisions need human review?

To figure out which AI decisions need human oversight, consider the impact and potential risks tied to each decision. Pay special attention to critical areas like healthcare or finance, where mistakes could lead to harm, violate regulations, or erode trust. Ask yourself a few essential questions: What happens if the AI gets it wrong? Can the decision be undone? Does it influence safety or compliance? When the risks are high, human review should take priority.

Which bias metrics should I track first?

To tackle AI bias effectively, start by monitoring fairness metrics that spotlight disparities among different subgroups or protected attributes. Key measures to focus on include demographic parity, equalized odds, and data representativeness checks. These metrics allow you to compare outcomes across various groups and evaluate whether your data adequately reflects diverse populations. By prioritizing these checks, you can lay a solid groundwork for identifying and addressing potential biases in your AI models.