
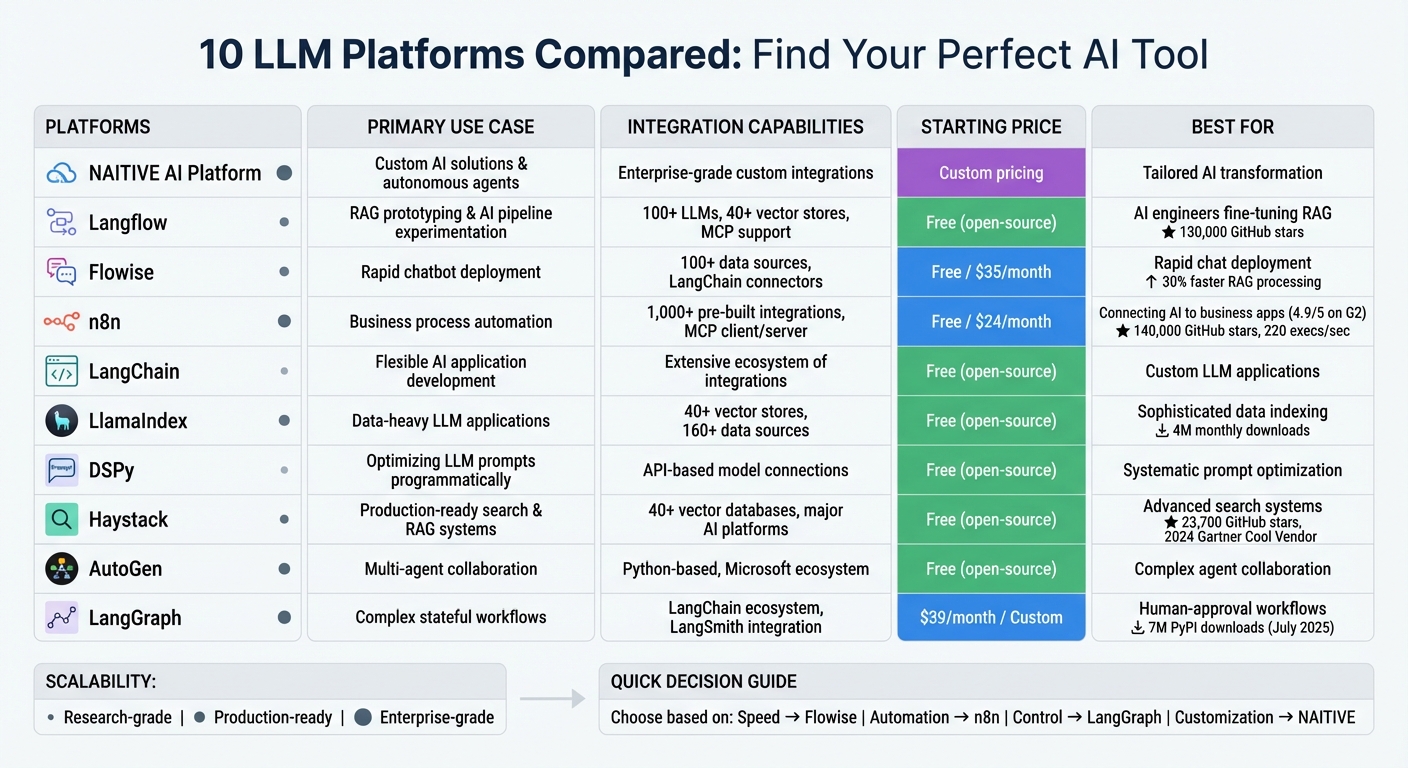

Compare 10 LLM platforms for prototyping, RAG, multi-agent systems, workflow automation, scalability, and pricing to pick the best fit for your project.

Large Language Models (LLMs) are transforming businesses, but implementing them effectively requires the right tools. This article explores ten LLM platforms that simplify AI integration, automate workflows, and improve efficiency. Here's a quick overview:

- NAITIVE AI Platform: Enterprise-focused with multi-agent systems and scalability.

- Langflow: Open-source, drag-and-drop prototyping for AI workflows.

- Flowise: Visual-first platform for building AI chatbots and workflows.

- n8n: Workflow automation with extensive integrations and coding flexibility.

- LangChain: Open-source framework for building custom LLM applications.

- LlamaIndex: Bridges LLMs with enterprise data for accurate insights.

- DSPy: High-code orchestration for precise AI logic and execution.

- Haystack: Production-ready framework for retrieval-augmented generation (RAG).

- AutoGen: Multi-agent collaboration for handling complex tasks.

- LangGraph: Graph-based orchestration for intricate workflows.

These platforms cater to different needs, from quick prototyping to enterprise-grade AI solutions. Choose based on your goals, team expertise, and integration needs.

Quick Comparison:

| Tool | Use Case | Integration Capabilities | Workflow Support | Scalability | Price |
|---|---|---|---|---|---|
| NAITIVE AI Platform | Custom enterprise solutions | Tailored integrations | Advanced multi-agent | Enterprise-grade | Custom pricing |
| Langflow | AI prototyping | Pre-built components | Visual IDE | Production-ready | Free (open-source) |
| Flowise | Chatbot deployment | LangChain connectors | Multi-agent conversations | Scalable | Free/$35+ per month |
| n8n | Process automation | 1,000+ integrations | Custom code nodes | High throughput | Free/$24+ per month |
| LangChain | Custom LLM apps | Extensive ecosystem | Modular components | Horizontally scalable | Free (open-source) |
| LlamaIndex | Data-heavy applications | 40+ vector stores | Advanced indexing | Enterprise processing | Free (open-source) |
| DSPy | Prompt optimization | API-based model connections | High-code orchestration | Research-grade | Free (open-source) |
| Haystack | RAG systems | Modular retrieval techniques | End-to-end pipelines | Production-grade | Free (open-source) |
| AutoGen | Multi-agent collaboration | Python-based integrations | Autonomous agents | Cloud-native scaling | Free (open-source) |
| LangGraph | Stateful workflows | LangChain ecosystem | Graph-based architecture | Horizontally scalable | Free (open-source); managed plans $39+/month or custom |

Select based on your requirements - whether it's automation, prototyping, or managing complex workflows.

LLM Platform Comparison: Features, Pricing, and Best Use Cases


1. NAITIVE AI Platform

The NAITIVE AI Platform embeds AI agents directly into business processes, with the aim of streamlining workflows and delivering measurable efficiency gains in enterprise operations, including complex, multi-step tasks.

Advanced Agentic AI Features

Built with agentic AI at its core, the platform supports collaborative multi-agent systems, including 24/7 AI phone agents capable of maintaining natural, human-like conversations.

Designed for Scalability

Scalability is one of the platform's standout features. It’s built to handle large-scale applications, offering flexible deployment options such as cloud-native Kubernetes, serverless architectures, or on-premise Docker setups. With features like auto-scaling, load-balancing, and a 99.9% uptime SLA, it ensures reliable performance even in demanding environments.
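
As a rough illustration of what these figures mean in practice, the sketch below converts an availability target into a downtime budget and applies the replica formula used by the Kubernetes Horizontal Pod Autoscaler, one common way auto-scaling decisions are computed. It is a generic sketch, not a description of how NAITIVE implements its SLA or scaling; the 30-day month and the CPU numbers are assumptions.

```python
import math

def downtime_budget_minutes(availability: float, period_days: float = 30) -> float:
    """Maximum downtime an availability target allows over a period, in minutes."""
    return (1 - availability) * period_days * 24 * 60

def desired_replicas(current: int, current_metric: float, target_metric: float) -> int:
    """HPA rule (ignoring its tolerance band): ceil(replicas * current_metric / target_metric)."""
    return math.ceil(current * current_metric / target_metric)

# 99.9% over a 30-day month leaves about 43.2 minutes of downtime.
print(round(downtime_budget_minutes(0.999), 1))
# 4 replicas averaging 90% CPU against a 60% target scale out to 6.
print(desired_replicas(4, 90, 60))
```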

2. Langflow

Langflow lets teams prototype and deploy applications powered by large language models (LLMs) without diving deep into code. With over 130,000 GitHub stars as of late 2025, the open-source platform is widely used by enterprises that want to move quickly from idea to production.

Simplified Prototyping and Deployment

Langflow’s drag-and-drop interface allows developers to visually design AI workflows and deploy them as REST APIs or Model Context Protocol (MCP) servers, enabling direct integration with other applications. The platform also includes a Playground feature, which lets teams test and refine their workflows before transitioning them to production. Jan Schummers, Senior Software Engineer at WinWeb, shared:

"Langflow has transformed our RAG application development."

Seamless Integration with Enterprise Systems

Langflow connects to over 40 vector stores and supports more than 100 LLMs. It also integrates with popular tools like Slack, Gmail, Google Drive, Notion, GitHub, and Confluence. For systems requiring unique solutions, Langflow’s Python-based framework enables developers to create custom components tailored to proprietary databases or legacy infrastructure.
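
Flows deployed as REST APIs can be called from any application that speaks HTTP. The sketch below builds, but does not send, a request to a flow's run endpoint. The `/api/v1/run/{flow_id}` path, the `input_value`, `input_type`, `output_type`, and `session_id` fields, and the `x-api-key` header follow Langflow's documented API at the time of writing but should be verified against your installed version; the host, flow ID, and key are placeholders.

```python
import json
import urllib.request
from typing import Optional

def build_run_request(base_url: str, flow_id: str, message: str, api_key: str,
                      session_id: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) a POST to a Langflow flow's run endpoint."""
    payload = {"input_value": message, "input_type": "chat", "output_type": "chat"}
    if session_id is not None:
        payload["session_id"] = session_id  # keep this conversation's memory separate
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/v1/run/{flow_id}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-api-key": api_key},
        method="POST",
    )

# Placeholder host, flow ID, and key.
req = build_run_request("http://localhost:7860", "my-flow-id",
                        "Summarize our refund policy", "PLACEHOLDER_KEY")
print(req.get_method(), req.full_url)
# Against a running server: urllib.request.urlopen(req).read()
```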

Advanced Agentic AI Features

The platform’s Agent component lets workflows call tools, manage session memory, and coordinate multiple agents. With Tool Mode, any component, whether a calculator or a web search tool, can be exposed as a standalone tool. Built-in session management keyed by session_id keeps conversations separate across users and applications.
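
Conceptually, keying memory by session_id is what keeps conversations distinct. A minimal in-memory sketch of the idea follows; `SessionMemory` and its `max_turns` window are hypothetical and do not reproduce Langflow's own storage.

```python
from collections import defaultdict

class SessionMemory:
    """Hypothetical per-session chat history, keyed by session_id."""

    def __init__(self, max_turns: int = 20):
        self.max_turns = max_turns
        self._history: dict[str, list[tuple[str, str]]] = defaultdict(list)

    def add(self, session_id: str, role: str, text: str) -> None:
        history = self._history[session_id]
        history.append((role, text))
        del history[:-self.max_turns]  # keep only the most recent turns

    def get(self, session_id: str) -> list[tuple[str, str]]:
        return list(self._history.get(session_id, []))

memory = SessionMemory(max_turns=2)
memory.add("alice", "user", "What is RAG?")
memory.add("bob", "user", "Reset my password")
memory.add("alice", "assistant", "Retrieval-augmented generation.")
memory.add("alice", "user", "Give an example")
print(memory.get("alice"))  # only Alice's last two turns
print(memory.get("bob"))    # Bob's turn never leaks into Alice's session
```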

Built for Scalability

Langflow can be deployed with Docker, on cloud platforms, or on its free cloud tier. Typical production setups use 2–4 CPU cores and 4–8 GB of RAM, scaling to 8+ cores with GPU support for resource-intensive tasks. For monitoring at scale, Langflow integrates with observability tools such as LangSmith, Langfuse, and Prometheus.

3. Flowise

Flowise is a visual-first platform for building AI workflows. It offers three builders for different levels of expertise: Assistant for beginners, Chatflow for single-agent systems and RAG applications, and Agentflow for multi-agent orchestration. This tiered approach lets organizations start with simple workflows and expand their AI capabilities as their requirements grow.

Ease of Prototyping and Deployment

Flowise's visual editor streamlines the process of creating and deploying production-ready workflows. According to David Micotto, Senior Director of DX & AI:

"Flowise has truly changed how we approach AI. It's simple enough to prototype an idea in minutes, yet powerful enough to take all the way to production."

The platform supports self-hosted, cloud-based, and air-gapped deployments. The free tier ($0/month) includes 2 flows and 100 predictions per month. The Starter plan costs $35/month and provides unlimited flows and 10,000 predictions, while the Pro plan costs $65/month and adds features such as SSO and RBAC, with 50,000 predictions.
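
The tier limits above can be turned into a quick plan check. This sketch uses only the figures quoted in this section and assumes that Pro, like Starter, has no flow limit and that only Pro includes SSO/RBAC; confirm current pricing with Flowise before relying on it.

```python
from typing import Optional

# (plan, USD per month, max flows or None for unlimited, predictions per month, SSO/RBAC)
PLANS = [
    ("Free", 0, 2, 100, False),
    ("Starter", 35, None, 10_000, False),
    ("Pro", 65, None, 50_000, True),  # flow limit assumed unlimited, like Starter
]

def cheapest_plan(flows: int, predictions: int, need_sso: bool = False) -> Optional[str]:
    """Return the lowest-priced plan that covers the expected monthly usage."""
    for name, _price, max_flows, max_predictions, has_sso in sorted(PLANS, key=lambda p: p[1]):
        fits_flows = max_flows is None or flows <= max_flows
        if fits_flows and predictions <= max_predictions and (has_sso or not need_sso):
            return name
    return None  # usage exceeds the tiers listed here

print(cheapest_plan(flows=1, predictions=80))                    # Free
print(cheapest_plan(flows=5, predictions=8_000))                 # Starter
print(cheapest_plan(flows=5, predictions=8_000, need_sso=True))  # Pro
```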

Support for Agentic AI Capabilities

Flowise’s AgentFlow V2 architecture introduces granular nodes for defining detailed workflow sequences. It supports both "Supervisor" and "Worker" agent patterns, so the platform can handle complex, multi-step tasks that go beyond standard automation. A Human Input node lets a workflow pause for feedback and then resume where it left off.
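
The Supervisor/Worker pattern with a human checkpoint can be sketched in plain Python: the supervisor routes each subtask to a named worker, and a generator pauses at the human-input step and resumes when feedback is sent in. The worker functions and the pause mechanism are hypothetical stand-ins for Flowise's nodes, not its API.

```python
from typing import Callable, Generator

# Hypothetical workers, each handling one kind of subtask.
WORKERS: dict[str, Callable[[str], str]] = {
    "research": lambda task: f"notes on {task}",
    "write": lambda task: f"draft about {task}",
}

def supervisor(plan: list[tuple[str, str]]) -> Generator[str, str, list[str]]:
    """Route each (worker, task) step, then pause for human review of the last output."""
    results = [WORKERS[worker](task) for worker, task in plan]
    feedback = yield results[-1]  # Human Input step: pause until feedback arrives
    if feedback != "approve":
        last_task = plan[-1][1]
        results.append(WORKERS["write"](f"{last_task} (revised: {feedback})"))
    return results

run = supervisor([("research", "pricing"), ("write", "pricing")])
print("Needs review:", next(run))       # the workflow is now paused
try:
    run.send("add a comparison table")  # resume with the reviewer's feedback
except StopIteration as finished:
    print(finished.value)
```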

Tyler Merritt, CTO at UneeQ, shared his experience:

"Flowise has been able to dramatically decrease the resources required to deploy our digital human experiences."

In addition to internal workflow management, Flowise integrates effortlessly with external systems, further enhancing its versatility.

Integration with Enterprise Workflows

Flowise connects to over 100 data sources and vector databases using HTTP nodes and MCP. For enterprise security requirements, it includes Role-Based Access Control (RBAC), Single Sign-On (SSO), encrypted credentials, and domain restrictions. Dr. Fethi Filali of QmicQatar incorporated Flowise into the "iFleet" product, using LLM function-calling to improve the performance and efficiency of its copilot feature.

Scalability for Large-Scale Applications

Built with enterprise-level demands in mind, Flowise supports both vertical and horizontal scaling. Horizontal scaling is achieved through the use of message queues and workers, providing the flexibility to handle high-throughput tasks. The platform also ensures observability at scale by offering full execution traces and integrating with external monitoring tools like Prometheus and OpenTelemetry. By August 2023, Flowise had earned 12,000 GitHub stars, highlighting its growing popularity among organizations seeking reliable, production-ready AI infrastructure.
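
Queue-based horizontal scaling decouples accepting work from doing it: jobs go onto a shared queue and any number of workers pull from it, so throughput grows as workers are added. This single-process sketch uses Python's standard `queue` and `threading` modules, with upper-casing as a stand-in for running a flow; in a real deployment the queue would typically be an external message broker and the workers separate processes or machines.

```python
import queue
import threading

def run_workers(jobs: list[str], num_workers: int = 3) -> dict[str, str]:
    tasks: queue.Queue = queue.Queue()
    results: dict[str, str] = {}
    lock = threading.Lock()

    def worker() -> None:
        while True:
            job = tasks.get()
            if job is None:          # sentinel: no more work for this worker
                tasks.task_done()
                return
            output = job.upper()     # stand-in for executing a flow or LLM call
            with lock:
                results[job] = output
            tasks.task_done()

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for job in jobs:
        tasks.put(job)
    for _ in threads:
        tasks.put(None)              # one sentinel per worker, queued after all jobs
    for t in threads:
        t.join()
    return results

print(run_workers(["summarize ticket 1", "classify ticket 2", "reply to ticket 3"]))
```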

4. n8n

n8n pairs visual workflow building with the ability to inject custom JavaScript or Python, which makes it well suited to integrating AI with complex business systems. With 140,000 GitHub stars and over 1,000 pre-built integrations, it has become a go-to solution for AI-driven automation.

Integration with Enterprise Workflows

n8n is designed to seamlessly connect a variety of business systems, aligning with the growing demand for enterprise AI automation. Its extensive node library and a native HTTP Request node make it easy to integrate with REST APIs and proprietary systems. It also supports the Model Context Protocol (MCP) as both a client and server, allowing external AI systems to directly trigger workflows in n8n.
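
Because MCP messages are JSON-RPC 2.0, an external AI system triggering an n8n workflow exposed as an MCP tool ultimately sends a request like the one built below (after the protocol's initialization handshake, which is omitted here along with the transport). The `tools/call` method and its `name`/`arguments` parameters follow the MCP specification; the `create_support_ticket` tool and its arguments are hypothetical.

```python
import json
from typing import Any

def mcp_tool_call(request_id: int, tool_name: str, arguments: dict[str, Any]) -> str:
    """Serialize an MCP tools/call request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)

# Hypothetical tool exposed by an n8n workflow acting as an MCP server.
print(mcp_tool_call(1, "create_support_ticket", {"customer": "ACME", "priority": "high"}))
```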

For enterprises, security and compliance are crucial, and n8n delivers with features like SOC2 compliance, role-based access control (RBAC), and Git-based deployment tools for versioning and rollbacks. These governance tools ensure workflows meet high standards for security. Users can choose between cloud hosting, priced at $24 per month for 2,500 executions, or self-hosting via Docker/npm for complete control over their data.

Support for Agentic AI Capabilities

n8n also supports advanced AI functionalities, such as autonomous decision-making and multi-agent orchestration, through its AI Agent node. The platform integrates with leading large language model (LLM) providers, including OpenAI, Anthropic, and Azure, as well as local models, offering flexibility for various AI automation needs. Its Human-in-the-Loop (HITL) feature adds an extra layer of oversight by pausing workflows for manual approval, ensuring outputs align with compliance standards before proceeding.

In 2024, SanctifAI adopted n8n to enhance productivity for a workforce of over 400 employees. By using n8n's visual builder instead of writing custom Python code for LangChain, the team created their first workflow in just two hours and tripled their development speed. This efficiency enabled product managers to design and test workflows directly, reducing dependency on engineering resources. Nathaniel Gates, CEO of SanctifAI, highlighted the platform's versatility:

"There's no problem we haven't been able to solve with n8n."

Scalability for Large-Scale Applications

n8n is built to handle demanding workloads, making it a reliable choice for large-scale applications. Its Queue Mode architecture allows for multiple instances with worker nodes to efficiently manage high-volume tasks, supporting up to 220 workflow executions per second. The platform also achieved an 84% integrability score and a 65% codability score, earning the highest combined ratings among workflow automation tools evaluated. This combination of scalability and flexibility makes n8n an excellent option for enterprises needing robust and reliable AI automation infrastructure.
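
To put 220 executions per second in perspective, here is a back-of-the-envelope conversion, assuming that rate could be sustained, plus a worker-count estimate for queue mode. The per-worker rate of 25 executions per second is purely hypothetical; substitute a figure from your own load tests.

```python
import math

PEAK_EXECUTIONS_PER_SECOND = 220  # figure quoted above

def capacity(per_second: float) -> dict[str, float]:
    """Convert a sustained per-second rate into larger time windows."""
    return {"per_minute": per_second * 60,
            "per_hour": per_second * 3_600,
            "per_day": per_second * 86_400}

def workers_needed(target_per_second: float, per_worker_per_second: float) -> int:
    """Round up: a fractional worker still means one more instance."""
    return math.ceil(target_per_second / per_worker_per_second)

print(capacity(PEAK_EXECUTIONS_PER_SECOND))  # {'per_minute': 13200, 'per_hour': 792000, 'per_day': 19008000}
print(workers_needed(PEAK_EXECUTIONS_PER_SECOND, per_worker_per_second=25))  # 9
```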

5. LangChain

LangChain is an open-source framework that’s become a go-to tool for building LLM (Large Language Model) applications. Big names like Rakuten, Cisco, and Moody's use it for critical workflows. Released under the MIT license, LangChain offers over 1,000 integrations with models, tools, and databases. Plus, connecting to major LLM providers like OpenAI, Anthropic, and Google takes fewer than 10 lines of code.

Integration with Enterprise Workflows

LangChain simplifies how businesses integrate AI into their systems. Acting as middleware, it provides a standardized interface that lets companies swap components without rewriting their application logic. It works with cloud services such as Amazon Bedrock for hosting models, Amazon Kendra for internal search, and Amazon SageMaker JumpStart for machine learning operations.

One standout feature is its Retrieval-Augmented Generation (RAG) workflows, which integrate LLMs with proprietary data sources. This not only reduces the risk of model hallucinations but also boosts response accuracy for enterprise-specific tasks.
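
The core of a RAG workflow is to retrieve the passages most relevant to a query and place them in the prompt so the model answers from them. In the sketch below, simple word-overlap scoring over an in-memory list stands in for the embedding search and vector store a LangChain retriever would normally use; the documents and helper names are hypothetical.

```python
import re
from collections import Counter

def tokenize(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Rank documents by shared query terms (a stand-in for vector similarity)."""
    q = tokenize(query)
    scored = [(sum((tokenize(d) & q).values()), d) for d in docs]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for score, d in scored[:k] if score > 0]

def build_prompt(query: str, docs: list[str]) -> str:
    context = "\n".join(f"- {d}" for d in retrieve(query, docs))
    return (
        "Answer using only the context below. If the answer is not there, say you don't know.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )

DOCS = [
    "Refunds are issued within 14 days of a return.",
    "Our office is closed on public holidays.",
    "Returns require the original receipt.",
]
print(build_prompt("How many days until refunds are issued after a return?", DOCS))
```

Grounding the prompt in retrieved text, and telling the model to admit when the context lacks an answer, is what reduces hallucination in this pattern.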

For production-ready deployments, LangServe converts LangChain workflows into REST APIs, while LangSmith offers tools to monitor runtime metrics and trace execution paths. Middleware options allow developers to extend agent behavior, adding features like human-in-the-loop approvals, conversation compression, or the removal of sensitive data. With standardized interfaces, LangChain enables complex automated workflows, from user queries to final model outputs.

Support for Agentic AI Capabilities

LangChain’s Agent module empowers LLMs to take actions using tools, monitor outcomes, and achieve specific goals. These agents can connect to various tools - like web search, calculators, Python REPLs, and custom APIs - allowing interaction with external data and systems. For more sophisticated workflows, LangGraph offers a low-level orchestration framework. It supports features like stateful multi-agent applications, explicit branching, and human-in-the-loop interactions.
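
The agent pattern described here is a loop: the model either picks a tool and its input or returns a final answer, and each tool result is fed back as an observation. In this sketch the "model" is a hard-coded stub (`fake_model`) so the loop runs without an API key; a real LangChain agent delegates that decision to an LLM and uses LangChain's own tool abstractions, which are not reproduced here.

```python
from typing import Callable

TOOLS: dict[str, Callable[[str], str]] = {
    # Hypothetical calculator tool: multiplies two numbers written as "a * b".
    "multiply": lambda arg: str(float(arg.split("*")[0]) * float(arg.split("*")[1])),
}

def fake_model(question: str, observations: list[str]) -> tuple[str, str]:
    """Stub for an LLM: returns (tool_name, tool_input) or ("final", answer)."""
    if not observations:
        return ("multiply", "12 * 7.5")
    return ("final", f"The total is {observations[-1]}.")

def run_agent(question: str, max_steps: int = 5) -> str:
    observations: list[str] = []
    for _ in range(max_steps):
        action, payload = fake_model(question, observations)
        if action == "final":
            return payload
        observations.append(TOOLS[action](payload))  # run the tool, record its result
    return "Stopped: step limit reached."

print(run_agent("What do 12 items at $7.50 cost?"))  # The total is 90.0.
```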

"Use LangGraph, our low-level agent orchestration framework and runtime, when you have more advanced needs that require a combination of deterministic and agentic workflows, heavy customization, and carefully controlled latency." - LangChain Documentation

Scalability for Large-Scale Applications

LangChain is built to scale. Using LangGraph, it handles advanced state management while minimizing latency. In production, enterprises typically deploy multiple replicas behind a load balancer, leveraging Kubernetes and Helm charts for horizontal scaling. For large-scale applications, it’s recommended to externalize state to databases like PostgreSQL, ensuring conversation states persist across sessions and enabling scaling across multiple instances.
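
Externalizing state means any replica can continue a conversation, because history lives in a shared database rather than in process memory. This sketch uses the standard-library `sqlite3` module as a stand-in for PostgreSQL, with two `Replica` objects sharing one connection; the schema and class are hypothetical, and a LangGraph deployment would typically use one of its database-backed checkpointers instead.

```python
import sqlite3

def connect_shared_store(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS messages ("
        " thread_id TEXT, seq INTEGER PRIMARY KEY AUTOINCREMENT, role TEXT, content TEXT)"
    )
    return conn

class Replica:
    """A stateless app instance: all conversation state lives in the shared store."""

    def __init__(self, name: str, store: sqlite3.Connection):
        self.name, self.store = name, store

    def handle(self, thread_id: str, user_text: str) -> list[tuple[str, str]]:
        with self.store:  # commit the insert as one transaction
            self.store.execute(
                "INSERT INTO messages (thread_id, role, content) VALUES (?, ?, ?)",
                (thread_id, "user", user_text),
            )
        rows = self.store.execute(
            "SELECT role, content FROM messages WHERE thread_id = ? ORDER BY seq", (thread_id,)
        )
        return rows.fetchall()

store = connect_shared_store()
a, b = Replica("replica-a", store), Replica("replica-b", store)
a.handle("thread-42", "Hi, I need help with an invoice.")
print(b.handle("thread-42", "It's invoice 1001."))  # replica B sees the full history
```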

Its model-agnostic design is another big win - it avoids vendor lock-in, making it easy to switch between LLM providers without rewriting agent logic.
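
Model-agnostic design comes down to coding the agent against a small interface and injecting the provider. A minimal sketch using `typing.Protocol`; `EchoProvider` and `UppercaseProvider` are hypothetical stand-ins for real provider clients.

```python
from typing import Protocol

class ChatModel(Protocol):
    def complete(self, prompt: str) -> str: ...

class EchoProvider:
    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"

class UppercaseProvider:
    def complete(self, prompt: str) -> str:
        return prompt.upper()

def triage_agent(model: ChatModel, ticket: str) -> str:
    """Application logic: unchanged no matter which provider is passed in."""
    return model.complete(f"Classify this ticket: {ticket}")

for provider in (EchoProvider(), UppercaseProvider()):
    print(triage_agent(provider, "Refund not received"))
```

Switching providers then means constructing a different object at startup, not editing `triage_agent`.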

6. LlamaIndex

LlamaIndex takes enterprise AI a step further by connecting large language models (LLMs) directly to corporate data, offering businesses a powerful tool for AI-driven insights.

This open-source data framework is specifically designed to bridge the gap between LLMs and enterprise data. With 4 million monthly downloads and a community of over 1,500 contributors, LlamaIndex has gained the trust of enterprises looking for reliable, production-ready AI solutions. Its LlamaHub ecosystem supports connections to 160+ data sources, enabling seamless integration with APIs, SQL databases, PDFs, and even slide decks.

Integration with Enterprise Workflows

LlamaIndex simplifies enterprise AI processes by offering managed services through LlamaCloud. These services include LlamaParse for document parsing, LlamaExtract for data extraction, and automated indexing pipelines that sync with platforms like SharePoint, Google Drive, and S3. For industries like legal and finance, where complex PDFs are the norm, LlamaParse transforms unstructured data into AI-ready formats like Markdown or JSON.

Its event-driven Workflows system allows developers to create multi-step processes that can pause and resume as needed, making it ideal for dynamic enterprise environments. For example, in 2025, Salesforce's Agentforce Team used LlamaIndex and LlamaParse to build Retrieval-Augmented Generation (RAG) applications. These tools handled intricate documents with hierarchical structures and tables, leveraging Vision Language Models (VLMs) for exceptional accuracy.

"LlamaIndex's framework gave us the flexibility we needed to quickly prototype and deploy production-ready RAG applications... What really stands out is how seamlessly we could customize the retrieval pipeline for our specific use cases while maintaining enterprise-grade performance." - Salesforce Agentforce Team

The llama_deploy tool further enhances integration by converting workflows into microservices, making it easy to embed AI capabilities into existing systems. Its asynchronous design ensures smooth compatibility with Python web frameworks like FastAPI, enabling high-performance applications.

Ease of Prototyping and Deployment

LlamaIndex is designed for simplicity. Developers can kickstart projects with just five lines of code using its high-level API, significantly speeding up the prototyping process. LlamaCloud sweetens the deal with 10,000 free credits per month for document parsing and extraction, along with flexible SaaS and self-hosted plans for larger enterprise needs.

The Workflows framework also supports type-safe programs, offering precise control over automation. Features like human-in-the-loop checkpoints ensure reliability for critical enterprise applications.
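
The event-driven Workflows described earlier chain steps through typed events: each step consumes one event type and emits the next, which is also what makes pausing and resuming between steps possible. The dispatcher below is a hypothetical plain-Python illustration of that model, not LlamaIndex's `Workflow` API, which uses decorated async step methods.

```python
from dataclasses import dataclass

@dataclass
class StartEvent:
    query: str

@dataclass
class RetrievedEvent:
    query: str
    passages: list[str]

@dataclass
class StopEvent:
    result: str

def retrieve_step(ev: StartEvent) -> RetrievedEvent:
    return RetrievedEvent(ev.query, [f"passage about {ev.query}"])  # stand-in retriever

def synthesize_step(ev: RetrievedEvent) -> StopEvent:
    return StopEvent(f"Answer to '{ev.query}' using {len(ev.passages)} passage(s)")

# Each step is registered for the event type it consumes.
STEPS = {StartEvent: retrieve_step, RetrievedEvent: synthesize_step}

def run(event, trace: list[str]) -> str:
    while not isinstance(event, StopEvent):
        trace.append(type(event).__name__)  # a run could be persisted here and resumed later
        event = STEPS[type(event)](event)
    return event.result

steps_seen: list[str] = []
print(run(StartEvent("termination clauses"), steps_seen))
print(steps_seen)  # ['StartEvent', 'RetrievedEvent']
```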

Scalability for Large-Scale Applications

LlamaIndex is built to scale, making it a strong choice for high-demand environments. It integrates with 40+ vector store backends, giving organizations the flexibility to choose storage solutions based on their data volume. LlamaCloud's managed services handle the heavy lifting for document parsing and retrieval pipelines, freeing enterprises from infrastructure maintenance.

The LlamaHub connector library simplifies large-scale data ingestion, while stateful and resumable workflows ensure that even complex AI processes can handle interruptions without a hitch. This combination of scalability, managed services, and flexible deployment options makes LlamaIndex suitable for everything from quick prototypes to production systems handling millions of requests.

7. DSPy

DSPy reimagines how AI systems are built by treating prompts as programmable logic. This high-code orchestration framework offers developers complete control over memory management, execution paths, and tool usage. It’s a strong fit for organizations that need precise backend control for their systems.

Integration with Enterprise Workflows

With DSPy, language models move beyond being simple responders and become part of integrated systems. Engineers can design and manage complex workflows that go far beyond single LLM prompts. This includes tasks like routing, tool execution, and delegating responsibilities across multiple roles. For organizations with advanced backend infrastructures, DSPy enables a shift from basic LLM usage to sophisticated systems capable of coordinating intricate tasks and connecting with external APIs.

"AI agent platforms enable the creation of a prompt sequence flow without manually stitching every step together." - Patronus AI

One of DSPy’s standout features is its programmatic approach, which separates high-level application logic from specific LLM implementations. This means developers can swap out models without needing to rewrite the application logic, making it easier to adapt to new technologies. This flexibility is especially useful for handling complex, large-scale deployments.

Scalability for Large-Scale Applications

DSPy’s architecture is designed to handle the rigorous demands of enterprise-level deployments. It provides engineers with granular control over memory and execution paths, which is essential for scaling applications that process large volumes of requests. This control allows for multi-step logic and precise resource allocation, making it well-suited for enterprise environments.

As a high-code solution, DSPy offers the flexibility to build durable, long-running agents capable of meeting enterprise-scale execution needs. It’s particularly beneficial for organizations that value deep integration with their existing technology stacks, even if it comes at the cost of rapid prototyping. Teams with strong engineering expertise can use DSPy to ensure their AI systems remain reliable, even under heavy workloads.

Ease of Prototyping and Deployment

DSPy lets developers define application logic through signatures, declarative specifications of a module's inputs and outputs, and uses optimizers to tune prompts automatically against an evaluation metric. This approach makes it easier for teams familiar with high-code environments to prototype and deploy systems. However, DSPy’s complexity and resource demands make it better suited for custom, large-scale projects than for quick, lightweight demos.
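
DSPy's central idea is that you declare what a module should do (a signature such as question -> answer) and let an optimizer search for the prompt that scores best on a metric over example data. The toy sketch below mimics that loop with a stub model and a handful of candidate instructions; `Signature`, `fake_lm`, and the instruction-search optimizer are hypothetical simplifications, not DSPy's API (which provides `dspy.Signature`, modules such as `dspy.Predict`, and optimizers that can also bootstrap few-shot examples).

```python
from dataclasses import dataclass

@dataclass
class Signature:
    """Declarative spec: named inputs and outputs plus an instruction to be tuned."""
    inputs: tuple[str, ...]
    outputs: tuple[str, ...]
    instruction: str = ""

def fake_lm(instruction: str, question: str) -> str:
    """Stub model: answers tersely only when the instruction asks for it."""
    answer = {"capital of France?": "Paris", "2 + 2?": "4"}.get(question, "unknown")
    return answer if "only the answer" in instruction else f"I think the answer is {answer}."

def exact_match(prediction: str, gold: str) -> bool:
    return prediction.strip() == gold

def optimize(sig: Signature, candidates: list[str], devset: list[tuple[str, str]]) -> Signature:
    """Return a copy of the signature using the instruction that scores best on the dev set."""
    def score(instruction: str) -> float:
        return sum(exact_match(fake_lm(instruction, q), gold) for q, gold in devset) / len(devset)
    return Signature(sig.inputs, sig.outputs, max(candidates, key=score))

qa = Signature(inputs=("question",), outputs=("answer",))
dev = [("capital of France?", "Paris"), ("2 + 2?", "4")]
tuned = optimize(qa, ["Answer the question.", "Reply with only the answer."], dev)
print(tuned.instruction)  # Reply with only the answer.
```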

8. Haystack

Haystack stands out as a production-focused framework designed to integrate AI systems smoothly into enterprise environments. With 23,700 GitHub stars and recognition as a 2024 Gartner Cool Vendor in AI Engineering, it offers developers the tools to connect retrievers, generators, and rankers into dynamic pipelines capable of handling complex business logic with loops and branches.

Integration with Enterprise Workflows

Haystack connects effortlessly with leading AI platforms like OpenAI, Anthropic, and Hugging Face, while also supporting over 40 vector databases, including Pinecone, Milvus, and Elasticsearch. This ensures flexibility when working with different vendors. Pipelines can be deployed as REST APIs through Hayhooks or as Model Context Protocol (MCP) Servers, making them accessible to other enterprise systems.

For added functionality, the Haystack Enterprise Platform includes a visual pipeline design tool, secure access controls, and governance features, simplifying data management and testing for teams of all sizes.

"Haystack's modular framework gives you full visibility to inspect, debug, and optimize every decision your AI makes." - Haystack

Haystack also integrates with low-code platforms like n8n, enabling organizations to combine AI-driven decision-making with traditional workflow automation. Its built-in logging, monitoring, and tracing capabilities ensure full observability, making it a reliable choice for production environments.

Scalability for Large-Scale Applications

Haystack’s architecture is designed to handle advanced retrieval tasks across millions of documents without unnecessary complexity. Its pipelines are fully serializable, cloud-agnostic, and Kubernetes-ready, making them adaptable for a wide range of enterprise use cases. Whether you're prototyping or scaling up to production, the framework's composable design ensures consistency at every stage.
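
Serializable, composable pipelines mean the same definition can be stored, shipped, and rebuilt in another environment. The sketch below illustrates the idea with a hypothetical linear pipeline (a keyword retriever followed by a ranker) built from a component registry and round-tripped through JSON; it is not Haystack's `Pipeline` API, which supports branching and loops and serializes to YAML.

```python
import json
from typing import Callable

Component = Callable[[list[str]], list[str]]

# Hypothetical registry: component type name -> factory that takes init params.
REGISTRY: dict[str, Callable[..., Component]] = {
    "keyword_retriever": lambda keyword: (lambda docs: [d for d in docs if keyword in d.lower()]),
    "length_ranker": lambda top_k: (lambda docs: sorted(docs, key=len)[:top_k]),
}

def build(spec: list[dict]) -> Component:
    """Instantiate each component from its spec and chain them in order."""
    components = [REGISTRY[c["type"]](**c["params"]) for c in spec]

    def pipeline(docs: list[str]) -> list[str]:
        for component in components:
            docs = component(docs)
        return docs

    return pipeline

spec = [
    {"type": "keyword_retriever", "params": {"keyword": "invoice"}},
    {"type": "length_ranker", "params": {"top_k": 1}},
]
serialized = json.dumps(spec)            # store or ship the pipeline definition
rebuilt = build(json.loads(serialized))  # recreate it elsewhere from the same spec
docs = ["Invoice 17 is overdue by 30 days.", "Invoice 9 paid.", "Team lunch on Friday."]
print(rebuilt(docs))  # ['Invoice 9 paid.']
```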

Ease of Prototyping and Deployment

Starting with the free, open-source core framework, developers can prototype AI workflows in Python and seamlessly transition to enterprise infrastructure using the same components. The Haystack Enterprise Starter provides templates and deployment guides to streamline the shift from proof-of-concept to full-scale production. Additionally, built-in tracing and evaluation tools help verify the reliability of pipelines before deployment.

9. AutoGen

AutoGen offers a distinctive approach to tackling complex business challenges by leveraging a multi-agent orchestration model. With this framework, various AI agents - such as planners, researchers, and executors - work together autonomously to address intricate problems in business environments.

Support for Agentic AI Capabilities

What sets AutoGen apart is its ability to coordinate multiple specialized agents. These agents communicate through structured protocols and strictly defined APIs, which makes their outcomes more consistent and predictable. The framework also provides debugging and observability tools that show how agents interact. This control matters more as adoption grows: the agentic AI market is forecast to grow from $13.81 billion in 2025 to $140.80 billion by 2032, and Gartner predicts that by 2028 roughly 33% of enterprise software will incorporate agentic capabilities.

Integration with Enterprise Workflows

Built on Python, AutoGen gives developers extensive control over agent behavior, memory management, and tool integration. It also works seamlessly with observability platforms like LangSmith, enabling detailed tracing and debugging of agent activities - features that are vital for maintaining enterprise-level reliability. This integration ensures that technical precision aligns with operational demands. However, enterprises deploying autonomous loops in production should consider adding external safety measures to prevent risks like cascading errors or excessive token usage.
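
One form such an external safety measure can take is a guard around the agent conversation that stops on completion, a turn limit, a token budget, or accumulated errors. The sketch below is hypothetical: the `Message` type, the whitespace token estimate, and the thresholds are assumptions, and it wraps plain callables rather than AutoGen's agent classes (AutoGen also offers its own configurable termination conditions).

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Message:
    sender: str
    content: str
    is_error: bool = False

Agent = Callable[[list[Message]], Message]

def estimate_tokens(text: str) -> int:
    return len(text.split())  # crude proxy; real systems use the model's tokenizer

def guarded_chat(agents: list[Agent], task: str, max_turns: int = 10,
                 token_budget: int = 500, max_errors: int = 2) -> tuple[list[Message], str]:
    """Let agents take turns, stopping on completion or when any limit is hit."""
    history = [Message("user", task)]
    tokens, errors = estimate_tokens(task), 0
    for turn in range(max_turns):
        reply = agents[turn % len(agents)](history)
        history.append(reply)
        tokens += estimate_tokens(reply.content)
        errors += reply.is_error
        if "TERMINATE" in reply.content:
            return history, "done"
        if tokens > token_budget:
            return history, "token budget exceeded"
        if errors >= max_errors:
            return history, "error limit reached"  # stop errors from cascading
    return history, "turn limit reached"

def planner(history: list[Message]) -> Message:
    return Message("planner", "next step: fetch sales data")

def executor(history: list[Message]) -> Message:
    return Message("executor", "error: API timeout", is_error=True)

history, reason = guarded_chat([planner, executor], "Compile the weekly report")
print(reason, len(history))  # error limit reached 5
```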

Scalability for Large-Scale Applications

AutoGen is designed for scalability, supporting cloud-ready deployments and horizontal scaling through Kubernetes and Docker. While the framework itself is open source and free, organizations need to budget for LLM token usage and the necessary infrastructure. The performance of AutoGen largely depends on the language model selected, allowing teams to balance latency and accuracy based on their specific needs.

Ease of Prototyping and Deployment

Although AutoGen has a steeper learning curve, it’s a powerful tool for teams well-versed in Python development. To simplify prototyping, AutoGen Studio offers a visual interface that helps teams monitor and fine-tune agent interactions before rolling out to production. This makes it an ideal choice for high-stakes, real-time, and highly customized applications.

10. LangGraph

LangGraph takes a graph-based approach to building AI agents, which suits intricate, stateful workflows. Instead of a linear sequence, it uses nodes and edges to express branching logic, loops, and conditional paths. This is especially useful when decisions depend on earlier steps or require human intervention, and it gives developers fine-grained control over how a workflow runs.
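
The nodes-and-edges model can be illustrated with a small state machine: nodes are functions that update a shared state, and conditional edges choose the next node from that state, which is what makes loops such as "redraft until review passes" possible. This is a hypothetical plain-Python sketch, not LangGraph's `StateGraph` API.

```python
from typing import Callable

State = dict
END = "__end__"

def draft(state: State) -> State:
    attempt = state["attempts"] + 1
    return {**state, "attempts": attempt, "text": f"draft v{attempt}"}

def review(state: State) -> State:
    # Hypothetical reviewer: accepts the third draft.
    return {**state, "approved": state["attempts"] >= 3}

NODES: dict[str, Callable[[State], State]] = {"draft": draft, "review": review}
EDGES: dict[str, Callable[[State], str]] = {
    "draft": lambda s: "review",                            # fixed edge
    "review": lambda s: END if s["approved"] else "draft",  # conditional edge (loop)
}

def run_graph(entry: str, state: State, max_steps: int = 20) -> State:
    node = entry
    for _ in range(max_steps):  # guard against infinite loops
        state = NODES[node](state)
        node = EDGES[node](state)
        if node == END:
            return state
    raise RuntimeError("step limit reached")

print(run_graph("draft", {"attempts": 0}))
```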

Support for Agentic AI Capabilities

By July 2025, LangGraph had hit 7 million downloads on PyPI, highlighting its growing popularity in production-grade environments. One standout feature is its checkpoint system, which pauses workflows for human review - an essential tool in industries where automated decisions must be reviewed for compliance. Another useful feature is its "time travel" capability, allowing developers to rewind workflows, debug errors, or explore alternative logic paths.

"LangGraph enables granular control over the agent's thought process, which has empowered us to make data-driven and deliberate decisions to meet the diverse needs of our guests." - Andres Torres, Sr. Solutions Architect

Integration with Enterprise Workflows

LangGraph's advanced capabilities make it a natural fit for enterprise environments. It integrates seamlessly with tools like LangSmith, providing clear visibility into multi-step agent interactions. Its modular design allows developers to create reusable subgraphs, which is particularly helpful as business logic grows more complex. Additionally, its persistence layer ensures agents maintain context across sessions, a key feature for personalized customer experiences and long-term projects.
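
The persistence layer, and the checkpoint and "time travel" features described earlier, rest on saving a snapshot of state after every step: a run can stop at a checkpoint for human review, and an earlier snapshot can seed a new branch with a different decision. A hypothetical sketch of that mechanism follows; `CheckpointedRun` is illustrative and not LangGraph's checkpointer API.

```python
import copy

class CheckpointedRun:
    """Keeps a snapshot of state after every step so earlier steps can be revisited."""

    def __init__(self, initial_state: dict):
        self.history = [copy.deepcopy(initial_state)]

    @property
    def state(self) -> dict:
        return copy.deepcopy(self.history[-1])

    def step(self, update: dict) -> None:
        self.history.append({**self.state, **update})  # new checkpoint

    def fork(self, checkpoint: int) -> "CheckpointedRun":
        """Time travel: branch from an earlier checkpoint, leaving this run untouched."""
        branch = CheckpointedRun(self.history[checkpoint])
        branch.history = copy.deepcopy(self.history[:checkpoint + 1])
        return branch

run = CheckpointedRun({"claim": "C-17", "status": "new"})
run.step({"status": "auto_approved", "amount": 12_000})
# A reviewer rejects the automated decision, so branch from checkpoint 0 and redo the step.
branch = run.fork(0)
branch.step({"status": "needs_manager_review", "amount": 12_000})
print(branch.state)
print(run.state["status"])  # auto_approved: the original path is preserved
```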

Scalability for Large-Scale Applications

LangGraph is built to handle enterprise-level demands, using horizontally scalable servers and task queues to manage heavy workloads efficiently. It offers flexible deployment options: fully managed cloud SaaS, hybrid setups with self-hosted data planes in a VPC, or on-premises infrastructure. The LangGraph library itself is open source; managed deployment starts at $39 per month with the Plus plan, which includes cloud deployment and LangSmith access. Enterprise plans come with custom pricing and dedicated support.

Ease of Prototyping and Deployment

LangGraph emphasizes a code-first approach, requiring knowledge of Python or JavaScript, which means it might take some time to learn compared to visual builders. To simplify things, LangGraph Studio offers a visual IDE that lets developers map out state flows and interact with agents in real time. A great example of its potential: in June 2025, Orangeloops used LangGraph's state machine architecture to create "OLivIA", an HR assistant for Slack. Tests showed it excelled at handling complex reasoning tasks.

Feature Comparison Table

Choose the right LLM tool based on your specific needs - whether it's automation, prototyping, or managing multi-agent systems. The table below highlights the key features of each platform, offering a quick reference to help you make an informed decision.

| Tool | Primary Use Case | Integration Capabilities | Agentic Workflow Support | Scalability | Starting Price | Best For |
|---|---|---|---|---|---|---|
| NAITIVE AI Platform | Custom AI solutions & autonomous agents | Enterprise-grade custom integrations | Advanced multi-agent orchestration with human-in-the-loop | Enterprise-grade scale | Custom pricing | Businesses seeking tailored AI transformation |
| Langflow | RAG prototyping & AI pipeline experimentation | Hundreds of pre-built components with native MCP support | Visual IDE for multi-agent systems | Production-grade scaling | Free (open-source) | AI engineers fine-tuning RAG strategies |
| Flowise | Rapid chatbot deployment | LangChain connectors, three specialized builders | Multi-agent conversations with state management | 30% faster RAG processing than competitors | Free (open-source), Cloud at $35/month | Rapid deployment of chat solutions |
| n8n | Business process automation | 1,000+ pre-built integrations, MCP client/server | Custom JavaScript/Python code nodes | Handles up to 12,000 records per minute | Free (self-hosted), Cloud at $24/month | Connecting AI to existing business apps |
| LangChain | Flexible AI application development | Extensive ecosystem of integrations | Modular components for agent building | Horizontal scaling capabilities | Free (open-source) | Developers building custom LLM applications |
| LlamaIndex | Data-heavy LLM applications | 40+ vector store integrations | Advanced indexing for complex queries | Enterprise data processing | Free (open-source) | Industry solutions requiring sophisticated data indexing |
| DSPy | Optimizing LLM prompts programmatically | API-based model connections | Automated prompt optimization | Research-grade experimentation | Free (open-source) | Teams optimizing prompt performance systematically |
| Haystack | Production-ready search & RAG systems | Modular retrieval techniques like HyDE | End-to-end pipeline orchestration | Production-grade architecture | Free (open-source) | Building advanced search and retrieval systems |
| AutoGen | Multi-agent collaboration | Microsoft ecosystem integration | Autonomous agent conversations with minimal intervention | Cloud-native scaling | Free (open-source) | Complex tasks requiring agent collaboration |
| LangGraph | Complex stateful workflows | LangChain ecosystem, LangSmith integration | Graph-based architecture with checkpoints | Horizontally scalable servers and task queues | Free (open-source); Plus at $39/month, Enterprise custom | Workflows requiring human approval steps |

Key considerations:

n8n, rated 4.9/5 on G2, has helped companies such as Delivery Hero save significant time in production workflows. Flowise is noted for its deployment speed, while LangGraph offers precise control over stateful processes.

"We have seen drastic efficiency improvements since we started using n8n for user management. It's incredibly powerful, but also simple to use."

- Dennis Zahrt, Director of Global IT Service Delivery, Delivery Hero

When selecting a platform, weigh what matters most to your organization: Langflow specializes in visual prototyping, n8n in business automation, Flowise in deployment speed, and LangGraph in precise orchestration control. If your organization requires a fully customized solution with dedicated support, the NAITIVE AI Platform can address complex industry challenges through enterprise-level AI transformation.

This comparison provides a clear path to identifying the tool that aligns with your enterprise AI objectives.

Conclusion

When choosing an LLM tool, it's essential to match its capabilities with your team's goals and limitations. Tools like Langflow and n8n are excellent for quick prototyping and enabling non-technical teams, while code-first frameworks such as LangChain and LangGraph provide the precision needed for complex production systems. The choice ultimately depends on your team's expertise, deployment needs, and whether you prioritize speed or customization.

Start by outlining your primary objectives and defining critical requirements like data residency, compliance standards, budget constraints, and integration needs. For industries like finance or healthcare, where sensitive data is involved, self-hosted tools such as n8n or Langflow keep data inside your own infrastructure and can be deployed within a zero-trust architecture, giving you tighter control. Cloud-native solutions, on the other hand, deliver faster deployment with less operational overhead, making them a good fit for teams aiming for quick results.

To future-proof your strategy, select tools that support multiple LLM providers (e.g., OpenAI, Anthropic, Google, or local models via Ollama). This flexibility helps you avoid vendor lock-in and optimize costs as the AI landscape continues to evolve. Keep in mind that usage-based pricing can escalate unpredictably at enterprise scale, so consider whether fixed-tier plans or self-hosted deployments align better with your financial goals.
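One common way to keep provider choice open is to have application code depend on a small interface rather than on any single vendor SDK. The sketch below is illustrative only: `ChatProvider`, `EchoProvider`, and `complete_with_fallback` are hypothetical names, and the stub providers stand in for adapters you would write around each vendor's SDK or a local Ollama server.

```python
from typing import Protocol


class ChatProvider(Protocol):
    """Hypothetical minimal interface that every provider adapter implements."""

    name: str

    def complete(self, prompt: str) -> str: ...


class EchoProvider:
    """Stub adapter; a real one would wrap a vendor SDK or a local model server."""

    def __init__(self, name: str, healthy: bool = True) -> None:
        self.name = name
        self.healthy = healthy

    def complete(self, prompt: str) -> str:
        if not self.healthy:
            raise ConnectionError(f"{self.name} unavailable")
        return f"[{self.name}] {prompt}"


def complete_with_fallback(providers: list[ChatProvider], prompt: str) -> str:
    """Try providers in order of preference and fall through on connection failures."""
    errors: list[str] = []
    for provider in providers:
        try:
            return provider.complete(prompt)
        except ConnectionError as exc:
            errors.append(str(exc))
    raise RuntimeError("all providers failed: " + "; ".join(errors))


if __name__ == "__main__":
    # The hosted provider is simulated as down, so the local model answers.
    providers = [EchoProvider("hosted-a", healthy=False), EchoProvider("local-model")]
    print(complete_with_fallback(providers, "Summarize Q3 churn"))
```

With this shape, switching vendors or adding a cheaper fallback means writing one new adapter rather than touching every call site.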

From the outset, prioritize observability with features like monitoring, token cost tracking, and error reporting. Tools like LangSmith or Langfuse can help you debug agent reasoning, track performance, and maintain accountability as your workflows expand. Often, the success of a project hinges on your ability to trace and resolve issues in real time.
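Hosted tools like LangSmith or Langfuse provide this tracking out of the box. The minimal sketch below only illustrates the underlying bookkeeping for token cost tracking and is not either tool's API; the model names and per-token prices are placeholders, not real rates.

```python
from dataclasses import dataclass

# Placeholder prices in USD per 1,000 tokens; substitute your provider's published rates.
PRICES_PER_1K = {
    "model-small": {"input": 0.0005, "output": 0.0015},
    "model-large": {"input": 0.005, "output": 0.015},
}


@dataclass
class StepUsage:
    input_tokens: int = 0
    output_tokens: int = 0
    cost_usd: float = 0.0


class CostTracker:
    """Accumulates token usage and estimated spend per workflow step."""

    def __init__(self, budget_usd: float) -> None:
        self.budget_usd = budget_usd
        self.steps: dict[str, StepUsage] = {}

    def record(self, step: str, model: str, input_tokens: int, output_tokens: int) -> None:
        price = PRICES_PER_1K[model]
        cost = (input_tokens * price["input"] + output_tokens * price["output"]) / 1000
        usage = self.steps.setdefault(step, StepUsage())
        usage.input_tokens += input_tokens
        usage.output_tokens += output_tokens
        usage.cost_usd += cost
        if self.total_cost() > self.budget_usd:
            # In production this might alert an on-call engineer or halt the workflow.
            print(f"WARNING: spend ${self.total_cost():.4f} exceeds budget ${self.budget_usd:.2f}")

    def total_cost(self) -> float:
        return sum(u.cost_usd for u in self.steps.values())


if __name__ == "__main__":
    tracker = CostTracker(budget_usd=1.00)
    tracker.record("retrieve", "model-small", input_tokens=2000, output_tokens=300)
    tracker.record("answer", "model-large", input_tokens=1500, output_tokens=500)
    for step, usage in tracker.steps.items():
        print(step, usage)
    print(f"total: ${tracker.total_cost():.5f}")
```

Keying usage by workflow step, rather than only by model, makes it easier to see which part of an agent workflow is driving spend.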

Finally, assess how well a platform integrates with your existing workflows. Whether you need n8n’s extensive library of pre-built integrations for automating business processes, Flowise for quick chatbot setup, or the NAITIVE AI Platform for enterprise-grade customization, the right tool depends on both your current needs and your long-term AI strategy.

FAQs

How can I choose the best LLM platform for my business?

Choosing the right large language model (LLM) platform boils down to understanding your business objectives and technical requirements. Start by pinpointing your main use case - whether it's automating customer support, analyzing data, or streamlining workflows. Then, think about how the platform will connect with your current tools, such as CRMs, ERPs, or APIs, to ensure a smooth integration.

Next, assess your team's technical expertise and the level of customization you'll need. If you're looking for a fast and user-friendly setup, low-code platforms like Langflow or Flowise let you create workflows with minimal coding. On the other hand, if your team requires more advanced customization options, code-first frameworks might be the better route. Also, determine if your needs call for a straightforward single-agent setup or a more robust multi-agent system capable of managing complex, interconnected tasks.

To make an informed decision, run a small pilot project. Set aside a modest budget (say, $0 to $500), connect a data source, and evaluate the platform's performance, scalability, and user satisfaction. By following these steps, you can find a platform that meets your business needs while balancing speed, cost, and flexibility.
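One way to compare pilots is a simple weighted scorecard over the criteria above, with the pilot budget as a hard cut-off. This is a minimal sketch: the weights, the 1 to 5 ratings, and the "Platform A/B/C" entries are hypothetical placeholders that your team would replace with its own pilot results.

```python
from dataclasses import dataclass

# Hypothetical weights reflecting one team's priorities; they should sum to 1.0.
WEIGHTS = {"performance": 0.4, "scalability": 0.3, "user_satisfaction": 0.3}


@dataclass
class PilotResult:
    platform: str
    scores: dict[str, float]  # each criterion rated 1-5 by the pilot team
    spend_usd: float


def rank_pilots(results: list[PilotResult], budget_usd: float) -> list[tuple[str, float]]:
    """Drop over-budget pilots, then rank the rest by weighted score (highest first)."""
    if abs(sum(WEIGHTS.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")
    ranked = []
    for result in results:
        if result.spend_usd > budget_usd:
            continue  # exceeded the pilot budget, so exclude it from ranking
        score = sum(weight * result.scores[name] for name, weight in WEIGHTS.items())
        ranked.append((result.platform, round(score, 2)))
    return sorted(ranked, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    pilots = [
        PilotResult("Platform A", {"performance": 4, "scalability": 3, "user_satisfaction": 5}, 320.0),
        PilotResult("Platform B", {"performance": 5, "scalability": 4, "user_satisfaction": 3}, 450.0),
        PilotResult("Platform C", {"performance": 5, "scalability": 5, "user_satisfaction": 5}, 900.0),
    ]
    print(rank_pilots(pilots, budget_usd=500.0))
```

Treating the budget as a filter rather than a weighted criterion reflects the idea that a pilot which overshoots its budget is disqualified, however well it scores.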

What are the benefits of using a multi-agent system in AI workflows?

A multi-agent system breaks down complex workflows into smaller, focused tasks, each managed by individual agents. These agents specialize in areas like document retrieval, fact-checking, or formatting, which helps ensure tasks are handled with precision by using tools and data tailored to each specific need.
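The division of labor described above can be sketched as a pipeline of small, specialized agents that each read and update a shared task object. This is a minimal illustration under stated assumptions: the agents here are plain functions with no model calls, and `CORPUS`, `APPROVED_SOURCES`, and the keyword-matching "retrieval" are hypothetical stand-ins for a real index and fact-checking source.

```python
from dataclasses import dataclass, field

# Hypothetical in-memory corpus of (text, source) pairs standing in for a real vector store.
CORPUS = [
    ("Refunds are processed within 5 business days.", "policy-handbook"),
    ("Refunds take about a month.", "old-forum-post"),
    ("Our office is closed on public holidays.", "policy-handbook"),
]
APPROVED_SOURCES = {"policy-handbook"}  # sources the fact-checker trusts
STOPWORDS = {"a", "are", "do", "how", "is", "on", "our", "the"}


def _words(text: str) -> set[str]:
    """Lowercase, strip punctuation, and drop stopwords."""
    return {w.lower().strip("?.,") for w in text.split()} - STOPWORDS


@dataclass
class Task:
    question: str
    documents: list[tuple[str, str]] = field(default_factory=list)
    verified: list[tuple[str, str]] = field(default_factory=list)
    answer: str = ""


def retrieval_agent(task: Task) -> Task:
    """Finds documents that share a keyword with the question."""
    query = _words(task.question)
    task.documents = [doc for doc in CORPUS if query & _words(doc[0])]
    return task


def fact_check_agent(task: Task) -> Task:
    """Keeps only documents from approved sources."""
    task.verified = [doc for doc in task.documents if doc[1] in APPROVED_SOURCES]
    return task


def formatting_agent(task: Task) -> Task:
    """Turns the first verified document into a cited answer."""
    if task.verified:
        text, source = task.verified[0]
        task.answer = f"{text} (source: {source})"
    else:
        task.answer = "No verified answer found."
    return task


PIPELINE = [retrieval_agent, fact_check_agent, formatting_agent]


def run_pipeline(question: str) -> str:
    task = Task(question)
    for agent in PIPELINE:
        task = agent(task)
    return task.answer


if __name__ == "__main__":
    print(run_pipeline("How long do refunds take?"))
```

Here the retriever finds two candidate answers, and the fact-checker discards the one from an unapproved source before the formatter sees it, so each concern lives in one replaceable function.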

This setup can also cut costs. Because each agent receives only the context its task requires, prompts stay smaller, which can reduce token usage, and simpler tasks can be routed to lighter models instead of relying on a single large one. It also improves efficiency, since independent agents can run simultaneously or be swapped out as requirements evolve. Delegating work to distinct agents also makes it easier to monitor progress and fine-tune individual steps.
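When agents do not depend on each other's output, they can run concurrently. The minimal sketch below uses Python's `asyncio.gather`; the three agents are hypothetical stubs in which `asyncio.sleep` stands in for the latency of a model call.

```python
import asyncio
import time


async def summarize_agent(text: str) -> str:
    await asyncio.sleep(0.2)  # stands in for a model call's network latency
    return f"summary of {len(text.split())} words"


async def sentiment_agent(text: str) -> str:
    await asyncio.sleep(0.2)
    return "negative" if "late" in text.lower() else "neutral"


async def tagging_agent(text: str) -> list[str]:
    await asyncio.sleep(0.2)
    return [tag for tag in ("refund", "delivery") if tag in text.lower()]


async def analyze(text: str) -> dict:
    # The three agents are independent, so they run concurrently, not one after another.
    summary, sentiment, tags = await asyncio.gather(
        summarize_agent(text), sentiment_agent(text), tagging_agent(text)
    )
    return {"summary": summary, "sentiment": sentiment, "tags": tags}


if __name__ == "__main__":
    start = time.perf_counter()
    result = asyncio.run(analyze("My delivery was late and I want a refund"))
    print(result, f"{time.perf_counter() - start:.1f}s elapsed")
```

Because the waits overlap, the run should take roughly 0.2 s rather than the 0.6 s a sequential version would need; with real model calls the saving depends on provider rate limits.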

Thanks to its modular design, a multi-agent system allows for quick adjustments and smooth integration into production. You can add new features or update existing ones without reworking the entire system. For businesses looking to balance flexibility, dependability, and cost-effectiveness in their AI solutions, this approach is well worth considering.

How can I ensure my AI solutions are scalable and integrate seamlessly into existing systems?

To build AI solutions that can grow and integrate seamlessly, start by selecting a platform that supports modular workflows and provides flexible deployment options. Tools like n8n or Langflow make it easier to design multi-step AI workflows with minimal coding. They also offer deployment flexibility, whether you need the security of on-premises hosting or the scalability of cloud-based solutions. This approach simplifies the transition from prototype to production without requiring major adjustments.

If your system involves multiple AI agents, an agent orchestration framework becomes essential. These frameworks handle communication, task delegation, and shared states between agents, ensuring operations remain efficient even as demand increases. To keep things scalable, design agents with specific, focused tasks, use containerization for easy management, and expose their functionality through well-defined APIs. Incorporating monitoring tools can also help track performance and dynamically adjust resources during periods of high activity.
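The orchestration responsibilities above (communication, task delegation, and shared state) can be sketched in a few dozen lines. This is not the API of AutoGen, LangGraph, or any other framework; `Orchestrator`, `AgentRequest`, `AgentResponse`, and the two example agents are hypothetical, and plain functions stand in for agents that in production might run as containerized services behind HTTP APIs.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass(frozen=True)
class AgentRequest:
    """Well-defined input contract shared by all agents."""

    task_type: str
    payload: str


@dataclass(frozen=True)
class AgentResponse:
    agent: str
    output: str


Agent = Callable[[AgentRequest, dict], AgentResponse]


@dataclass
class Orchestrator:
    """Routes requests to registered agents and keeps shared state between steps."""

    agents: dict[str, Agent] = field(default_factory=dict)
    shared_state: dict = field(default_factory=dict)

    def register(self, task_type: str, agent: Agent) -> None:
        self.agents[task_type] = agent

    def delegate(self, request: AgentRequest) -> AgentResponse:
        if request.task_type not in self.agents:
            raise KeyError(f"no agent registered for {request.task_type!r}")
        response = self.agents[request.task_type](request, self.shared_state)
        # Record every result so later agents and monitoring can inspect it.
        self.shared_state.setdefault("history", []).append(response)
        return response


def extract_agent(request: AgentRequest, state: dict) -> AgentResponse:
    # Writes a value into shared state for downstream agents.
    state["customer_id"] = request.payload.split(":")[1].strip()
    return AgentResponse("extract", f"found customer {state['customer_id']}")


def reply_agent(request: AgentRequest, state: dict) -> AgentResponse:
    # Reads a value that another agent wrote to shared state.
    return AgentResponse("reply", f"Drafted reply for customer {state.get('customer_id', 'unknown')}")


if __name__ == "__main__":
    orchestrator = Orchestrator()
    orchestrator.register("extract", extract_agent)
    orchestrator.register("reply", reply_agent)
    orchestrator.delegate(AgentRequest("extract", "ticket from customer: C-1042"))
    print(orchestrator.delegate(AgentRequest("reply", "draft response")).output)
```

Keeping every agent behind the same request/response types means an agent can later be moved into its own service without changing the orchestrator's routing logic.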

Lastly, make sure the platform you choose meets your organization’s compliance and integration requirements. Decide whether a cloud-based or self-hosted solution is the better fit, and confirm it works smoothly with your existing APIs, databases, and authentication systems. By combining modular design, effective orchestration, and clear policies, you can create AI solutions that not only scale with your business but also integrate effortlessly into your existing workflows.