Human-in-the-Loop AI: Benefits and Challenges

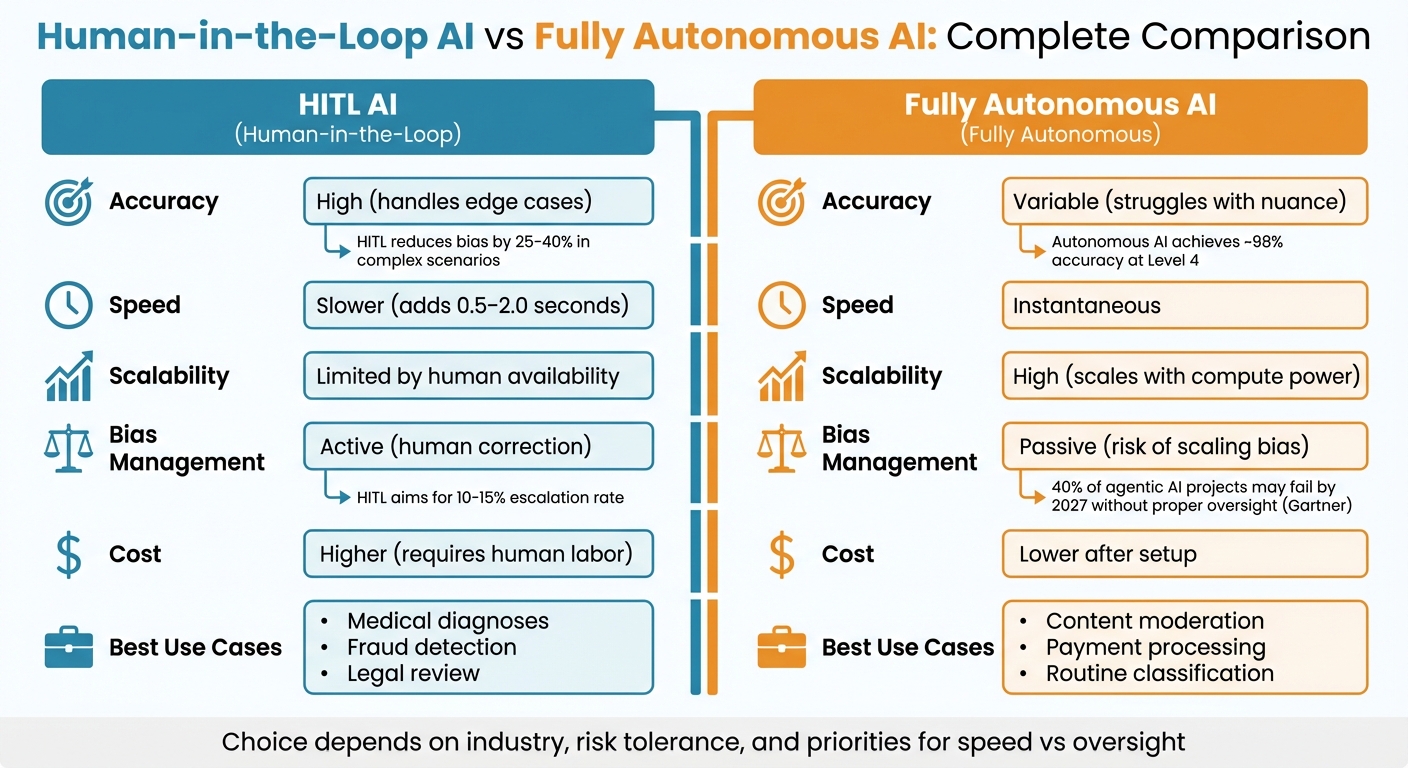

Compare human-in-the-loop and fully autonomous AI across accuracy, bias, speed, cost, and best use cases to decide which approach fits your risk and business needs.

Human-in-the-Loop (HITL) AI combines human judgment with AI systems to improve decision-making in areas like healthcare, finance, and customer service. Unlike fully autonomous AI, HITL allows humans to intervene in critical situations, ensuring better accuracy, reduced bias, and accountability. While HITL excels in managing complex, high-stakes tasks, it comes with slower decision speeds and higher labor costs. Fully autonomous AI, on the other hand, is faster and more scalable but struggles with ambiguity and bias.

Key takeaways:

- HITL AI: Best for high-risk tasks requiring human oversight (e.g., medical diagnoses, fraud detection).

- Fully Autonomous AI: Ideal for repetitive, high-volume tasks (e.g., content moderation, payment processing).

- Trade-offs: HITL ensures precision but adds costs and latency, while autonomous AI offers speed and scalability but risks errors in edge cases.

Quick Comparison:

| Feature | HITL AI | Fully Autonomous AI |
|---|---|---|
| Accuracy | High (handles edge cases) | Variable (struggles with nuance) |
| Speed | Slower (adds 0.5–2.0 seconds) | Instantaneous |
| Scalability | Limited by human availability | High (scales with compute power) |
| Bias Management | Active (human correction) | Passive (risk of scaling bias) |
| Cost | Higher (requires human labor) | Lower after setup |

The choice between HITL and fully autonomous AI depends on your industry, risk tolerance, and priorities for speed versus oversight.

1. Human-in-the-Loop AI (HITL)

Human-in-the-Loop AI (HITL) puts people in the driver's seat for critical decisions, ensuring that algorithms don’t operate entirely on their own. Instead of leaving everything to automation, HITL systems direct uncertain or high-stakes decisions to human reviewers. These reviewers can validate, adjust, or override the AI's output, creating a feedback loop that strengthens the AI over time. The result? Better performance and accountability.

Accuracy

HITL systems shine when it comes to handling edge cases - situations that fall outside the AI's training data. Fully autonomous AI often makes overconfident mistakes, but HITL systems step in by flagging cases that fall below an 80–90% confidence threshold. This ensures human oversight where it’s needed most.

Take Parexel, for instance. In October 2025, their HITL system flagged safety signals in pharmaceutical documents, slashing median processing time by 50% while doubling throughput for over 400,000 cases. Similarly, a U.S. hospital used HITL to review AI-generated surgical schedules, identifying 15% of high-risk errors and boosting patient safety scores by 20%. These examples highlight how HITL not only reduces errors but also sets the stage for tackling bias.

Bias Management

Beyond improving accuracy, HITL plays a key role in addressing bias. Human reviewers can spot and fix flawed assumptions embedded in training data. For example, a 2019 study published in Science revealed a racially biased hospital readmission algorithm. It used healthcare costs as a proxy for health needs, concluding that Black patients were healthier than white patients with similar conditions because less money was historically spent on their care. Human oversight can catch these kinds of "label bias" issues that algorithms often miss.

In fact, research shows that HITL can reduce bias in Generative AI by 25–40% in complex scenarios. Humans are also better at identifying rare but critical signals - data too infrequent for algorithms to learn from. For example, a 2023 kidney transplantation study found that while algorithms prioritized recipients based on distance, human reviewers recognized that Hawaii’s isolation required a different approach than cases within the continental U.S. Regulators have taken note: to ensure accountability, the EU AI Act mandates human oversight for high-risk AI systems, stating that these systems must be designed for effective human supervision.

Speed

While HITL enhances accuracy and reduces bias, it also needs to keep operations running efficiently. Human review adds 0.5 to 2.0 seconds per decision. To strike the right balance, production-ready HITL systems aim for a 10–15% escalation rate, so that only the most uncertain cases reach human reviewers.
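One way to hit a target escalation rate is to pick the confidence cutoff from a sample of recent model scores rather than guessing it. The sketch below is illustrative only: the function names are hypothetical, and it assumes you have a representative list of confidence scores between 0 and 1.

```python
def threshold_for_escalation_rate(confidences, target_rate):
    """Return a cutoff so that roughly `target_rate` of the sampled
    scores fall strictly below it (and would be escalated)."""
    if not confidences:
        raise ValueError("need at least one confidence score")
    if not 0.0 <= target_rate <= 1.0:
        raise ValueError("target_rate must be between 0 and 1")
    ordered = sorted(confidences)
    # Number of sampled cases we are willing to send to human reviewers.
    n_escalate = round(len(ordered) * target_rate)
    if n_escalate >= len(ordered):
        return float("inf")  # every case is escalated
    # Scores strictly below this value are escalated. With tied scores,
    # the realized rate can come out lower than the target.
    return ordered[n_escalate]


def escalation_rate(confidences, threshold):
    """Fraction of cases whose confidence is below the threshold."""
    return sum(c < threshold for c in confidences) / len(confidences)
```

Recomputing the cutoff periodically keeps reviewer workload steady as the model or the incoming data shifts.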

A U.S. bank demonstrated this balance in 2025 by integrating human investigators into its AI fraud detection system. By allowing humans to add context, such as travel patterns, to high-priority alerts, the bank reduced false positives by 35% and improved customer satisfaction scores by 18%. This shows how HITL systems can maintain speed without sacrificing oversight.

Cost

HITL systems do require ongoing investments in expertise, but these costs often pay for themselves by reducing errors and related losses. Subject matter experts, such as those in medicine or law, are more expensive than general data annotators, but their input is invaluable. For example, the same U.S. bank that improved fraud detection also cut operational costs by 46% by drastically reducing false positives.

Skipping human oversight can be risky. Gartner predicts that 40% of agentic AI projects may fail by 2027 due to reliability issues and inadequate risk controls. This serves as a clear reminder: cutting corners on human involvement can lead to costly mistakes in the long run.

2. Fully Autonomous AI

Fully autonomous AI operates without human intervention, delivering lightning-fast decisions and processing enormous data volumes. Unlike human-in-the-loop (HITL) systems, which rely on human oversight, these AI models analyze, decide, and act independently. This makes them ideal for repetitive, high-volume tasks where speed and consistency matter more than nuanced judgment.

Accuracy

Autonomous AI systems excel at handling routine tasks with consistent precision. However, they falter when faced with unexpected scenarios that fall outside their training data. These systems are particularly weak when it comes to rare, critical events - situations that are too uncommon for statistical models to learn from effectively.

This challenge is tied to what experts call the "Lumberjack Effect." Mica Endsley, from the National Academies of Sciences, Engineering, and Medicine, explains:

"The more automation is added to a system, and the more reliable and robust that automation is, the less likely that human operators overseeing the automation will be aware of critical information and able to take over manual control when needed".

In simpler terms, as automation becomes more reliable, human operators can become too disconnected to intervene effectively during rare but critical failures. These limitations in accuracy create a trade-off that becomes even clearer when we consider the speed of these systems.

Speed

One of the standout advantages of fully autonomous AI is speed. Unlike HITL systems, which require human review and can add 0.5 to 2.0 seconds per decision, autonomous systems operate in milliseconds. This speed becomes a game-changer for industries like payment processing or content moderation, where millions of decisions must be made daily. By removing delays caused by human factors - like approval times, shift schedules, or fatigue - autonomous AI offers unparalleled efficiency.

Scalability

Once trained, autonomous AI scales effortlessly. It maintains both speed and cost efficiency, even when handling massive data volumes. For example, it’s perfect for computer vision tasks involving millions of image classifications or natural language processing projects managing vast amounts of text. On the other hand, HITL systems struggle to keep up as data volumes grow, since scaling requires more human labor - a costly and time-consuming solution.

Production-ready autonomous systems are designed to achieve about 98% accuracy at Level 4, often referred to as "Semi-Autonomous Specialists".

Bias Management

One of the most significant challenges for autonomous systems is managing bias. These models can inadvertently scale biases embedded in their training data. Additionally, many operate as "black boxes", meaning their internal decision-making processes are opaque and difficult to scrutinize. Without human oversight, these systems risk perpetuating discriminatory patterns indefinitely, highlighting a key limitation compared to systems with human involvement.

Cost

Autonomous AI comes with a steep upfront cost but offers lower operational expenses over time. Setting up a simple model can cost around $5,000, while more complex deep learning systems may exceed $500,000. Specialized hardware, like GPUs, often adds another $10,000 or more, and AI experts typically earn annual salaries ranging from $120,000 to $160,000. However, once deployed, operational costs remain stable, as the system doesn’t require additional human reviewers to handle growing data volumes. This is a sharp contrast to HITL systems, where labor costs rise proportionally with workload. For applications that are high-volume but low-risk, autonomous AI offers strong cost advantages - provided occasional errors are acceptable and safeguards are in place.
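The contrast between linear labor costs and a one-time setup cost can be made concrete with a small calculation. This is a sketch under stated assumptions: the per-review cost and decision volumes passed in are hypothetical inputs you would replace with your own figures, not numbers from any study.

```python
def hitl_review_cost(decisions, escalation_rate, cost_per_review_usd):
    """Labor cost of human review. It grows linearly with decision
    volume because every escalated case needs a paid reviewer."""
    if decisions < 0 or cost_per_review_usd < 0:
        raise ValueError("decisions and cost must be non-negative")
    if not 0.0 <= escalation_rate <= 1.0:
        raise ValueError("escalation_rate must be between 0 and 1")
    return decisions * escalation_rate * cost_per_review_usd


# Hypothetical inputs: 10% escalation and $1.00 per human review.
small = hitl_review_cost(1_000_000, 0.10, 1.00)
large = hitl_review_cost(10_000_000, 0.10, 1.00)
print(f"1M decisions: ${small:,.0f} in review labor")
print(f"10M decisions: ${large:,.0f} in review labor")
```

Under these assumed inputs, a tenfold increase in volume means a tenfold increase in review labor, while the setup cost of an autonomous system is paid once.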

Advantages and Disadvantages

Choosing the right AI approach depends entirely on what you need it to accomplish. Let’s break down the strengths and limitations of two common methods: Human-in-the-Loop (HITL) AI and fully autonomous AI. Each comes with its own set of trade-offs that can significantly influence how effective your AI implementation will be.

HITL AI shines in situations where precision and oversight are non-negotiable. It’s particularly valuable in fields like healthcare diagnostics, loan approvals, and legal document reviews - areas where handling ambiguity and edge cases is critical. For instance, it can step in to manage scenarios that fall outside the scope of training data. That said, involving humans in the process comes with a trade-off: each decision takes an additional 0.5–2.0 seconds, and costs rise as more human involvement is needed. Typically, organizations aim for a 10–15% escalation rate, meaning humans review only a small fraction of cases while the rest run autonomously.
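Because only escalated cases wait for a person, the average latency added per decision is much smaller than the 0.5–2.0 second review time. A rough sketch, assuming the review delay applies only to escalated cases and that auto-handled cases add no measurable delay:

```python
def mean_added_latency(escalation_rate, review_seconds):
    """Average extra seconds per decision when only a fraction of
    cases (escalation_rate) pays the human review delay."""
    return escalation_rate * review_seconds


# Bounds built from the figures quoted above.
best_case = mean_added_latency(0.10, 0.5)   # 10% escalated, 0.5 s each
worst_case = mean_added_latency(0.15, 2.0)  # 15% escalated, 2.0 s each
print(f"average added latency: {best_case:.2f}-{worst_case:.2f} s per decision")
```

The escalated cases themselves still see the full review delay, so this average matters for throughput planning, not for the worst-case experience of an individual request.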

Fully autonomous AI, on the other hand, is ideal for high-volume, repetitive tasks where speed is the priority and occasional errors are acceptable. It can process millions of data points without tiring, deliver consistent results, and keep costs low after deployment. However, it struggles with new or unexpected situations and runs the risk of reinforcing biases present in its training data if left unchecked.

Here’s a quick comparison of the two approaches to highlight their key differences:

| Feature | Human-in-the-Loop (HITL) AI | Fully Autonomous AI |
|---|---|---|
| Accuracy | High (handles edge cases & nuance) | Variable (struggles with ambiguity) |
| Speed | Slower (human review adds 0.5–2.0 seconds) | Instantaneous (near-zero latency) |
| Scalability | Limited by human availability | High (scales with compute power) |
| Bias Management | Active (humans identify and correct biases) | Passive (risk of amplifying existing biases) |
| Cost | High (ongoing labor and expert involvement) | Lower operational costs post-deployment |
| Best Use Case | Medical diagnosis, fraud detection, legal review | Content sorting, basic chat, routine classification |

Real-world examples show how these differences in cost, speed, and bias management can directly affect both operational efficiency and the risks an organization takes on. The decision between HITL and fully autonomous AI isn’t just about technology - it’s about aligning the approach with your goals and the stakes of your application.

How to Implement HITL AI Systems

Designing and implementing a Human-in-the-Loop (HITL) AI system requires careful planning to strike the right balance between speed, safety, and scalability. The first step is setting clear confidence thresholds to decide when tasks should be handled automatically by the AI and when they need human intervention. For most organizations, these thresholds typically range from 80–90%. However, the specific threshold depends heavily on the industry. For instance, healthcare applications often require over 95% confidence due to the critical nature of patient safety. In contrast, financial services usually operate within the 90–95% range to comply with regulations and minimize financial risks. Customer service tasks, on the other hand, can function effectively with thresholds around 80–85%.
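The industry-specific thresholds above translate naturally into a small routing function. The following is a minimal sketch, not a reference implementation: the domain keys, the `Decision` type, and the default cutoff are illustrative assumptions, and each cutoff uses the lower bound of the range quoted in the text.

```python
from dataclasses import dataclass

# Illustrative cutoffs: the lower bound of each range mentioned above.
THRESHOLDS = {
    "healthcare": 0.95,
    "financial_services": 0.90,
    "customer_service": 0.80,
}
DEFAULT_THRESHOLD = 0.85  # assumed midpoint of the general 80-90% range


@dataclass
class Decision:
    path: str          # "auto" or "human_review"
    confidence: float
    threshold: float


def route_decision(confidence, domain):
    """Send a prediction to automation or to a human reviewer,
    depending on the confidence cutoff for its domain."""
    threshold = THRESHOLDS.get(domain, DEFAULT_THRESHOLD)
    path = "auto" if confidence >= threshold else "human_review"
    return Decision(path, confidence, threshold)
```

The same 0.92 confidence score is handled automatically under the financial-services cutoff but escalated under the healthcare cutoff, which is the point of tuning thresholds per domain.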

A key part of implementing HITL systems is establishing clear decision workflows. You’ll need to decide between two main oversight patterns: synchronous approval and asynchronous audit.

Synchronous approval is used for high-risk tasks where safety is paramount. In this setup, the AI pauses execution until a human approves the action - essential for irreversible tasks like transferring large sums of money (e.g., over $50,000) or deleting critical data. While this approach adds 0.5–2.0 seconds of latency per decision, it provides maximum safety.

Asynchronous audits, on the other hand, are suited for lower-risk tasks. Here, the AI proceeds with its actions immediately while logging decisions for later human review. This method minimizes latency and works well for tasks like content recommendations.

The two patterns can coexist in one system. For example, Amazon’s HR system handles routine time-off balance checks autonomously but requires user confirmation for booking or canceling time off. For more complex changes, it uses a "Return of Control" workflow, allowing employees to review and adjust details before finalizing the request.
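The two oversight patterns can be sketched as a single gate that either blocks on approval or logs for later review. Everything here is hypothetical scaffolding: the `Action` and `Oversight` names, the `approve` callback (standing in for whatever review tool you use), and the $50,000 limit borrowed from the example above.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Action:
    name: str
    amount_usd: float = 0.0
    irreversible: bool = False


@dataclass
class Oversight:
    approve: Callable[[Action], bool]  # blocking human-approval hook
    audit_log: list = field(default_factory=list)
    approval_limit_usd: float = 50_000

    def needs_sync_approval(self, action):
        """High-risk actions must wait for a human before running."""
        return action.irreversible or action.amount_usd > self.approval_limit_usd

    def run(self, action, execute):
        if self.needs_sync_approval(action):
            # Synchronous approval: nothing happens until a human agrees.
            if not self.approve(action):
                return "rejected"
            execute(action)
            return "executed_after_approval"
        # Asynchronous audit: act now, record the action for later review.
        execute(action)
        self.audit_log.append(action)
        return "executed_pending_audit"
```

In a real deployment the `approve` hook would wait on a review queue and the audit log would be durable storage; the in-memory versions here only show the control flow.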

To ensure human intervention happens at the right time, avoid relying on raw softmax scores, which tend to be overconfident. Instead, calibrate the scores or estimate uncertainty with techniques like temperature scaling, ensemble disagreement, or conformal prediction. These methods help fine-tune when human review is triggered. Another critical metric to track is the override rate - the percentage of reviewed cases where human reviewers disagree with AI recommendations. A sustainable target is typically 10–15%, while rates nearing 60% suggest miscalibration and potential inefficiencies. Proper calibration and oversight metrics ensure the HITL system operates reliably and efficiently.
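Temperature scaling is the simplest of these calibration techniques: divide the model's logits by a single scalar T, fitted on held-out labeled data, before applying softmax. The sketch below uses only the standard library and a coarse grid search for T, which is a simplification; in practice T is usually fitted with a numerical optimizer. The function names and the candidate grid are assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax over logits divided by a temperature (T > 1 softens)."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def mean_nll(logit_rows, labels, temperature):
    """Average negative log-likelihood of the true labels."""
    total = 0.0
    for logits, label in zip(logit_rows, labels):
        prob = softmax(logits, temperature)[label]
        total -= math.log(max(prob, 1e-12))  # guard against log(0)
    return total / len(labels)


def fit_temperature(logit_rows, labels, candidates=None):
    """Pick the temperature with the lowest validation NLL (grid search)."""
    if candidates is None:
        candidates = [0.5 + 0.1 * i for i in range(46)]  # 0.5 to 5.0
    return min(candidates, key=lambda t: mean_nll(logit_rows, labels, t))
```

If a model is right only 70% of the time on cases where its raw softmax score is about 98%, the fitted temperature comes out well above 1, pulling the reported confidence down toward the observed accuracy so that more of those cases fall below the review threshold.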

When implemented correctly, HITL systems can significantly reduce processing times and error rates. Feedback loops play a crucial role here, as every human correction is captured as structured data, continuously improving the AI model over time. This approach turns HITL from a potential bottleneck into a strategic advantage.
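Capturing each human correction as structured data can be as simple as one record per reviewed case. A minimal sketch, with hypothetical field names, that also computes the override rate discussed earlier and serializes the records as JSON lines for a retraining pipeline:

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class ReviewRecord:
    case_id: str
    ai_label: str
    ai_confidence: float
    human_label: str

    @property
    def overridden(self):
        """True when the reviewer disagreed with the AI's output."""
        return self.ai_label != self.human_label


def override_rate(records):
    """Share of reviewed cases where the human changed the AI's label."""
    if not records:
        return 0.0
    return sum(r.overridden for r in records) / len(records)


def to_training_rows(records):
    """Serialize reviewed cases as JSON lines, one string per case."""
    return [json.dumps({**asdict(r), "overridden": r.overridden}) for r in records]
```

Keeping the AI's original label and confidence next to the human's decision is what makes the data reusable: the same records feed retraining, calibration checks, and override-rate monitoring.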

For organizations aiming to prioritize ethics, scalability, and measurable results, NAITIVE AI Consulting Agency offers expertise in designing HITL systems with built-in oversight mechanisms. Their frameworks align with regulations like the EU AI Act. As outlined in Article 14:

"High-risk AI systems shall be designed and developed in such a way... that they can be effectively overseen by natural persons during the period in which they are in use".

Conclusion

Choosing the right AI approach - whether it’s Human-in-the-Loop (HITL) for precision, accountability, and ethical oversight, a fully autonomous system for speed and scale, or a hybrid that involves humans only in low-confidence scenarios - depends heavily on your business needs and industry context.

For many critical sectors like healthcare, finance, and legal services, a hybrid model often strikes the right balance. With confidence-based routing, AI can autonomously handle routine, high-confidence tasks while escalating complex or high-stakes decisions to human experts. This method addresses a real reliability risk: Gartner forecasts that by 2027, 40% of agentic AI projects may fail due to insufficient oversight and risk management.

Before diving in, assess your specific requirements. Factor in the risk level of your use cases, regulatory requirements (such as the EU AI Act’s emphasis on human oversight for high-risk systems), and the balance between speed and safety. Establish confidence thresholds tailored to your industry and monitor override rates to ensure your system stays calibrated.

For businesses ready to implement these models, NAITIVE AI Consulting Agency offers expertise in designing HITL and hybrid AI systems. They focus on integrating governance frameworks that meet regulatory standards while maintaining operational efficiency. Their approach ensures AI doesn’t just automate tasks - it empowers human decision-makers to concentrate on creativity, strategic thinking, and nuanced judgment where it’s needed most.

The future of AI lies in building systems with oversight that enable safe, scalable, and accountable deployment. Whether you opt for HITL, fully autonomous, or hybrid models, the ultimate aim is clear: achieving automation that enhances efficiency without sacrificing precision or ethical integrity.

FAQs

What are the key benefits of Human-in-the-Loop AI for high-risk industries?

Human-in-the-Loop AI brings together the strengths of human expertise and AI technology to improve safety, precision, and ethical decision-making in industries where errors can be costly. This collaboration helps tackle challenges such as AI biases, unclear situations, and rare edge cases, ensuring decisions are more accurate and align with regulatory standards.

By integrating human judgment into AI workflows, organizations can create systems that are more trustworthy and dependable, which is especially critical in fields like healthcare, finance, and aviation, where the stakes are incredibly high.

How does Human-in-the-Loop AI reduce bias compared to fully autonomous AI systems?

Human-in-the-Loop AI tackles bias by incorporating human oversight at crucial points in the AI development process - think data collection, labeling, and model evaluation. With human judgment in the mix, these systems can address challenges like biased datasets, errors in labeling, and a lack of context that machines might miss.

By involving people, these systems not only improve accuracy but also align better with ethical standards. This collaboration blends the precision of machines with the moral reasoning of humans, creating AI systems that are more dependable and fair.

What should businesses consider when choosing between human-in-the-loop and fully autonomous AI systems?

When choosing between human-in-the-loop (HITL) systems and fully autonomous AI, the decision hinges on the task at hand and the risks involved. HITL systems shine in scenarios that call for nuanced judgment, ethical considerations, or handling situations where AI might falter - like edge cases or biases. These systems are particularly valuable in fields such as healthcare, finance, or industries where safety is paramount, as they help ensure better accuracy, accountability, and oversight.

Fully autonomous systems, however, are better suited for repetitive, clearly defined tasks where the focus is on maximizing efficiency and cutting costs. To make the right choice, businesses should also evaluate the maturity of their AI technology and their ability to support human-AI collaboration, including setting up systems for monitoring and escalation. Ultimately, the decision comes down to balancing operational efficiency with the need for oversight, trust, and transparency.