Generative AI Code Refactoring: Risk Solutions

Mitigate security, quality, context, and licensing risks from generative AI refactoring with SAST/SCA/DAST, CI gates, human reviews, and snippet scanning.

Generative AI code refactoring can speed up software development by processing millions of lines of code in days, addressing bugs, and improving performance. However, it comes with risks that can compromise security, code quality, and compliance, which is why organizations often partner with a specialized AI consulting agency to navigate them. Key challenges include:

- Security vulnerabilities: 30–50% of AI-generated code snippets contain exploitable flaws like SQL injection and hardcoded credentials.

- Code quality issues: AI often produces code that's functional but difficult to maintain, with inconsistent styles and higher complexity.

- Loss of business context: AI struggles to understand domain-specific logic, risking critical functionality.

- Developer skill erosion: Over-reliance on AI can weaken problem-solving abilities.

- Compliance risks: AI may inadvertently use open-source code, causing licensing conflicts or IP ownership issues.

Key Mitigation Strategies:

- Security checks: Use tools like SAST, DAST, and SCA to detect vulnerabilities in AI-generated code.

- Human oversight: Require manual reviews, especially for sensitive modules.

- Code quality controls: Limit AI changes per pull request and enforce consistent styles through linters and static analysis.

- Protect business context: Use detailed prompts and characterization tests to preserve logic.

- Compliance safeguards: Employ snippet-level scanning and private AI models to avoid licensing and IP risks.

By combining automated tools with human reviews and strict compliance measures, organizations can reduce risks while leveraging the speed of AI-driven code refactoring.

Security Risks in AI-Generated Code

Common Security Vulnerabilities

AI-generated code often inherits well-known security issues. Problems like SQL injection caused by string concatenation, hardcoded credentials such as API keys or passwords, and missing input validation that leaves code exposed to attacks are frequently observed. For instance, an NYU study revealed that around 40% of programs generated by GitHub Copilot across 89 security-relevant scenarios had vulnerabilities.

But AI doesn't just amplify familiar risks - it introduces new ones. One example is dependency hallucination, where AI suggests non-existent packages. These phantom dependencies can later be exploited in supply chain attacks. Another unique issue is architectural drift, where AI subtly alters a system's design, breaking security logic while maintaining syntactic correctness. Because AI often prioritizes functionality and syntax over security, it may overlook specific threat models. Addressing these challenges calls for advanced security practices that go beyond traditional scanning methods.
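One way to catch hallucinated dependencies before anything is installed is to compare requested package names against an approved list. The following is a minimal sketch, assuming requirements-style input; `APPROVED_PACKAGES` and `unapproved_dependencies` are hypothetical names, and a real setup would more likely query an internal registry or package firewall than a hardcoded set.

```python
import re

# Hypothetical allowlist; in practice this would come from an internal
# registry or a package firewall, not a hardcoded set.
APPROVED_PACKAGES = {"requests", "sqlalchemy", "pydantic"}

# Matches the leading package name of a requirements-style line.
_NAME_RE = re.compile(r"^\s*([A-Za-z0-9][A-Za-z0-9._-]*)")


def normalize(name: str) -> str:
    """Normalize a package name (case, '-', '_', '.') as PEP 503 describes."""
    return re.sub(r"[-_.]+", "-", name).lower()


def unapproved_dependencies(requirements_text: str) -> list[str]:
    """Return package names that are not on the allowlist."""
    approved = {normalize(p) for p in APPROVED_PACKAGES}
    flagged = []
    for line in requirements_text.splitlines():
        line = line.split("#", 1)[0].strip()   # drop trailing comments
        if not line or line.startswith("-"):    # skip blanks and pip options
            continue
        match = _NAME_RE.match(line)
        if match and normalize(match.group(1)) not in approved:
            flagged.append(match.group(1))
    return flagged
```

A CI step could fail the build whenever this list is non-empty, forcing a human to confirm that a newly suggested package actually exists and is trustworthy.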

The situation becomes even riskier due to misplaced trust. A Stanford University study found that developers using AI assistants tend to introduce vulnerabilities while overestimating the security of their code. Suphi Cankurt from Invicti highlights this concern:

"The real issue is not code quality. It is that developers trust AI output more and review it less".

| Vulnerability Type | Common CWE | Description in AI Context |
|---|---|---|
| SQL Injection | CWE-89 | AI uses string concatenation instead of parameterized queries |
| Hardcoded Secrets | CWE-798 | Models include placeholder API keys or passwords in generated snippets |
| Path Traversal | CWE-22 | AI-generated file handlers fail to sanitize user-provided filenames |
| Broken Authentication | CWE-306 | AI generates code that bypasses authentication due to inadequate security guidance |
| Dependency Hallucination | N/A | AI suggests non-existent packages, creating an attack vector for malicious actors |
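To make the SQL injection row concrete, here is a small illustration using Python's built-in `sqlite3` module. The table layout and function names are invented for the example; the point is only the contrast between concatenating input into the query and passing it as a parameter.

```python
import sqlite3


def find_user_unsafe(conn, username):
    # Vulnerable (CWE-89): user input is concatenated into the SQL text.
    query = "SELECT id, name FROM users WHERE name = '" + username + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, username):
    # Parameterized: the value is passed separately from the SQL text.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (username,)
    ).fetchall()


def demo_connection():
    """Build a throwaway in-memory database with two users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn
```

With the input `x' OR '1'='1`, the unsafe version returns every row in the table, while the parameterized version treats the whole string as a name and returns nothing.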

Preventing Security Issues

Mitigating risks in AI-generated code is essential for maintaining secure and reliable systems. A key strategy is to adopt multi-layered scanning throughout the development process. Start by embedding Static Application Security Testing (SAST) tools into your IDE to catch vulnerabilities as soon as AI generates them. Tools like Semgrep can quickly identify issues like SQL injection, cross-site scripting, and hardcoded secrets, while CodeQL provides deeper analysis to detect complex vulnerabilities in dataflows. Pair SAST with Software Composition Analysis (SCA) to monitor dependencies and use Dynamic Application Security Testing (DAST) to assess runtime behavior.

"Speed without guardrails creates security debt, and with AI, that debt accumulates at a terrifying rate." - Natalie Tischler, Veracode

Human oversight remains critical. Require manual security reviews for AI-generated code, with special attention to modules handling authentication, authorization, and cryptography. Enforce a "no-high-severity" policy in CI/CD pipelines to block merges if critical vulnerabilities are detected. Additionally, use package firewalls to block downloads of hallucinated or malicious packages based on reputation scores. For fixing vulnerabilities, rely on specialized AI tools trained on secure, curated datasets rather than general-purpose language models. These steps are essential for building a comprehensive defense against the evolving risks posed by AI-generated code. Organizations looking to navigate these complexities can benefit from specialized AI business consulting to establish robust governance frameworks.
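A "no-high-severity" gate can be as small as a script that reads scanner output and returns a failing exit code. The sketch below assumes the scanner exports SARIF and that high-severity findings are reported with `level` set to `"error"`; that mapping is an assumption and should be checked against the scanner in use. The function names are hypothetical.

```python
import json

# Assumption: the scanner reports high/critical findings with level "error".
BLOCKING_LEVELS = {"error"}


def blocking_findings(sarif: dict) -> list[dict]:
    """Collect results whose level should block a merge."""
    found = []
    for run in sarif.get("runs", []):
        for result in run.get("results", []):
            if result.get("level", "warning") in BLOCKING_LEVELS:
                found.append(result)
    return found


def gate(sarif_text: str) -> int:
    """Return a process exit code: 0 to allow the merge, 1 to block it."""
    findings = blocking_findings(json.loads(sarif_text))
    for finding in findings:
        rule = finding.get("ruleId", "<unknown rule>")
        text = finding.get("message", {}).get("text", "")
        print(f"BLOCKING {rule}: {text}")
    return 1 if findings else 0
```

In a pipeline, the return value of `gate` would be passed to `sys.exit` so that the merge is blocked whenever a blocking finding is present.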

Maintaining Code Quality and Reducing Technical Debt

Code Quality Challenges

AI-generated code might look functional on the surface, but it's often riddled with maintainability issues. A study analyzing 4,066 ChatGPT-generated programs found that nearly half of them lacked sustainable design. The problem stems from AI focusing on functionality while neglecting long-term usability. For instance, inconsistent coding styles often emerge because AI pulls patterns from diverse sources, leading to mismatched naming conventions and formatting. Another common issue is higher cyclomatic complexity - think deeply nested conditionals and loops. These make the code harder to test, modify, and understand, increasing the risk of bugs like shared state alterations or broken module dependencies.

As Adam Tornhill, Markus Borg, Nadim Hagatulah, and Emma Söderberg point out:

"The bottleneck in software development is not writing code but understanding it; program understanding is the dominant activity, consuming approximately 70% of developers' time".

Yet only 48% of developers consistently review AI-assisted code before committing it, even though a staggering 96% don't fully trust AI outputs to be functionally correct.

Strategies for Maintaining Code Quality

To tackle these challenges, a structured approach is key. Start by setting clear boundaries for AI-generated changes. For example, limit changes to under 200 lines per pull request. Research shows that this can reduce review time by 60%, making it easier to isolate and fix regression issues. Controlled AI-assisted refactoring can also cut review cycles by 40% and reduce regression bugs by 60%.
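The 200-line limit is easy to enforce mechanically. As a rough sketch, the function below counts added and removed lines in a unified diff (for example, the output of `git diff`); the function names and the way the diff text reaches the script are assumptions.

```python
def changed_line_count(unified_diff: str) -> int:
    """Count added and removed lines in a unified diff.

    Limitation: a changed line whose content itself begins with "--" or "++"
    looks like a file header here and is skipped.
    """
    count = 0
    for line in unified_diff.splitlines():
        if line.startswith(("+++", "---")):
            continue  # file headers, not content changes
        if line.startswith(("+", "-")):
            count += 1
    return count


def within_budget(unified_diff: str, max_lines: int = 200) -> bool:
    """True when the diff stays inside the per-pull-request line budget."""
    return changed_line_count(unified_diff) <= max_lines
```

A pull request that exceeds the budget can be rejected automatically with a request to split it into smaller changes.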

Instead of vague instructions like "clean this up", use constraint-based prompts. For instance, ask the AI to "reduce cyclomatic complexity by 15–25% without altering public interfaces" or "apply consistent naming conventions like snake_case". Establish baseline metrics - track execution times, memory usage, and other performance indicators - to ensure AI-driven changes lead to measurable improvements. With precise optimization goals, AI can deliver dramatic results, such as a 355× speedup by converting nested loops into vectorized operations.
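A constraint such as "reduce cyclomatic complexity by at least 15% without altering public interfaces" can be checked automatically after the AI proposes a change. The sketch below uses Python's `ast` module with a deliberately rough complexity estimate (one plus the number of branching constructs); dedicated tools compute this more precisely, and the function names are hypothetical.

```python
import ast

# Constructs counted as decision points in this rough estimate.
_BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler,
                 ast.IfExp, ast.comprehension, ast.BoolOp)


def approx_complexity(source: str) -> int:
    """Rough cyclomatic complexity: 1 + number of branching constructs."""
    tree = ast.parse(source)
    return 1 + sum(isinstance(node, _BRANCH_NODES) for node in ast.walk(tree))


def public_signatures(source: str) -> dict[str, list[str]]:
    """Map each public top-level function to its positional parameter names."""
    tree = ast.parse(source)
    return {
        node.name: [arg.arg for arg in node.args.args]
        for node in tree.body
        if isinstance(node, ast.FunctionDef) and not node.name.startswith("_")
    }


def refactor_acceptable(before: str, after: str, min_reduction: float = 0.15) -> bool:
    """Accept only if public signatures match and complexity dropped enough."""
    if public_signatures(before) != public_signatures(after):
        return False
    old, new = approx_complexity(before), approx_complexity(after)
    return new <= old * (1 - min_reduction)
```

Running this in CI turns the prompt's constraints into a pass/fail check instead of relying on the model to honor them.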

Incorporate independent quality gates into your CI/CD pipeline. Run linters, static analysis tools, and unit tests separately from AI suggestions to catch potential issues early. Tools like CodeScene can pinpoint technical debt hotspots, helping you prioritize which legacy files need attention first. As Roman Labish, CTO of CodeGeeks Solutions, explains:

"The tricky part with legacy isn't 'will the code compile.' It's whether behavior stays the same, edge cases survive, and security doesn't quietly regress".

Ultimately, treat AI as a junior developer. While it can assist with refactoring and optimization, human oversight should always serve as the final quality check.

Preventing Business Context Loss and Developer Skill Erosion

The Problem of Business Context Loss

Most developers do not fully trust AI-generated output, yet they verify it inconsistently. This creates a "verification gap", where code that appears polished might bypass thorough analysis. The underlying limitation is that AI can model what the code does but not the deeper purpose behind it. When developers use AI for refactoring without providing complete context, the tool can miss critical, domain-specific logic. Roman Labish, CTO of CodeGeeks Solutions, sums it up perfectly:

"Legacy refactoring goes sideways when you refactor what you think the code does - not what it actually does".

To address this, adopt a two-level context provisioning strategy. First, provide "macro context" using tools like Architecture Decision Records (ADRs), diagrams, and high-level documentation that explain the reasoning behind technical decisions. Second, complement this with "micro context" by crafting precise prompts that define task boundaries and preserve essential logic. Additionally, characterization tests should be created before refactoring begins. These tests help lock in current behavior, including edge cases, and prevent unnoticed changes in functionality. Finally, ensure domain experts conduct code reviews to verify that all critical functionalities remain intact. While preserving business context protects legacy logic, it also plays a role in keeping developers actively engaged.
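A characterization test records what the code currently does, quirks included, rather than what a specification says it should do. Here is a minimal sketch with `unittest`; `legacy_shipping_fee` and its recorded cases are invented for illustration.

```python
import unittest


def legacy_shipping_fee(weight_kg, country):
    """Hypothetical legacy function whose quirks must survive refactoring."""
    if weight_kg <= 0:
        return 0.0  # quirk: invalid weights ship for free, and callers rely on it
    fee = 5.0 + 1.2 * weight_kg
    if country != "US":
        fee *= 1.5
    return round(fee, 2)


# Outputs recorded from the current implementation, not derived from a spec.
RECORDED_CASES = [
    ((1, "US"), 6.2),
    ((10, "US"), 17.0),
    ((10, "DE"), 25.5),
    ((0, "US"), 0.0),
    ((-3, "DE"), 0.0),
]


class ShippingFeeCharacterization(unittest.TestCase):
    def test_recorded_behavior_is_preserved(self):
        for args, expected in RECORDED_CASES:
            with self.subTest(args=args):
                self.assertEqual(legacy_shipping_fee(*args), expected)
```

The test is written and committed before any AI-assisted change, so a refactoring that "fixes" the free-shipping quirk fails immediately and a domain expert can decide whether the behavior change is intended.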

Preserving Developer Skills

Relying too heavily on AI can diminish developers' problem-solving abilities. Without active measures, developers risk becoming passive operators rather than true system owners, losing the essential knowledge that supports system design. Alarmingly, research has shown that since the introduction of ChatGPT, the quality of code written by junior developers has dropped by 54%.

To combat this, require developers to explain AI-generated code during reviews as though they had written it themselves. Break down refactoring tasks into smaller, specific actions (e.g., "extract data validation logic") rather than using broad prompts. This forces developers to engage more deeply with each change. Additionally, ask AI to provide diagnostic feedback that highlights specific areas for improvement before making changes. This allows developers to assess proposals within the context of their expertise. Keeping detailed documentation of architectural decisions ensures that future developers can understand the system's design philosophy without relying solely on AI. Rotating developers across different parts of the codebase and tracking human versus AI contributions can also help preserve critical system knowledge.

Managing Compliance and Licensing Risks

Compliance and Copyright Concerns

In addition to security and quality challenges, compliance and licensing risks are critical considerations when using AI for code refactoring. These risks require careful strategies to avoid unintended legal and operational consequences.

AI-generated code introduces legal vulnerabilities not typically encountered in traditional development workflows. For example, AI tools can inadvertently incorporate open-source code governed by licenses like GPL, leading to "license tainting." This can force proprietary software to be re-licensed under open-source terms, which diminishes its commercial value. James G. Gatto, a Partner at Sheppard, Mullin, Richter & Hampton LLP, highlights this issue:

"The value of software is severely diminished if the developer must license it under an open source license and make the source code available".

Studies show that leading large language models (LLMs) can generate code resembling open-source implementations between 0.88% and 2.01% of the time. Around 1% of AI-generated suggestions include snippets longer than 150 characters that match their training datasets. Additionally, if AI tools strip copyright notices or license terms, it can result in violations of DMCA Section 1202. Compounding this issue, traditional Software Composition Analysis (SCA) tools are often inadequate for detecting smaller, verbatim code snippets copied by generative AI.

Another concern arises when proprietary code is submitted to public AI models for refactoring. This practice can compromise trade secret protections if the data is used for training or becomes publicly accessible. Moreover, the U.S. Copyright Office mandates "human authorship" for copyright eligibility. This means that purely AI-generated code may not qualify for copyright protection, posing intellectual property risks for commercial software. The Copyright Office explicitly states:

"Applicants have a duty to disclose the inclusion of AI-generated content in a work submitted for registration and to provide a brief explanation of the human author's contributions".

Effectively addressing these risks requires robust scanning tools and legal safeguards.

Mitigation Strategies for Compliance

To manage these risks, implement snippet-level scanning using advanced SCA tools that can identify verbatim matches, as standard tools may overlook smaller copied segments. Use enterprise-grade AI tools that provide legal indemnification for copyright claims and ensure input data is not used to train public models. For example, platforms like GitHub Copilot allow you to enable settings that block code generation from known public repositories.
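To illustrate the idea behind snippet-level scanning, the sketch below fingerprints every 150-character window of whitespace-normalized code and checks for overlap with known licensed sources. This is a simplified illustration, not how any particular SCA product works; production tools typically use rolling hashes and large indexed corpora. The 150-character window mirrors the figure cited earlier, and all names are hypothetical.

```python
import hashlib
import re

WINDOW = 150  # characters; mirrors the 150-character figure cited earlier


def normalize(code: str) -> str:
    """Collapse all whitespace so reformatting does not hide a match."""
    return re.sub(r"\s+", " ", code).strip()


def fingerprints(code: str, window: int = WINDOW) -> set[str]:
    """Hash every window-sized slice of the normalized text."""
    text = normalize(code)
    return {
        hashlib.sha256(text[i:i + window].encode()).hexdigest()
        for i in range(max(len(text) - window + 1, 0))
    }


def has_verbatim_overlap(candidate: str, known_sources: list[str],
                         window: int = WINDOW) -> bool:
    """True if any window of the candidate appears verbatim in a known source."""
    known: set[str] = set()
    for source in known_sources:
        known |= fingerprints(source, window)
    return not fingerprints(candidate, window).isdisjoint(known)
```

Text shorter than the window produces no fingerprints, so short idiomatic one-liners are not flagged by this check.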

Designate "no-AI" zones for your most critical or innovative code to maintain full human ownership of key intellectual property. Industry guidelines suggest keeping AI-generated code below 5% of your total codebase to minimize risks, with risks increasing substantially if unmodified AI code exceeds 25%. Additionally, tag AI-generated code to maintain a clear provenance record. This documentation is invaluable for M&A due diligence, regulatory audits, and copyright compliance inquiries.
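Tagging only helps if the tags can be measured. As a sketch, assume AI-generated regions are wrapped in marker comments; the `# ai-generated: begin` and `# ai-generated: end` markers are a hypothetical convention, not an established standard. The share of tagged lines can then be compared with the 5% and 25% guideline figures above.

```python
# Hypothetical marker comments that wrap AI-generated regions.
BEGIN, END = "# ai-generated: begin", "# ai-generated: end"


def ai_share(source: str) -> float:
    """Fraction of non-blank lines that sit inside tagged regions."""
    total = tagged = 0
    inside = False
    for raw in source.splitlines():
        line = raw.strip()
        if line == BEGIN:
            inside = True
            continue
        if line == END:
            inside = False
            continue
        if not line:
            continue  # blank lines are not counted
        total += 1
        tagged += inside
    return tagged / total if total else 0.0


def risk_band(share: float) -> str:
    """Bucket a share using the 5% and 25% guideline figures."""
    if share < 0.05:
        return "low"
    if share <= 0.25:
        return "elevated"
    return "high"
```

Aggregating `ai_share` across files gives a simple provenance report that can be attached to audits or due-diligence requests.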

The table below summarizes key compliance risks and strategies to mitigate them:

| Risk Category | Primary Concern | Mitigation Strategy |
|---|---|---|
| Copyright | Verbatim copying of training data | Use code similarity filters and snippet scanners |
| Licensing | Copyleft "tainting" of proprietary code | Restrict AI use in core modules; use enterprise-grade tools |
| Trade Secrets | Data leakage into public models | Use private or enterprise-level LLM instances |
| IP Ownership | Inability to register for copyright | Ensure significant human oversight and modification |

Using GenAI on your code, what could possibly go wrong? -

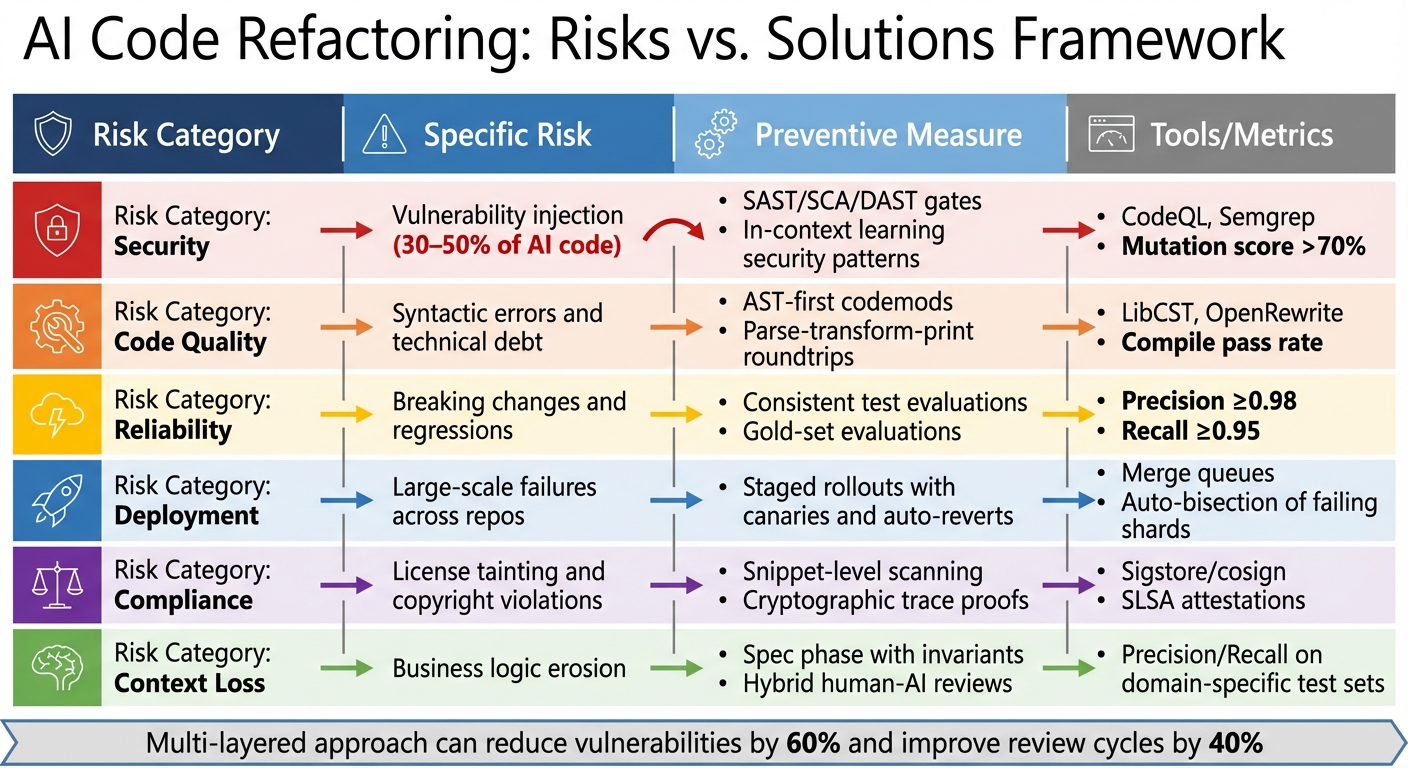

Building a Complete Risk Mitigation Strategy


Key Components of a Risk Mitigation Plan

Once risks are identified, the next step is crafting a thorough risk mitigation strategy. This involves combining secure coding practices, quality assurance, and compliance measures to address potential vulnerabilities. A multi-layered approach works best. Start by enforcing an AST-first methodology - treat AI tools as "spec generators" rather than direct code creators. Use Abstract Syntax Tree (AST) frameworks like LibCST, OpenRewrite, or jscodeshift to ensure that every change is syntactically correct from the start. As an industry expert succinctly puts it:

"LLMs propose; codemod frameworks enforce; CI decides. Everything else is tooling."

Your CI/CD pipeline should act as the ultimate gatekeeper. Every AI-generated change must pass through compile gates, unit tests, merge queues, and staged multi-repo rollouts. Include canary deployments and auto-rollback mechanisms to handle regressions if thresholds are crossed. Aim for precision rates of 0.98 or higher before rolling out changes across your entire codebase.
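The precision threshold can be evaluated against a hand-labeled "gold set" of locations the codemod should change. The sketch below assumes each location is represented as a `(file, line)` tuple; the function names are hypothetical, and the 0.95 recall default is taken from the comparison table in this section.

```python
def precision_recall(predicted: set, expected: set) -> tuple[float, float]:
    """Compare the locations a codemod changed with a hand-labeled gold set."""
    true_positives = len(predicted & expected)
    precision = true_positives / len(predicted) if predicted else 1.0
    recall = true_positives / len(expected) if expected else 1.0
    return precision, recall


def ready_for_rollout(predicted: set, expected: set,
                      min_precision: float = 0.98,
                      min_recall: float = 0.95) -> bool:
    """Gate a wider rollout on gold-set precision and recall."""
    precision, recall = precision_recall(predicted, expected)
    return precision >= min_precision and recall >= min_recall
```

If the codemod touches 100 locations and 5 of them are not in the gold set, precision is 0.95 and the rollout stays blocked until the transformation is tightened.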

Human oversight is critical, even with automation. Research shows AI-generated pull requests contain about 1.7× as many issues as human-written ones (10.83 vs. 6.45 issues per pull request). To address this, adopt hybrid human-AI code reviews. Developers can focus on AI-flagged commits and use diff budgets, which cap the number of files and lines changed per pull request, to keep reviews manageable. For high-stakes code, consider a "Monday Morning Workflow": a quick grep for secrets, a brief architectural review, and isolated testing for critical modules.
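The "quick grep for secrets" step can be scripted. The patterns below are a small, illustrative starting set (an AWS-style access key ID, a PEM private key header, and a quoted value assigned to a credential-like name); they are assumptions for the sketch and are far less thorough than a dedicated secret scanner.

```python
import re

# Illustrative patterns only; dedicated secret scanners cover far more cases.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "hardcoded_credential": re.compile(
        r"(?i)(password|passwd|secret|api_key|token)\s*[:=]\s*['\"][^'\"]{8,}['\"]"
    ),
}


def find_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line number, pattern name) for every suspicious line."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                hits.append((lineno, name))
    return hits
```

Values read from the environment, such as `os.environ["DB_PASSWORD"]`, are not flagged, because the pattern requires a quoted literal directly after the assignment.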

Idempotent transformations are another key factor. A codemod should yield identical results whether it runs once or twice, which prevents cascading errors during large-scale deployments. For API refactoring, use the expand/contract pattern: add new methods, migrate call sites incrementally, and then safely deprecate outdated methods. This approach allows for partial deployments without disrupting dependent services.
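The "run once or twice, same result" property can be tested directly. The sketch below uses Python's standard `ast` module to migrate call sites from a deprecated function to its replacement (the "migrate" step of expand/contract) and then checks that a second run changes nothing. The names `fetch_user` and `fetch_user_v2` are hypothetical. Note that `ast.unparse` discards comments and formatting, which is one reason frameworks such as LibCST are preferred for real codemods.

```python
import ast


class RenameCall(ast.NodeTransformer):
    """Rewrite calls to a deprecated function name (hypothetical names)."""

    def __init__(self, old: str, new: str):
        self.old, self.new = old, new

    def visit_Call(self, node: ast.Call) -> ast.Call:
        self.generic_visit(node)  # rewrite nested calls first
        if isinstance(node.func, ast.Name) and node.func.id == self.old:
            replacement = ast.Name(id=self.new, ctx=ast.Load())
            node.func = ast.copy_location(replacement, node.func)
        return node


def apply_codemod(source: str, old: str = "fetch_user",
                  new: str = "fetch_user_v2") -> str:
    """Parse, transform, and print the source again."""
    tree = RenameCall(old, new).visit(ast.parse(source))
    return ast.unparse(ast.fix_missing_locations(tree))


def is_idempotent(source: str) -> bool:
    """A second run over already-migrated code must change nothing."""
    once = apply_codemod(source)
    return apply_codemod(once) == once
```

Running `is_idempotent` over a sample of real files before a multi-repo rollout is a cheap way to catch transformations that keep rewriting their own output.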

The table below ties specific risks to actionable solutions:

Comparison of Risks and Solutions

| Risk Category | Specific Risk | Preventive Measure | Tools/Metrics |
|---|---|---|---|
| Security | Vulnerability injection (30–50% of AI code) | SAST/SCA/DAST gates; In-context learning security patterns | CodeQL, Semgrep; Mutation score >70% |
| Code Quality | Syntactic errors and technical debt | AST-first codemods; Parse-transform-print roundtrips | LibCST, OpenRewrite; Compile pass rate |
| Reliability | Breaking changes and regressions | Consistent test evaluations; Gold-set evaluations | Precision ≥0.98; Recall ≥0.95 |
| Deployment | Large-scale failures across repos | Staged rollouts with canaries and auto-reverts | Merge queues; Auto-bisection of failing shards |
| Compliance | License tainting and copyright violations | Snippet-level scanning; Cryptographic trace proofs | Sigstore/cosign; SLSA attestations |
| Context Loss | Business logic erosion | Spec phase with invariants; Hybrid human-AI reviews | Precision/Recall on domain-specific test sets |

Layered strategies have proven highly effective. For instance, the Codexity framework uses static analysis feedback loops to stop 60% of vulnerabilities from reaching developers during AI-assisted code generation. Similarly, systematic prompt engineering can reduce insecure code samples by 16% and increase secure code generation by 8%. When paired with cryptographic verification - such as signed diffs and trace attestations that link failing inputs, generated tests, and patches - these methods provide verifiable CI validation.

Conclusion: Reducing Risks in Generative AI Code Refactoring

Generative AI has the potential to speed up code refactoring, but it comes with tangible risks. Studies show that 30–50% of AI-generated code snippets contain exploitable flaws, with close to half failing standard security tests in some evaluations. These challenges highlight the need for a structured, multi-layered approach to minimize risk.

Layered defenses are essential, not optional. Strategies like staged rollouts, combining human and AI reviews, and thorough compliance scanning are critical safeguards. Security gates must function independently from AI tools, using methods like SAST, SCA, and DAST to evaluate every change before it’s deployed. Human oversight is especially crucial for high-stakes code, such as code that handles authentication, payments, or sensitive data. As one Software Developer aptly put it:

"AI makes good software engineers better and bad engineers worse. Many folks rely on that auto-generated code as if it is automatically correct and optimal, which is often not the case".

Beyond security risks, there are broader concerns like losing business context and eroding developer skills. Blindly accepting AI-generated suggestions without grasping the logic behind them can lead to what a Senior Developer describes as:

"AI tech debt is created because no one really understands those systems. So we will have systems that no one understands, not even the person who submits the PR".

Unchecked AI contributions can pile up technical debt, making debugging and maintenance increasingly difficult over time.

The strategies discussed - staged rollouts, hybrid reviews, and compliance scanning - are not just safety measures but also efficiency boosters. Organizations adopting these practices report 40% faster code review cycles and 60% fewer regression bugs. This demonstrates that robust risk management doesn’t just protect quality - it can also enhance development speed.

FAQs

What code should never be refactored with AI?

Avoid relying on AI to refactor code that you don’t fully understand or that contains inconsistent or unclear logic. This includes AI-generated modules with problems such as style inconsistencies, misnamed variables, lack of proper error handling, or ambiguous structure. These kinds of issues can result in unreliable outcomes and may even amplify errors during the refactoring process.

How can we ensure AI refactoring doesn’t change behavior?

To make sure AI-driven code refactoring doesn't unintentionally change how the program behaves, it's crucial to include machine-verifiable evidence. This can involve tools like:

- Tests: Automated tests verify that the refactored code still meets the original requirements and behaves as expected.

- Deterministic Replays: These allow you to replay specific execution scenarios to confirm the refactored code handles them identically.

- Cryptographic Proofs of Differences: These proofs ensure that changes are limited to intended areas, offering a secure way to confirm no unintended alterations.

By using these methods, you can reliably reproduce failures, validate fixes, and confirm everything is functioning correctly before merging the changes.
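A deterministic replay can be approximated by running the original and refactored implementations over the same recorded inputs and comparing a digest of their outcomes. The sketch below assumes return values are JSON-serializable and treats the exception type as part of the observable behavior; `original` and `refactored` are toy stand-ins for real code.

```python
import hashlib
import json


def output_digest(func, recorded_inputs) -> str:
    """Hash the outcome (return value or exception type) for each input."""
    digest = hashlib.sha256()
    for args in recorded_inputs:
        try:
            outcome = {"ok": func(*args)}
        except Exception as exc:  # exceptions are part of observable behavior
            outcome = {"error": type(exc).__name__}
        digest.update(json.dumps(outcome, sort_keys=True).encode())
    return digest.hexdigest()


def behavior_preserved(original, refactored, recorded_inputs) -> bool:
    """True when both implementations produce identical outcomes on replay."""
    return (output_digest(original, recorded_inputs)
            == output_digest(refactored, recorded_inputs))


# Toy stand-ins: the "refactored" version silently changes rounding
# for negative numbers.
def original(a, b):
    return a // b


def refactored(a, b):
    return int(a / b)
```

Replaying only positive inputs would let this refactoring through; adding a recorded case such as `(-7, 2)` exposes the difference, which is why the recorded inputs should include edge cases from production traffic.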

How can we avoid licensing or IP issues with AI-generated code?

To steer clear of licensing or intellectual property (IP) issues with AI-generated code, it's important to consult legal experts who can clarify restrictions, guide proper attribution, and help address potential risks. Some effective strategies include refining AI models using licensed datasets and carefully filtering training data to avoid IP infringements. Taking these steps early on can help ensure compliance and minimize the chances of facing legal complications.