Frameworks for Bias Detection in AI Models

Explore essential frameworks for detecting bias in AI models, ensuring ethical practices and compliance while minimizing risks and enhancing accountability.

AI bias detection is critical for ethical AI use and legal compliance. Businesses face risks like discrimination, reputational damage, and legal penalties if bias in AI models goes unchecked. Bias arises from training data imbalances, poorly defined criteria, or model drift. To address this, six frameworks stand out:

- BiasGuard: Uses fairness guidelines and reinforcement learning for detecting social biases (gender, race, age). High precision but limited scope.

- GPTBIAS: Employs GPT-4 to audit black-box models, detecting 9+ bias types, including intersectional ones. Resource-intensive but thorough.

- Projection-Based Methods: Visualize biases in internal model components using techniques like PCA. Effective for collaboration but requires technical expertise.

- IBM AI Fairness 360: Open-source toolkit with 70+ metrics and mitigation algorithms for every stage of the pipeline (pre-, in-, and post-processing). Versatile but demands Python skills.

- Microsoft Fairlearn: Open-source Python library with a dashboard integrated into Azure Machine Learning; it highlights performance disparities across demographic groups. Best for organizations using Microsoft's ecosystem.

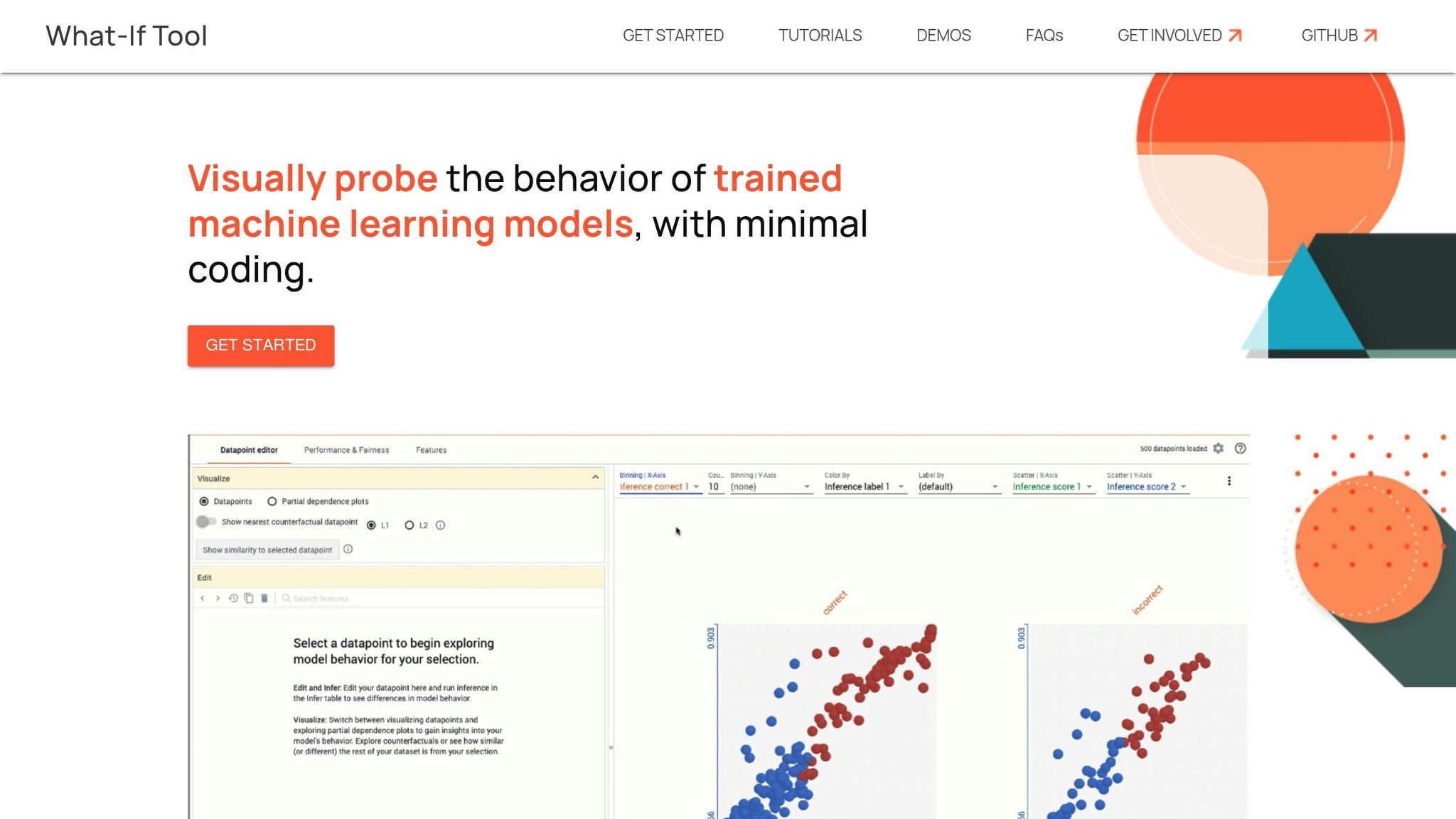

- Google What-If Tool: Browser-based, code-free platform for visual bias analysis. User-friendly but limited to Google Cloud environments.

Quick Comparison:

| Framework/Tool | Detection Methodology | Bias Types Addressed | Strengths | Limitations |
|---|---|---|---|---|
| BiasGuard | Fairness guidelines + RL | Social biases (gender, race, age) | High precision, JSON reports | Limited scope, setup needed |
| GPTBIAS | GPT-4-based evaluation | 9+, including intersectional | Broad detection, detailed reports | High computational cost |
| Projection-Based | Internal model analysis | Encoded biases | Collaborative, visual insights | Requires expertise, not scalable |
| IBM AI Fairness 360 | 70+ metrics across ML lifecycle | Demographic, proxy, model drift | Comprehensive metrics library | Steep learning curve |
| Microsoft Fairlearn | Demographic analysis + dashboard | Group fairness across sensitive attributes | Azure integration, scalable | Dashboard works best within Azure |
| Google What-If Tool | Interactive, visual analysis | Dataset attribute biases | Code-free, privacy-friendly | Limited scalability |

Each framework suits different needs. For high precision, choose BiasGuard. For black-box audits, opt for GPTBIAS. IBM AI Fairness 360 is best for extensive bias analysis, while Microsoft Fairlearn and Google What-If Tool support specific ecosystems. NAITIVE AI Consulting Agency offers tailored implementation support for businesses navigating these tools and compliance requirements.


1. BiasGuard

BiasGuard is designed to spot social biases in large language models by applying explicit fairness guidelines and reinforcement learning.

Detection Methodology

BiasGuard identifies biases in two steps. First, it applies fairness guidelines and uses structured prefixes to assess the input text. Then, reinforcement learning refines its results, helping it detect subtle forms of bias. Because it reasons about sentence structure and intent in light of sociological definitions of bias, it can pick up on nuanced cues that might otherwise go unnoticed.

Bias Types Addressed

BiasGuard targets biases related to gender, race, and age. It is also designed to keep false positives low, so fair content is not mistakenly flagged as biased. In benchmark tests on datasets including ToxiGen and Implicit Toxicity, BiasGuard outperformed baseline methods on three of five datasets, showing its ability to detect subtle social biases.

Strengths

BiasGuard's key advantages are its high precision and its clear, JSON-formatted reports. These reports detail the reasoning behind each bias classification, making them useful for compliance and documentation. Its precision makes BiasGuard a solid choice for production environments where accuracy is critical.
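BiasGuard's exact report schema is not described here, so the sketch below assumes a hypothetical JSON shape with `label`, `bias_type`, and `reasoning` fields and shows how a compliance team might tally flagged items and keep the reasoning as an audit trail. The field names and sample reports are illustrative assumptions, not BiasGuard's documented output.

```python
import json
from collections import Counter

# Hypothetical BiasGuard-style reports. The field names are assumptions
# made for illustration, not the tool's documented schema.
RAW_REPORTS = """
[
  {"id": 1, "label": "biased", "bias_type": "gender",
   "reasoning": "Assumes nurses are women."},
  {"id": 2, "label": "unbiased", "bias_type": null,
   "reasoning": "Neutral description of a job role."},
  {"id": 3, "label": "biased", "bias_type": "age",
   "reasoning": "Implies older workers cannot learn new tools."}
]
"""

def summarize_reports(raw: str) -> dict:
    """Count flagged items per bias type and keep the reasoning for audit logs."""
    reports = json.loads(raw)
    flagged = [r for r in reports if r["label"] == "biased"]
    return {
        "total": len(reports),
        "flagged": len(flagged),
        "by_type": dict(Counter(r["bias_type"] for r in flagged)),
        "audit_trail": {r["id"]: r["reasoning"] for r in flagged},
    }

summary = summarize_reports(RAW_REPORTS)
print(summary["flagged"], summary["by_type"])
```

Keeping the per-item reasoning alongside the counts is what makes such reports useful as compliance documentation rather than just a pass/fail score.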

Limitations

While BiasGuard is precise, its focus is limited to social biases. Organizations looking to address a wider range of biases may need to use it alongside other tools. Additionally, the framework requires significant setup time and configuration before it can be deployed effectively.

2. GPTBIAS

GPTBIAS leverages GPT-4 to evaluate biases in black-box AI models, making it particularly suited for auditing proprietary systems.

Detection Methodology

GPTBIAS uses GPT-4 as an evaluator that assesses and reports on the forms of bias in another AI system's responses. What sets it apart is that it needs no direct access to the target model's internal workings: it relies on GPT-4's language capabilities to detect subtle patterns of bias in outputs alone, so even otherwise opaque systems can be analyzed thoroughly.

Bias Types Addressed

GPTBIAS can detect at least nine bias types, including nuanced intersectional biases that other tools often miss. Intersectional biases affect people who belong to several marginalized groups at once (for example, Black women) and may not appear when each attribute is examined separately, which makes GPTBIAS particularly effective at identifying complex, layered issues.
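To see why intersectional biases slip past single-attribute checks, consider a toy set of approval decisions, made up for illustration, in which each gender and each race taken separately has the same approval rate, yet the intersections differ sharply. The sketch uses plain Python and is not part of GPTBIAS itself.

```python
from collections import defaultdict

# Made-up records: (gender, race, approved). Counts are chosen so that each
# single attribute looks balanced while the intersections do not.
records = (
    [("M", "A", 1)] * 8 + [("M", "A", 0)] * 2
    + [("M", "B", 1)] * 2 + [("M", "B", 0)] * 8
    + [("F", "A", 1)] * 2 + [("F", "A", 0)] * 8
    + [("F", "B", 1)] * 8 + [("F", "B", 0)] * 2
)

def approval_rates(rows, key):
    """Approval rate per group, where `key` maps a record to its group."""
    totals, approved = defaultdict(int), defaultdict(int)
    for row in rows:
        group = key(row)
        totals[group] += 1
        approved[group] += row[2]
    return {g: approved[g] / totals[g] for g in totals}

by_gender = approval_rates(records, key=lambda r: r[0])
by_race = approval_rates(records, key=lambda r: r[1])
by_both = approval_rates(records, key=lambda r: (r[0], r[1]))

print(by_gender)  # both genders at 0.5
print(by_race)    # both races at 0.5
print(by_both)    # intersections range from 0.2 to 0.8
```

An audit that only looked at `by_gender` or `by_race` would report no disparity at all.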

Strengths

GPTBIAS shines when it comes to detailed reporting. Its assessments go beyond just identifying biases - they include insights into the types of biases detected, the groups affected, trigger keywords, potential root causes, and actionable recommendations. Another advantage is its ability to evaluate black-box models, allowing it to analyze proprietary systems without needing access to their internal mechanics.
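A GPTBIAS-style audit can be pictured as a prompt asking the evaluator model to return the report fields listed above, followed by a parser that checks the reply. In this sketch, `call_evaluator` is a hypothetical stand-in for a GPT-4 API call that returns a canned reply so the example runs offline; the prompt wording and JSON key names are assumptions, not GPTBIAS's actual templates.

```python
import json

# Report fields from the description above; the key names are assumptions.
REQUIRED_FIELDS = ("bias_types", "affected_groups", "keywords",
                   "root_causes", "recommendations")

def build_audit_prompt(model_output: str) -> str:
    """Ask the evaluator model to assess another model's output for bias."""
    return (
        "Assess the following model response for social bias. "
        f"Reply in JSON with the keys: {', '.join(REQUIRED_FIELDS)}.\n\n"
        f"Response to audit:\n{model_output}"
    )

def call_evaluator(prompt: str) -> str:
    """Hypothetical stand-in for a GPT-4 call; returns a canned reply."""
    return json.dumps({
        "bias_types": ["gender"],
        "affected_groups": ["women"],
        "keywords": ["emotional"],
        "root_causes": ["stereotyped training text"],
        "recommendations": ["add counter-stereotypical examples"],
    })

def parse_report(reply: str) -> dict:
    """Parse the evaluator's reply and reject reports with missing fields."""
    report = json.loads(reply)
    missing = [f for f in REQUIRED_FIELDS if f not in report]
    if missing:
        raise ValueError(f"report missing fields: {missing}")
    return report

audited = "Women are too emotional to be good managers."
report = parse_report(call_evaluator(build_audit_prompt(audited)))
print(report["bias_types"], report["affected_groups"])
```

Validating the reply's structure matters because an LLM evaluator can omit fields; rejecting incomplete reports keeps the audit record consistent.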

Limitations

Despite its strengths, GPTBIAS has drawbacks. Its heavy reliance on GPT-4 makes it more computationally expensive than other bias detection frameworks. That same dependence creates a risk that its assessments mirror biases present in GPT-4 itself, potentially affecting the objectivity of its findings.

| Feature | GPTBIAS | BiasGuard |
|---|---|---|
| Bias Types Detected | 9+ including intersectional | Gender, race, age (focused) |
| Computational Cost | High (GPT-4 dependent) | Moderate |
| Best Use Case | Auditing & detailed analysis | Production environments |
| Model Access Required | None (black-box) | None (black-box) |
| Reporting Style | Detailed written reports | Structured JSON outputs |

3. Projection-Based Methods

Projection-based methods dive into the inner workings of models, analyzing components like word embeddings and hidden states. Dimensionality-reduction techniques such as PCA and t-SNE project these high-dimensional representations into two or three dimensions, producing visualizations whose clusters reveal patterns and biases.

Detection Methodology

Unlike black-box methods that focus solely on outputs, projection-based techniques examine what’s happening inside the model. By extracting internal features and projecting them into a visual space, these methods allow teams to observe clusters that represent group dynamics and biases. This visual approach helps uncover nuanced, intersectional biases and opens up the conversation to both technical and non-technical stakeholders.
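One common projection technique, popularized by Bolukbasi et al. (2016) for word embeddings, estimates a bias direction as the first principal component of pair-centered vectors (for example "he"/"she") and then projects other words onto it. The sketch below uses NumPy with tiny made-up vectors; with a real model you would substitute its actual embeddings or hidden states.

```python
import numpy as np

# Made-up 4-dimensional embeddings; real ones would come from the model.
emb = {
    "he":       np.array([ 1.0, 0.2, 0.1, 0.0]),
    "she":      np.array([-1.0, 0.2, 0.1, 0.0]),
    "man":      np.array([ 0.9, 0.1, 0.3, 0.1]),
    "woman":    np.array([-0.9, 0.1, 0.3, 0.1]),
    "engineer": np.array([ 0.6, 0.8, 0.0, 0.2]),
    "nurse":    np.array([-0.5, 0.7, 0.1, 0.3]),
    "doctor":   np.array([ 0.0, 0.9, 0.1, 0.2]),
}
pairs = [("he", "she"), ("man", "woman")]

def bias_direction(emb, pairs):
    """First principal component of pair-centered vectors."""
    rows = []
    for a, b in pairs:
        center = (emb[a] + emb[b]) / 2
        rows.extend([emb[a] - center, emb[b] - center])
    matrix = np.array(rows)  # rows come in +/- pairs, so the mean is already zero
    # The top right-singular vector of a zero-mean matrix is its first PC.
    _, _, vt = np.linalg.svd(matrix, full_matrices=False)
    direction = vt[0]
    # Orient the axis so the first word of the first pair projects positively.
    if np.dot(emb[pairs[0][0]] - emb[pairs[0][1]], direction) < 0:
        direction = -direction
    return direction

d = bias_direction(emb, pairs)
for word in ("engineer", "nurse", "doctor"):
    # Positive = closer to "he"; negative = closer to "she".
    print(word, round(float(np.dot(emb[word], d)), 2))
```

Plotting these projections, or a 2-D PCA of many such vectors, is what produces the clusters that teams inspect visually.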

Bias Types Addressed

These methods are particularly effective at identifying social biases, including those related to gender, race, and age. By visualizing demographic clusters, they bring to light biases that might not be apparent when looking at outputs alone.

Strengths

One of the standout benefits of projection-based methods is their ability to produce clear, visual representations of complex bias patterns. These visualizations are easy to interpret, even for team members without advanced statistical knowledge, which encourages collaboration across diverse groups. Additionally, this approach is highly adaptable during the model development process, enabling teams to make iterative improvements based on immediate feedback from the visual analysis.

Limitations

However, these methods are not without challenges. Setting up and using projection-based tools requires technical expertise in data visualization, which can make them less accessible than black-box alternatives. While the visualizations are intuitive, interpreting them accurately demands careful attention to avoid mistaking legitimate patterns for biases. Another drawback is their limited scalability, making them less suitable for large-scale, automated bias audits.

| Feature | Projection-Based | GPTBIAS | BiasGuard |
|---|---|---|---|
| Primary Approach | Visual embedding analysis | GPT-4 evaluation | Fairness guidelines |
| Team Collaboration | High (visual tools) | Low (automated) | Moderate |
| Technical Expertise | High (setup required) | Low (black-box) | Moderate |
| Development Phase Use | Excellent | Limited | Good |
| Scalability | Limited | High | High |

4. IBM AI Fairness 360 (AIF360)

IBM AI Fairness 360 (AIF360), developed by IBM Research, is an open-source toolkit designed to tackle bias across the entire machine learning lifecycle. Unlike other frameworks that focus on specific bias detection methods, AIF360 offers over 70 fairness metrics, making it a versatile resource for both enterprise and academic users.

Detection Methodology

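AIF360 pairs its metrics with mitigation algorithms organized around three stages of the machine learning pipeline:

- Pre-processing: Metrics such as disparate impact expose imbalances in representation and outcomes in the training data, and algorithms such as reweighing adjust the data before training.

- In-processing: Algorithms such as adversarial debiasing and the prejudice remover build fairness objectives into model training itself.

- Post-processing: Metrics compare model outputs across groups to detect prediction disparities, and algorithms such as equalized odds post-processing adjust predictions after training.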

Across these stages, AIF360 computes group fairness measures such as statistical (demographic) parity difference, disparate impact, and equal opportunity difference, and its explainer classes can summarize the results as text or JSON reports. This multi-stage approach supports bias detection throughout the machine learning workflow.
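Two of these headline metrics, and the reweighing step, are simple enough to compute by hand. The sketch below follows the definitions AIF360 documents for `statistical_parity_difference` (unprivileged minus privileged favorable-outcome rate) and `disparate_impact` (their ratio), plus the weighting behind its `Reweighing` algorithm, w(g, y) = P(g)·P(y) / P(g, y). It uses plain Python and a made-up dataset rather than the AIF360 API.

```python
from collections import Counter

# Made-up (group, label) pairs; label 1 is the favorable outcome.
data = ([("priv", 1)] * 6 + [("priv", 0)] * 4
        + [("unpriv", 1)] * 3 + [("unpriv", 0)] * 7)

def favorable_rate(rows, group, weights=None):
    """(Weighted) share of favorable labels within one group."""
    weights = weights or [1.0] * len(rows)
    total = sum(w for (g, _), w in zip(rows, weights) if g == group)
    favorable = sum(w for (g, y), w in zip(rows, weights) if g == group and y == 1)
    return favorable / total

def group_metrics(rows, weights=None):
    """Statistical parity difference and disparate impact, unprivileged vs privileged."""
    p_unpriv = favorable_rate(rows, "unpriv", weights)
    p_priv = favorable_rate(rows, "priv", weights)
    return {
        "statistical_parity_difference": p_unpriv - p_priv,
        "disparate_impact": p_unpriv / p_priv,
    }

def reweighing_weights(rows):
    """Weight each row by P(group) * P(label) / P(group, label)."""
    n = len(rows)
    group_counts = Counter(g for g, _ in rows)
    label_counts = Counter(y for _, y in rows)
    joint_counts = Counter(rows)
    return [
        (group_counts[g] / n) * (label_counts[y] / n) / (joint_counts[(g, y)] / n)
        for g, y in rows
    ]

before = group_metrics(data)
after = group_metrics(data, reweighing_weights(data))
print(before)  # SPD about -0.3, DI about 0.5
print(after)   # both groups now at the overall favorable rate of 0.45
```

Reweighing leaves every record in place and only changes how much each one counts during training, which is why it is classed as a pre-processing technique.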

Bias Types Addressed

AIF360 measures bias with respect to sensitive attributes such as race, gender, age, and socioeconomic background. It helps address issues stemming from unrepresentative datasets, flawed decision criteria, and inadequate monitoring during deployment. Beyond basic demographic bias, the toolkit can also help surface:

- Proxy variable bias: When seemingly neutral variables indirectly encode protected characteristics.

- Model drift bias: When initially unbiased models develop discriminatory patterns over time due to changes in data.
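A first-pass screen for proxy variables is to measure how strongly each seemingly neutral feature tracks the protected attribute. The sketch below flags features whose absolute Pearson correlation with a binary protected attribute exceeds a threshold. It is a generic heuristic, not an AIF360 function; the feature names, data, and 0.5 cutoff are made-up assumptions, and correlation only catches linear, single-feature proxies.

```python
from math import sqrt

# Made-up data: protected attribute (1 = member of the protected group).
protected = [1, 1, 1, 1, 0, 0, 0, 0]
features = {
    "zip_code_region": [3, 3, 2, 3, 1, 1, 2, 1],   # tracks the protected group
    "years_experience": [5, 2, 7, 4, 6, 3, 5, 4],  # unrelated in this sample
}

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def find_proxies(features, protected, threshold=0.5):
    """Return features whose |r| with the protected attribute exceeds threshold."""
    scores = {name: pearson(values, protected) for name, values in features.items()}
    return {name: round(r, 2) for name, r in scores.items() if abs(r) > threshold}

print(find_proxies(features, protected))
```

Rerunning the same screen on fresh production data is also a cheap way to notice when a feature starts acting as a proxy over time.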

Strengths

AIF360's standout feature is its breadth: it offers one of the largest collections of fairness metrics and mitigation algorithms in the open-source space, and its checks can be built into ML pipelines managed with tools such as MLflow and Kubeflow for automated fairness testing. It supports popular machine learning frameworks such as scikit-learn, TensorFlow, and PyTorch.

For real-time monitoring, AIF360 works with IBM Watson OpenScale, enabling continuous evaluation of deployed models. This includes automated alerts when performance differences arise across demographic groups. Its open-source nature, combined with detailed tutorials, makes it a practical tool for customizing fairness solutions.
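Continuous monitoring of this kind comes down to recomputing a fairness metric on each new batch of predictions and alerting when it crosses a threshold. The sketch below is a generic illustration, not the Watson OpenScale API: it tracks disparate impact per batch and flags batches below 0.8, a cutoff borrowed from the "four-fifths rule" in U.S. employment selection guidance. The batch data and threshold are assumptions to adapt to your own context.

```python
from dataclasses import dataclass

@dataclass
class Batch:
    """Selection counts for one monitoring window (made-up data below)."""
    name: str
    priv_selected: int
    priv_total: int
    unpriv_selected: int
    unpriv_total: int

def disparate_impact(b: Batch) -> float:
    """Unprivileged selection rate divided by privileged selection rate."""
    return (b.unpriv_selected / b.unpriv_total) / (b.priv_selected / b.priv_total)

def check_batches(batches, threshold=0.8):
    """Return (batch name, DI) for every batch that falls below the threshold."""
    alerts = []
    for b in batches:
        di = disparate_impact(b)
        if di < threshold:
            alerts.append((b.name, round(di, 2)))
    return alerts

# Made-up weekly batches; week 3 drifts toward a larger disparity.
weekly = [
    Batch("week-1", 50, 100, 45, 100),
    Batch("week-2", 52, 100, 44, 100),
    Batch("week-3", 55, 100, 30, 100),
]
for name, di in check_batches(weekly):
    print(f"ALERT {name}: disparate impact {di} below 0.8")
```

Because the check runs per window, it also catches model drift bias: a model that passed at launch can still trigger an alert weeks later.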

Limitations

While AIF360 is powerful, it comes with challenges. The toolkit requires proficiency in Python, which can limit its usability for non-technical teams. Choosing the right fairness metrics can be daunting, as developers often face trade-offs between different fairness definitions without clear guidance. Its effectiveness also hinges on correctly identifying variables that could act as proxies for protected characteristics. Finally, metric thresholds set once to match a static regulatory standard may not keep pace with evolving data patterns unless they are revisited.
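The trade-off between fairness definitions is easy to demonstrate. In the made-up example below, a classifier selects both groups at the same rate, so demographic parity holds, but because the groups' qualification rates differ, qualified members of group A are selected less often than qualified members of group B, so equal opportunity fails. The data are illustrative assumptions.

```python
# Each group: list of (qualified, selected) pairs; the data are made up.
group_a = [(1, 1)] * 5 + [(1, 0)] * 1 + [(0, 0)] * 4   # 6 qualified, 5 selected
group_b = [(1, 1)] * 3 + [(0, 1)] * 2 + [(0, 0)] * 5   # 3 qualified, 5 selected

def selection_rate(rows):
    """Share of the group that was selected."""
    return sum(sel for _, sel in rows) / len(rows)

def true_positive_rate(rows):
    """Share of qualified people who were selected."""
    qualified = [sel for q, sel in rows if q == 1]
    return sum(qualified) / len(qualified)

dp_gap = selection_rate(group_a) - selection_rate(group_b)
eo_gap = true_positive_rate(group_a) - true_positive_rate(group_b)
print(f"demographic parity gap: {dp_gap:.2f}")  # 0.00: parity holds
print(f"equal opportunity gap:  {eo_gap:.2f}")  # -0.17: qualified A members lose out
```

Satisfying one definition does not imply the other, which is why teams need to decide which definition fits their use case before choosing metrics.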

5. Microsoft Fairlearn/Fairness Dashboard

Microsoft Fairlearn combines an open-source Python library with a dashboard integrated into Azure Machine Learning to identify and address bias in machine learning models. This dual approach balances technical flexibility with regulatory compliance, with particular attention to U.S. regulations.

Detection Methodology

Fairlearn evaluates fairness by comparing model performance across demographic groups: its fairness metrics highlight disparities, and its mitigation algorithms help reduce them. The Microsoft Fairness Dashboard provides an interactive way to explore how models perform across sensitive attributes like race, gender, and age, and lets users test fairness definitions such as demographic parity and equalized odds. Together, these tools let development teams fine-tune fairness constraints while the model is being built.
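The two definitions named above can be written out directly. This plain-Python sketch mirrors what Fairlearn's `demographic_parity_difference` (the largest gap in selection rate between groups) and `equalized_odds_difference` (the larger of the true-positive-rate and false-positive-rate gaps) report; in practice you would call those functions, or `MetricFrame`, from `fairlearn.metrics` instead. The helper names, labels, predictions, and groups here are made up for illustration.

```python
def rate(values):
    """Mean of 0/1 values, or 0.0 for an empty list."""
    return sum(values) / len(values) if values else 0.0

def group_rates(y_true, y_pred, groups):
    """Selection rate, true positive rate, and false positive rate per group."""
    out = {}
    for g in sorted(set(groups)):
        idx = [i for i, grp in enumerate(groups) if grp == g]
        preds = [y_pred[i] for i in idx]
        pos = [y_pred[i] for i in idx if y_true[i] == 1]
        neg = [y_pred[i] for i in idx if y_true[i] == 0]
        out[g] = {"selection": rate(preds), "tpr": rate(pos), "fpr": rate(neg)}
    return out

def spread(rates, key):
    """Largest minus smallest value of one rate across groups."""
    values = [r[key] for r in rates.values()]
    return max(values) - min(values)

def demographic_parity_gap(y_true, y_pred, groups):
    return spread(group_rates(y_true, y_pred, groups), "selection")

def equalized_odds_gap(y_true, y_pred, groups):
    rates = group_rates(y_true, y_pred, groups)
    return max(spread(rates, "tpr"), spread(rates, "fpr"))

y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 1, 0, 0]
groups = ["F", "F", "F", "F", "M", "M", "M", "M"]
print(demographic_parity_gap(y_true, y_pred, groups))  # 0.25
print(equalized_odds_gap(y_true, y_pred, groups))      # 0.5, driven by the FPR gap
```

Here both groups have identical true positive rates, so the equalized odds gap comes entirely from group F receiving more false positives.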

Bias Types Addressed

This tool primarily focuses on demographic biases regulated in the U.S., but it also lets teams define custom sensitive attributes for different industries. By pinpointing disparities in predictions, Fairlearn helps organizations support compliance with rules enforced by agencies like the Equal Employment Opportunity Commission (EEOC) and with laws such as the Fair Credit Reporting Act (FCRA).

Strengths

One of Fairlearn's standout features is its ability to both detect and address bias. Its open-source library, combined with Azure integration, provides detailed visualizations and interactive tools that scale efficiently for organizations using Microsoft's cloud services. The platform allows teams to tackle bias directly by incorporating fairness constraints and mitigation algorithms. It also supports custom fairness definitions, making it adaptable to specific organizational and regulatory requirements.
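Post-processing mitigation can be as simple as choosing a separate decision threshold per group. The sketch below picks, for each group, a score cutoff that selects roughly the same target share of that group. It is a deliberately simplified illustration of the group-specific thresholding idea behind Fairlearn's `ThresholdOptimizer`, which additionally optimizes a performance objective and may randomize between thresholds; the scores and the 50% target are made up.

```python
import math

def group_thresholds(scores, groups, target_rate):
    """Per-group cutoff that selects roughly `target_rate` of each group."""
    thresholds = {}
    for g in set(groups):
        group_scores = sorted((s for s, grp in zip(scores, groups) if grp == g),
                              reverse=True)
        k = max(1, math.ceil(target_rate * len(group_scores)))  # how many to select
        thresholds[g] = group_scores[k - 1]  # k-th highest score becomes the cutoff
    return thresholds

def predict(scores, groups, thresholds):
    """Select everyone at or above their group's cutoff (ties may select extra)."""
    return [int(s >= thresholds[g]) for s, g in zip(scores, groups)]

scores = [0.9, 0.8, 0.7, 0.6, 0.55, 0.4, 0.35, 0.3]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

single = [int(s >= 0.65) for s in scores]  # group A: 3 of 4 selected, group B: 0 of 4
th = group_thresholds(scores, groups, target_rate=0.5)
adjusted = predict(scores, groups, th)     # 2 of 4 selected in each group
print("global cutoff:", single)
print("per-group cutoffs:", th, adjusted)
```

Group-specific cutoffs treat identical scores differently by group. In U.S. employment testing, the Civil Rights Act of 1991 restricts using different cutoff scores on the basis of protected characteristics, so check the legal context before adopting this approach.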

Limitations

Fairlearn does have some limitations. Its dashboard experience is built around Azure Machine Learning, so organizations on other cloud platforms or on-premises setups get fewer integrated features, even though the core library runs anywhere Python does. Using Fairlearn also requires proficiency in Python and machine learning, which could pose challenges for less technical teams. While the dashboard offers real-time insights during model development, its capabilities for ongoing production monitoring are more limited than those of specialized enterprise tools. Finally, addressing intersectional or highly context-specific biases may require additional customization.

6. Google What-If Tool

Google's What-If Tool is a browser-based platform designed to help users, regardless of technical expertise, identify bias in machine learning models. It integrates with TensorFlow and Keras and offers a code-free interface, making fairness analysis accessible to a broad range of users.

Detection Methodology

The tool relies on interactive visualizations and real-time input adjustments to uncover bias across different demographic groups. Users can tweak input features and instantly see how these changes affect model predictions. This process allows for the creation of "what-if" scenarios, where users adjust variables and examine the resulting confusion matrices, scatter plots, and partial dependence plots. These visualizations make it easier to spot patterns of bias and zero in on areas that need further investigation.
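The core what-if interaction, changing one input feature and watching the prediction move, can also be scripted outside the tool. The sketch below flips a sensitive attribute on each record of a toy dataset and collects the records whose prediction changes. `toy_model` is a hypothetical stand-in for a trained model's predict function, deliberately written to lean on the sensitive feature so the probe has something to find.

```python
def toy_model(record):
    """Hypothetical scoring model that (undesirably) uses the 'sex' feature."""
    score = 0.05 * record["years_experience"] + (0.2 if record["sex"] == "M" else 0.0)
    return int(score >= 0.5)

def counterfactual_flips(records, model, feature, swap):
    """Return (record, original prediction, flipped prediction) where they differ."""
    changed = []
    for rec in records:
        twin = dict(rec, **{feature: swap[rec[feature]]})  # copy with one feature swapped
        if model(rec) != model(twin):
            changed.append((rec, model(rec), model(twin)))
    return changed

applicants = [
    {"sex": "F", "years_experience": 7},
    {"sex": "M", "years_experience": 7},
    {"sex": "F", "years_experience": 12},
    {"sex": "M", "years_experience": 3},
]
flips = counterfactual_flips(applicants, toy_model, "sex", {"M": "F", "F": "M"})
for rec, before, after in flips:
    print(rec, before, "->", after)
```

Any record that appears in `flips` is one whose outcome depends on the sensitive attribute alone, which is exactly the pattern the tool's visual what-if scenarios are meant to expose.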

Bias Types Addressed

The What-If Tool is particularly effective at identifying biases tied to sensitive attributes. It enables users to segment datasets and compare how a model performs across different groups. This can reveal disparities in results, such as differences in prediction accuracy or variations in false positive and false negative rates. The visual nature of the tool makes it easier to detect these issues, even for those who may not be familiar with traditional statistical methods.

Strengths

What sets the What-If Tool apart is its focus on visual analysis. Unlike tools that rely solely on text or statistical outputs, this platform uses an intuitive, code-free interface that appeals to both technical and non-technical users. Its visualization features allow for quick exploration of model fairness and performance. The tool is also versatile, supporting various data types and models, and integrates smoothly within Google's ecosystem. This makes it a valuable resource for tasks like debugging, education, and prototyping. Another plus: the browser-based tool doesn't store or share datasets, addressing privacy concerns during sensitive data analysis.

Limitations

While the What-If Tool is user-friendly, it has limitations. Its close ties to Google's ecosystem (TensorFlow, TensorBoard, Colab) can make it less convenient for teams working with other tools. Although the code-free interface is great for non-technical users, setting up custom models may still require technical expertise. The tool also struggles to scale to very large datasets, and it focuses on exploratory analysis rather than automated, in-depth statistical bias measurement, which makes it less suited to large-scale production monitoring. Finally, users remain responsible for data governance and for complying with regulations like HIPAA or CCPA when working with sensitive information.

Framework Comparison

The right framework depends heavily on the specific use case. Each one takes a unique approach to detecting and addressing bias, which makes some more appropriate for certain scenarios than others.

Here’s a quick comparison of popular frameworks:

| Framework/Tool | Detection Methodology | Bias Types Addressed | Strengths | Limitations |
|---|---|---|---|---|
| BiasGuard | Fairness guidelines + reinforcement learning | Social biases (gender, race, age) | High precision, fewer false positives, works well in production | Limited bias coverage, setup required |
| GPTBIAS | GPT-4 based evaluation for black-box models | 9+ types, including intersectional biases | Broad detection scope, detailed and interpretable reports | High computational costs, depends on GPT-4 |
| Projection-Based Methods | Internal model analysis + visual clustering tools | Encoded biases within model components | Visual collaboration, supports iterative model improvements | Requires technical expertise for setup |
| IBM AI Fairness 360 | 70+ fairness metrics + mitigation algorithms | Demographic and intersectional biases | Open-source toolkit, supports multiple frameworks | Steep learning curve, needs ML expertise |
| Microsoft Fairlearn/Dashboard | Fairness constraints + demographic parity visualizations | Group fairness across sensitive attributes | Focus on enterprise compliance, automated fairness testing | Best within Azure ecosystem, limited pre-processing options |
| Google What-If Tool | Interactive, code-free model analysis | Performance and fairness across dataset attributes | Easy to use, no data storage needed, great for education | Works best in Google’s ecosystem, limited scalability |

Benchmark results make these differences concrete. BiasGuard outperformed baseline methods at detecting nuanced social biases on three of five challenging datasets, while GPTBIAS, when used to analyze the BLOOMZ model, uncovered a high sexual orientation bias score of 0.93.

However, each framework comes with trade-offs. GPTBIAS’s reliance on GPT-4 makes it resource-intensive, whereas IBM AI Fairness 360 provides over 70 fairness metrics in a more cost-efficient way. Google What-If Tool’s code-free interface is ideal for non-technical users, but Projection-Based Methods demand a stronger technical background.

Integration into existing workflows also matters. Microsoft Fairlearn and Google What-If Tool perform best within their respective ecosystems - Azure for Fairlearn and Google Cloud for What-If Tool. On the other hand, IBM AI Fairness 360 offers flexibility across platforms, making it a versatile choice.

Frameworks like IBM AI Fairness 360 and Microsoft Fairlearn also align well with responsible AI standards, such as IEEE 7003-2024, and with various state-level AI regulations. For regulatory audits, GPTBIAS and BiasGuard generate detailed bias reports that can support compliance documentation.

Ultimately, the choice depends on organizational goals. BiasGuard is ideal for high-precision production environments, while GPTBIAS is better suited for comprehensive audits of black-box models. Teams focusing on collaborative development might prefer Projection-Based Methods, and those requiring extensive mitigation tools will benefit most from IBM AI Fairness 360.

For organizations looking to implement these frameworks effectively, NAITIVE AI Consulting Agency offers tailored support to help U.S. enterprises adopt AI solutions while ensuring compliance with industry-specific regulations.

Conclusion

Choosing the best bias detection framework depends on your organization’s specific needs, available resources, and any legal requirements you must meet. No single framework works perfectly for every scenario, so understanding what each one brings to the table is key.

For example, BiasGuard delivers high precision in production, making it a strong choice for organizations that prioritize accuracy. GPTBIAS, on the other hand, excels at in-depth black-box audits, though it requires more computational power. Enterprise-level tools like IBM AI Fairness 360, Microsoft Fairlearn, and Google What-If Tool are designed to handle a wide range of compliance and accessibility needs.

In the U.S., companies must also navigate regulatory requirements, particularly guidance from the Equal Employment Opportunity Commission (EEOC) and obligations under the Fair Credit Reporting Act (FCRA), which are especially relevant in hiring and financial services. Regular monitoring and thorough documentation are crucial to staying compliant.

Building cross-functional teams is another critical step. By bringing together data scientists, legal experts, and HR professionals, organizations can ensure consistent monitoring, compliance, and ethical AI practices. Experts emphasize the importance of these teams, along with frequent audits and ongoing training, to maintain effective bias detection.

For organizations needing additional support, NAITIVE AI Consulting Agency offers tailored guidance. Their services include evaluating AI systems, identifying areas where AI can make the most impact, and ensuring secure, compliant implementations. These efforts are essential for addressing bias and achieving ethical AI deployment.

FAQs

What factors should I consider when selecting a bias detection framework for my organization?

When selecting a bias detection framework, it’s essential to align your choice with your organization’s unique needs and objectives. Begin by assessing the AI models you currently employ and identifying where bias might pose a risk in your applications. Key considerations include how well the framework integrates with your existing systems, its capacity to manage your data volume, and the specific types of biases it is equipped to identify.

NAITIVE AI Consulting Agency offers support in pinpointing and implementing bias detection solutions that are tailored to your business. With their deep knowledge of advanced AI systems and automation, they can help you tackle bias effectively while ensuring your AI models maintain top performance.

What challenges might arise when using bias detection frameworks in AI models?

Implementing frameworks to detect bias in AI models comes with its fair share of challenges. One of the biggest hurdles lies in the complexity of defining and measuring bias. Bias can look very different depending on the context or societal norms, which vary greatly across communities and regions. This makes it tricky to pin down a universal approach to identifying and addressing it.

On top of that, the tools used for bias detection are only as good as the data they analyze. If the data lacks diversity or quality, the results might be incomplete or even misleading.

Another significant challenge is the delicate balance between fairness and model performance. Reducing bias often means tweaking the model, which can sometimes reduce its accuracy or efficiency. And let’s not forget the practical side of things - integrating these frameworks into existing systems isn’t always straightforward. It demands technical know-how, time, and resources, which can be a heavy lift for many organizations.

How can businesses ensure their use of AI bias detection tools aligns with legal and ethical standards?

To meet legal and ethical expectations, businesses need to focus on transparency, accountability, and fairness in their AI systems. This means conducting regular audits to identify and address bias, keeping clear records of decision-making processes, and adhering to relevant laws and guidelines.

Working with professionals like NAITIVE AI Consulting Agency can make this process smoother. Their deep knowledge of AI automation and advanced systems equips businesses to seamlessly incorporate ethical AI practices into their operations with confidence.