AI Risk Management: Role of Human Oversight

Covers HITL, HOTL, and audit-based oversight, plus regulatory rules, governance, tools, and metrics to reduce AI bias, errors, and safety risks.

Artificial intelligence (AI) is transforming industries, but it brings risks such as bias, errors, and opaque decision-making. Human oversight is essential to addressing these issues: it helps keep AI systems safe and ethical, and it is central both to complying with regulations such as the EU AI Act and to following voluntary frameworks such as the NIST AI Risk Management Framework. Key takeaways include:

- AI Risks: Automation bias and loss of context can lead to harmful outcomes.

- Oversight Models:

  - Human-in-the-Loop (HITL): Humans actively approve AI decisions (e.g., surgical robots).

  - Human-on-the-Loop (HOTL): AI operates independently but is monitored by humans (e.g., autonomous vehicles).

  - Human-out-of-the-Loop: AI operates autonomously, with audits ensuring accountability (e.g., spam filters).

- Frameworks: The EU AI Act mandates oversight for high-risk systems, while NIST AI RMF offers voluntary guidance for proportional human involvement.

- Implementation: Effective oversight requires clear governance, technical tools such as kill-switches and audit logs, and well-trained reviewers who can resist automation bias.


Frameworks and Standards for Human Oversight

Regulatory and industry frameworks provide diverse guidance on implementing human oversight in AI systems. Let's break down some of the key approaches, starting with NIST's consensus-driven model.

NIST AI Risk Management Framework (AI RMF)

Introduced in early 2023, the NIST AI Risk Management Framework is a voluntary framework for managing AI risks. It was developed collaboratively over 18 months with input from more than 240 organizations across industry, academia, and government, and it frames AI as a socio-technical system in which human judgment plays a critical role.

The framework is built around four core functions:

- Govern: Define roles and establish accountability.

- Map: Identify the operational context of the AI system.

- Measure: Monitor fairness, bias, and other metrics.

- Manage: Implement mitigation strategies, including human-in-the-loop processes.

One of its central principles is proportional oversight - human involvement should align with the level of risk. For example, high-stakes applications like threat detection demand rigorous review, while lower-risk tasks may require less intervention. The framework also stresses the importance of clear documentation, outlining the system's limitations and specifying when human intervention is needed.
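To make proportional oversight concrete, here is a minimal sketch that maps a risk level to the oversight models described later in this article. The three-level scale, `RiskLevel`, and `required_oversight` are hypothetical; NIST does not prescribe any particular mapping or code.

```python
from enum import Enum


class RiskLevel(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical mapping: the higher the risk, the more human involvement.
OVERSIGHT_BY_RISK = {
    RiskLevel.LOW: "audit",  # humans review decisions after the fact
    RiskLevel.MEDIUM: "human-on-the-loop",  # humans monitor and can halt
    RiskLevel.HIGH: "human-in-the-loop",  # humans approve before execution
}


def required_oversight(risk: RiskLevel) -> str:
    """Return the oversight model proportional to the given risk level."""
    return OVERSIGHT_BY_RISK[risk]


# A threat-detection system would be classified HIGH during the Map step.
print(required_oversight(RiskLevel.HIGH))  # human-in-the-loop
```

In practice the risk level would come out of the Map function's context analysis; the sketch only shows that the lookup, once defined, should be explicit and documented.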

In mid-2024, NIST expanded its guidance by releasing the Generative AI Profile (NIST AI 600-1), which addresses risks specific to generative models.

EU AI Act and Human Oversight

Unlike NIST's voluntary framework, the EU AI Act makes human oversight mandatory for high-risk AI systems. The Act entered into force on August 1, 2024, and most obligations for high-risk systems, including Article 14 on human oversight, apply from August 2, 2026. Article 14 requires that these systems be designed so that people can effectively oversee them while they are in use.

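Article 14 specifies what a high-risk system must enable its human overseers to do. They must be able to:

- Understand the AI system's capabilities and limitations and monitor its operation.

- Stay aware of automation bias and interpret outputs correctly.

- Decide not to use the system, or disregard, override, or reverse its output, when necessary.

Separately, Article 26 requires deployers to assign oversight to people with the necessary competence, training, and authority.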

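Overseers must also be able to "intervene in the operation of the high-risk AI system or interrupt the system through a 'stop' button or a similar procedure that allows the system to come to a halt in a safe state." For remote biometric identification, the Act goes further with what amounts to a two-person rule: no action or decision may be taken on the basis of an identification unless it has been separately verified and confirmed by at least two people with the necessary competence, training, and authority (with limited exceptions for law enforcement, migration, border control, and asylum).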
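The two-person rule can be enforced as a simple gate in software. This is a minimal sketch under stated assumptions: `Verification` and `may_act_on_match` are hypothetical names, "qualified" stands in for whatever competence, training, and authority checks an organization uses, and the statutory exceptions are not modeled. The Act does not prescribe any implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Verification:
    reviewer_id: str
    qualified: bool  # has the required competence, training, and authority
    confirmed: bool  # reviewer separately confirmed the identification


def may_act_on_match(verifications: list[Verification], minimum: int = 2) -> bool:
    """Allow action only if enough distinct qualified reviewers confirmed the match."""
    confirmers = {
        v.reviewer_id for v in verifications if v.qualified and v.confirmed
    }
    return len(confirmers) >= minimum


checks = [
    Verification("analyst-a", qualified=True, confirmed=True),
    Verification("analyst-a", qualified=True, confirmed=True),  # same person twice
    Verification("trainee-b", qualified=False, confirmed=True),  # not qualified
]
print(may_act_on_match(checks))  # False: only one distinct qualified confirmer
```

Counting distinct reviewer IDs, rather than confirmations, prevents one person from satisfying the rule by confirming twice.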

The Act also includes enforcement measures, such as the right to lodge a complaint with a market surveillance authority (Article 85), the right to an explanation of certain decisions based on high-risk AI outputs (Article 86), and steep administrative fines for non-compliance (Article 99).

GDPR and Automation Oversight

The General Data Protection Regulation (GDPR), which predates both the NIST framework and the EU AI Act, laid the groundwork for human oversight of automated decision-making. Article 22 gives individuals the right not to be subject to a decision based solely on automated processing that produces legal effects or similarly significantly affects them. Where such decisions are permitted, organizations are required to provide safeguards, including:

- Competent human review.

- Transparent logic behind decisions.

- Clear explanations of outcomes.

These frameworks collectively highlight the importance of embedding human oversight into AI systems to ensure responsible and ethical operations.

| Framework | Nature | Primary Focus | Key Requirement |
|---|---|---|---|
| NIST AI RMF | Voluntary and collaborative | Building trust throughout the AI lifecycle | Define roles and document system limitations |
| EU AI Act | Mandatory for high-risk AI | Protecting health, safety, and fundamental rights | Ensure human intervention (e.g., a "stop" button) |
| GDPR | Mandatory for personal data processing | Safeguarding individual rights in automation | Provide meaningful human review and clear explanations |

These frameworks reinforce the necessity of human oversight in AI, ensuring a balance between technological efficiency and human responsibility. For organizations navigating these requirements, NAITIVE AI Consulting Agency offers tailored solutions to integrate these standards effectively.

Models of Human Oversight in AI Systems

Three Models of Human Oversight in AI Systems: HITL, HOTL, and Audit-Based Approaches

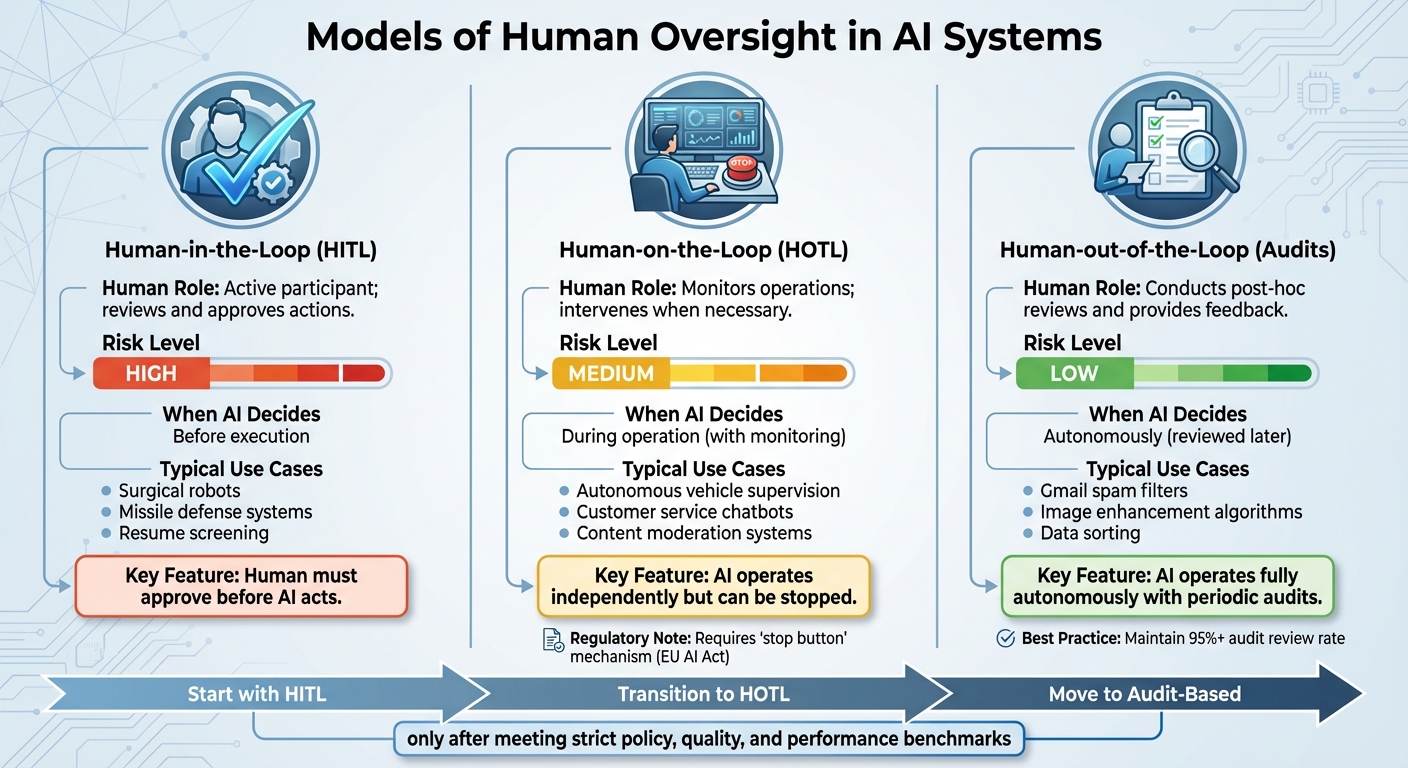

Choosing the right oversight model is critical. It ensures human involvement matches the risks and autonomy levels of an AI system. The three main models - Human-in-the-Loop (HITL), Human-on-the-Loop (HOTL), and Human-out-of-the-Loop with Audits - each serve different operational needs. These models lay the groundwork for the oversight processes covered in the next section.

Human-in-the-Loop (HITL) Systems

In HITL systems, humans play an active role, either selecting from AI-generated options or approving a single AI recommendation before execution. This model is essential for high-risk scenarios, such as surgical robotics, missile defense, and resume screening, where errors can have serious consequences.

"Solving AI risks with a HITL requires: clearly defining the applicable loop, clearly specifying the underlying principles to drive oversight... and having proper metrics to assess AI-enabled results." - Orrie Dinstein, Global Chief Privacy Officer, Marsh McLennan

HITL also fits regulatory requirements for high-stakes decisions, some of which call for verification by more than one person, such as the EU AI Act's two-person rule for remote biometric identification.
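The defining property of HITL is that nothing executes until a human chooses. A minimal sketch, assuming a hypothetical `hitl_decide` helper with callbacks standing in for the review interface and the action executor; a single recommendation is just the one-option case.

```python
from typing import Callable, Optional, Sequence


def hitl_decide(
    options: Sequence[str],
    human_choice: Callable[[Sequence[str]], Optional[int]],
    execute: Callable[[str], None],
) -> Optional[str]:
    """Execute an AI-proposed option only after a human selects it.

    `human_choice` returns the index of the chosen option, or None to reject all.
    """
    choice = human_choice(options)
    if choice is None:
        return None  # rejected: nothing runs without human approval
    if not 0 <= choice < len(options):
        raise ValueError("reviewer selected an option that does not exist")
    selected = options[choice]
    execute(selected)
    return selected


executed: list[str] = []
result = hitl_decide(
    ["shortlist candidate", "reject candidate"],
    human_choice=lambda opts: 0,  # stand-in for a real review UI
    execute=executed.append,
)
print(result, executed)  # shortlist candidate ['shortlist candidate']
```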

Human-on-the-Loop (HOTL) Systems

HOTL systems operate autonomously but are monitored by humans who can step in to stop operations if necessary. This model suits medium-risk applications where the AI performs reliably and requiring human approval for every action would slow operations unnecessarily. Examples include autonomous vehicle supervision, customer service chatbots, and content moderation systems.

Unlike HITL, the AI keeps operating unless a human intervenes. When a HOTL system is classified as high-risk under the EU AI Act, it must include a "stop" button or similar procedure that brings it to a halt in a safe state.
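A stop button for an on-the-loop system can be as simple as a shared flag the worker checks before every action. This sketch uses Python's `threading.Event`, which lets a separate thread (such as a monitoring UI) set the flag safely; `run_on_the_loop` and `human_monitor` are hypothetical names, and a production system would also need to bring itself to a safe state, not just stop looping.

```python
import threading
from typing import Callable, Iterable


def run_on_the_loop(
    items: Iterable[str],
    act: Callable[[str], str],
    stop: threading.Event,
    monitor: Callable[[str, str], None],
) -> list[str]:
    """Process items autonomously; a human monitor can halt via the stop event."""
    results: list[str] = []
    for item in items:
        if stop.is_set():  # the "stop button" has been pressed
            break
        outcome = act(item)
        results.append(outcome)
        monitor(item, outcome)  # human-facing feed; may call stop.set()
    return results


stop_button = threading.Event()


def human_monitor(item: str, outcome: str) -> None:
    # Stand-in for a person watching a dashboard who halts on an anomaly.
    if outcome == "flagged":
        stop_button.set()


processed = run_on_the_loop(
    ["msg1", "spam!!", "msg3"],
    act=lambda m: "flagged" if "!!" in m else "ok",
    stop=stop_button,
    monitor=human_monitor,
)
print(processed)  # ['ok', 'flagged']
```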

Human-out-of-the-Loop with Audits

In this model, the AI operates fully autonomously during decision-making, while human involvement comes later through audits and feedback loops. This approach works well for low-risk tasks, where constant monitoring isn't practical or necessary. Common examples include Gmail spam filters, image enhancement tools, and data sorting algorithms.

To ensure accountability, these systems should keep detailed logs and undergo regular audits. According to The Pedowitz Group, effective oversight means completing at least 95% of scheduled reviews on time (review cadence adherence).
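One way to track a 95% target is as review cadence adherence: the share of scheduled audit reviews completed on or before their due date. That interpretation, along with `ScheduledReview` and `cadence_adherence`, is an assumption for illustration.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class ScheduledReview:
    due: date
    completed: Optional[date]  # None if the review never happened


def cadence_adherence(reviews: list[ScheduledReview]) -> float:
    """Share of scheduled audit reviews completed on or before their due date."""
    if not reviews:
        raise ValueError("no reviews were scheduled")
    on_time = sum(
        1 for r in reviews if r.completed is not None and r.completed <= r.due
    )
    return on_time / len(reviews)


reviews = [
    ScheduledReview(date(2025, 1, 31), date(2025, 1, 30)),
    ScheduledReview(date(2025, 2, 28), date(2025, 3, 3)),  # late
    ScheduledReview(date(2025, 3, 31), date(2025, 3, 31)),
    ScheduledReview(date(2025, 4, 30), None),  # missed
]
rate = cadence_adherence(reviews)
print(f"{rate:.0%}", "meets target" if rate >= 0.95 else "below 95% target")
# 50% below 95% target
```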

| Model | Human Role | Risk Level | Typical Use Case |
|---|---|---|---|
| Human-in-the-Loop (HITL) | Active participant; reviews and approves actions | High | Surgical robots, missile defense systems, resume screening |
| Human-on-the-Loop (HOTL) | Monitors operations; intervenes when necessary | Medium | Autonomous vehicle supervision, customer service chatbots, content moderation |
| Human-out-of-the-Loop (Audits) | Conducts post-hoc reviews and provides feedback | Low | Gmail spam filters, image enhancement algorithms, data sorting |

The choice of oversight model depends largely on whether the AI system makes "consequential decisions," meaning those with financial, legal, or health-related impacts. Starting with HITL is often the safest option; move to more autonomous models only after the system meets strict policy, quality, and performance benchmarks.
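Graduating from HITL toward more autonomy can be made an explicit, rule-based step. In this sketch the ladder, the benchmark names, and their thresholds are hypothetical (the thresholds echo targets quoted later in this article); `next_model` moves at most one rung at a time and only if every benchmark passes.

```python
import operator

# Hypothetical ladder, from most to least human involvement.
LADDER = ["human-in-the-loop", "human-on-the-loop", "audit"]

# Hypothetical promotion benchmarks: metric -> (comparison, threshold).
BENCHMARKS = {
    "policy_pass_rate": (operator.ge, 0.99),
    "escalation_rate": (operator.le, 0.10),
    "audit_completeness": (operator.ge, 1.0),
}


def meets_benchmarks(metrics: dict[str, float]) -> bool:
    """True only if every benchmark is present and satisfied."""
    return all(
        name in metrics and check(metrics[name], threshold)
        for name, (check, threshold) in BENCHMARKS.items()
    )


def next_model(current: str, metrics: dict[str, float]) -> str:
    """Step one rung toward autonomy if all benchmarks pass; otherwise stay put."""
    rung = LADDER.index(current)
    if meets_benchmarks(metrics) and rung + 1 < len(LADDER):
        return LADDER[rung + 1]
    return current


print(next_model("human-in-the-loop", {
    "policy_pass_rate": 0.995, "escalation_rate": 0.08, "audit_completeness": 1.0,
}))  # human-on-the-loop
```

Treating a missing metric as a failure keeps the gate conservative: a system cannot be promoted on data nobody collected.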

How to Implement Human Oversight in AI

Putting human oversight into practice requires clear governance structures, technical safeguards, and disciplined operational processes. The Pedowitz Group sums up the approach in a formula: "Oversight = clear ownership + controllable autonomy + complete observability". These three elements must work together to manage AI risks effectively, and specialized AI consulting services can help organizations tailor them to their specific operational needs.

Building Oversight Governance

To establish effective oversight, organizations need strong governance as the foundation. Start by assigning a dedicated human owner to each AI workflow. Using a RACI (Responsible, Accountable, Consulted, Informed) matrix can help define these roles clearly, avoiding the pitfalls of "shared responsibility" where no one takes charge when issues arise. Document these responsibilities in an Oversight Charter, which outlines the roles of oversight committees, cross-functional teams, and the criteria for advancing AI systems to higher autonomy levels.
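A RACI matrix can also be checked automatically for the "shared responsibility" problem. This sketch assumes a hypothetical matrix format (workflow mapped to person and role letter) and flags any workflow that does not have exactly one Accountable owner.

```python
from collections import Counter

# Hypothetical RACI matrix: workflow -> {person or team: role letter}.
RACI = {
    "invoice-triage-agent": {"dana": "A", "lee": "R", "legal": "C", "ops": "I"},
    "chat-support-bot": {"sam": "R", "kim": "R", "cx-team": "I"},  # no owner
}


def ownership_gaps(raci: dict[str, dict[str, str]]) -> list[str]:
    """Return workflows that do not have exactly one Accountable owner."""
    gaps = []
    for workflow, roles in raci.items():
        accountable = Counter(roles.values())["A"]  # 0 if nobody is "A"
        if accountable != 1:
            gaps.append(workflow)
    return gaps


print(ownership_gaps(RACI))  # ['chat-support-bot']
```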

Set up autonomy tiers that align with your organization's risk tolerance. A common framework includes four levels:

- Assist: Human-led operations

- Execute: AI-led processes with approval gates

- Optimize: AI-led actions with ongoing monitoring

- Orchestrate: High autonomy under strategic oversight

Each tier should have distinct approval processes. For instance, high-risk actions may require manual reviews or multi-approver sign-offs, while low-risk tasks can rely on automated checks.
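The four tiers and their approval processes can be encoded as policy so they are applied consistently. The sign-off counts below are a hypothetical policy for illustration, not a standard; `Tier` and `approvals_required` are illustrative names.

```python
from enum import Enum


class Tier(Enum):
    ASSIST = 1  # human-led operations
    EXECUTE = 2  # AI-led processes with approval gates
    OPTIMIZE = 3  # AI-led actions with ongoing monitoring
    ORCHESTRATE = 4  # high autonomy under strategic oversight


def approvals_required(tier: Tier, high_risk: bool) -> int:
    """Hypothetical policy: human sign-offs needed before an action runs."""
    if high_risk:
        return 2  # multi-approver sign-off, regardless of tier
    if tier in (Tier.ASSIST, Tier.EXECUTE):
        return 1  # a human initiates or approves each action
    return 0  # automated checks only; humans monitor or audit


print(approvals_required(Tier.OPTIMIZE, high_risk=False))  # 0
```

Checking the risk flag first means a high-risk action never inherits a lighter process just because its workflow sits in a higher autonomy tier.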

It's equally important to ensure that human reviewers have independent reporting authority, allowing them to challenge AI decisions without fear of retaliation. This independence should be built into job descriptions and organizational policies. To maintain discipline, aim to complete at least 95% of scheduled reviews on time (review cadence adherence).

Once governance structures are in place, the focus shifts to implementing technical tools that enforce these protocols.

Technical Tools for Oversight

Technical tools form the backbone of any oversight framework. Every AI system should include a kill-switch paired with a rollback playbook for quick recovery. The goal is to achieve a Mean Time to Halt of ≤ 2 minutes from detection to activation. Additionally, automated policy validators act as a safety net, ensuring legal, brand, and consent compliance before finalizing AI actions.
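A kill-switch is most useful when it also measures itself. This hypothetical `KillSwitch` class sets a halt flag, runs a rollback callback, and records the time from detection to halt so drills and incidents can be compared against the 2-minute (120-second) target. Mean Time to Halt would then be the average of `time_to_halt()` across those events.

```python
import time
from typing import Callable, Optional


class KillSwitch:
    """Halts an AI workflow and runs a rollback playbook (hypothetical design)."""

    def __init__(self, rollback: Callable[[], None]) -> None:
        self._rollback = rollback
        self.halted = False
        self.detected_at: Optional[float] = None
        self.halted_at: Optional[float] = None

    def record_detection(self) -> None:
        # Called when monitoring first flags a problem.
        self.detected_at = time.monotonic()

    def activate(self) -> None:
        self.halted = True  # workers are expected to check this before acting
        self._rollback()  # revert to the last known-good state
        self.halted_at = time.monotonic()

    def time_to_halt(self) -> float:
        """Seconds from detection to completed halt and rollback."""
        if self.detected_at is None or self.halted_at is None:
            raise RuntimeError("no completed detection-to-halt cycle")
        return self.halted_at - self.detected_at


events: list[str] = []
switch = KillSwitch(rollback=lambda: events.append("restored model v1.4"))
switch.record_detection()
switch.activate()
print(switch.halted, events, switch.time_to_halt() <= 120)
# True ['restored model v1.4'] True
```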

Audit traces are vital for accountability, logging every decision with details like inputs, sources, tool calls, costs, and versions tied to a specific case record. The target here is 100% audit completeness. For high-risk systems, Human-Machine Interface (HMI) tools are essential. These tools allow human operators to understand the system's limitations, identify anomalies, and override or reverse decisions when necessary.
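Audit completeness can be measured directly from the trace records. This sketch assumes a hypothetical `AuditTrace` record whose fields mirror the items listed above (case record, inputs, sources, tool calls, cost, version); a trace counts as complete only if every field was recorded, and an empty list still counts as recorded.

```python
from dataclasses import dataclass
from typing import Any, Optional

REQUIRED = ("case_id", "inputs", "sources", "tool_calls", "cost_usd", "model_version")


@dataclass
class AuditTrace:
    case_id: Optional[str] = None
    inputs: Optional[dict[str, Any]] = None
    sources: Optional[list[str]] = None
    tool_calls: Optional[list[str]] = None
    cost_usd: Optional[float] = None
    model_version: Optional[str] = None


def is_complete(trace: AuditTrace) -> bool:
    """A field set to None means it was never logged."""
    return all(getattr(trace, name) is not None for name in REQUIRED)


def audit_completeness(traces: list[AuditTrace]) -> float:
    if not traces:
        raise ValueError("no decisions logged")
    return sum(is_complete(t) for t in traces) / len(traces)


traces = [
    AuditTrace("case-001", {"query": "refund?"}, ["kb/refunds"], ["lookup_order"], 0.004, "v2.1"),
    AuditTrace("case-002", {"query": "cancel"}, [], ["cancel_order"], 0.003, None),  # no version
]
print(audit_completeness(traces))  # 0.5
```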

| Oversight Tool | Function | Target Metric |
|---|---|---|
| Kill-Switch | Instant stop and revert | Mean Time to Halt ≤ 2 minutes |
| Policy Validators | Automated compliance checks | Policy Pass Rate ≥ 99% |
| Audit Traces | Complete decision logs | Audit Completeness = 100% |
| Approval Gates | Human review flows | Escalation Rate ≤ 10% |
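The targets in this table translate naturally into an automated scorecard. A minimal sketch, with hypothetical metric keys; a metric that was not reported counts as a failure rather than a pass.

```python
import operator

# Targets from the table above: metric -> (comparison, threshold).
TARGETS = {
    "mean_time_to_halt_min": (operator.le, 2.0),
    "policy_pass_rate": (operator.ge, 0.99),
    "audit_completeness": (operator.ge, 1.0),
    "escalation_rate": (operator.le, 0.10),
}


def scorecard(observed: dict[str, float]) -> dict[str, bool]:
    """Return pass/fail per metric; a missing metric counts as a failure."""
    return {
        name: name in observed and check(observed[name], target)
        for name, (check, target) in TARGETS.items()
    }


week = {"mean_time_to_halt_min": 1.5, "policy_pass_rate": 0.992,
        "audit_completeness": 0.98, "escalation_rate": 0.07}
print([name for name, ok in scorecard(week).items() if not ok])  # ['audit_completeness']
```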

With these tools in place, organizations can refine their oversight through well-defined operational processes.

Operational Processes for Oversight

Begin new AI workflows in "shadow" or "assist" mode, where AI outputs are compared against human decisions before granting execution authority. During the early stages, use weekly oversight scorecards to monitor performance, transitioning to monthly reviews once the system stabilizes. When assessing AI systems for promotion or rollback, freeze the system version to ensure data consistency.
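In shadow mode, the AI's decision is logged but never executed, so the natural measure is how often it agrees with the human decision that actually ran. This sketch assumes a hypothetical `ShadowCase` record and scopes the calculation to one frozen model version, so results from different versions are never mixed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ShadowCase:
    case_id: str
    model_version: str
    ai_decision: str  # logged but never executed in shadow mode
    human_decision: str  # the decision that actually took effect


def agreement_rate(cases: list[ShadowCase], frozen_version: str) -> float:
    """Share of cases where the AI matched the human, for one frozen version."""
    scoped = [c for c in cases if c.model_version == frozen_version]
    if not scoped:
        raise ValueError(f"no shadow cases for version {frozen_version}")
    matches = sum(c.ai_decision == c.human_decision for c in scoped)
    return matches / len(scoped)


cases = [
    ShadowCase("c1", "v3", "approve", "approve"),
    ShadowCase("c2", "v3", "deny", "approve"),
    ShadowCase("c3", "v3", "approve", "approve"),
    ShadowCase("c4", "v4", "deny", "deny"),  # different version, excluded
]
print(f"{agreement_rate(cases, 'v3'):.2f}")  # 0.67
```

Agreement alone is a rough signal; disagreements such as `c2` are worth reviewing case by case, since the human is not always the one who is right.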

Address automation bias by training human reviewers to critically evaluate AI outputs. For example, use "mystery shopping" exercises - feeding the AI misleading information - to test whether reviewers can identify and challenge incorrect outputs. Document every override decision along with its rationale to improve system training and accountability. Aim for a sensitive-step escalation rate of ≤ 10%, which indicates readiness for higher autonomy levels.
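Override documentation and the escalation-rate target can share one record type. In this sketch, the hypothetical `ReviewRecord` refuses to be created for an override without a rationale, and `sensitive_escalation_rate` computes the share of sensitive steps that were escalated to a human.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewRecord:
    step_id: str
    sensitive: bool  # step touches money, legal, health, or similar
    escalated: bool  # routed to a human reviewer
    overridden: bool = False
    rationale: str = ""

    def __post_init__(self) -> None:
        # Every override must carry a documented rationale.
        if self.overridden and not self.rationale.strip():
            raise ValueError(f"override on {self.step_id} has no rationale")


def sensitive_escalation_rate(records: list[ReviewRecord]) -> float:
    sensitive = [r for r in records if r.sensitive]
    if not sensitive:
        raise ValueError("no sensitive steps recorded")
    return sum(r.escalated for r in sensitive) / len(sensitive)


records = [
    ReviewRecord("s1", sensitive=True, escalated=False),
    ReviewRecord("s2", sensitive=True, escalated=True, overridden=True,
                 rationale="Cited an expired refund policy"),
    ReviewRecord("s3", sensitive=False, escalated=False),
    *[ReviewRecord(f"s{i}", sensitive=True, escalated=False) for i in range(4, 12)],
]
print(sensitive_escalation_rate(records))  # 0.1
```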

"Human oversight shall aim to prevent or minimise the risks to health, safety or fundamental rights that may emerge when a high-risk AI system is used." – EU AI Act, Article 14

Conduct monthly drills to test the kill-switch and rollback procedures, ensuring teams are prepared for real-world failures. Formal review schedules and re-review processes should also be in place to catch and correct mistakes made by reviewers who may deviate from established protocols.

Common Challenges in Human Oversight

Even with well-designed frameworks, organizations often face hurdles in ensuring effective human oversight. These obstacles can reveal gaps that persist even when systems appear to be well-structured.

Addressing Automation Bias

One significant issue is miscalibrated trust, which can take two forms: over-reliance on AI systems (automation bias) or excessive skepticism toward them. Both tendencies can erode the quality of oversight. When people trust an AI system too much, they may reduce their monitoring efforts, a pattern known as complacency.

A more subtle challenge is deceptive epistemic access, where explainability tools provide misleading or incomplete information. This can create a false sense of confidence, making it harder to identify errors.

To diagnose these biases, organizations can use Signal Detection Theory (SDT). This approach measures oversight performance by separating two factors: sensitivity (how well reviewers detect errors) and response bias (their threshold for reporting an error). By analyzing hit rates and false alarms, teams can pinpoint whether problems stem from skill gaps or poor decision thresholds.
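The standard SDT measures are sensitivity d' = z(H) - z(F) and criterion c = -(z(H) + z(F)) / 2, where H is the hit rate, F the false-alarm rate, and z the inverse of the standard normal CDF. Here a "signal" is an AI output that is actually wrong: a hit means the reviewer flagged a real error, and a false alarm means they flagged a correct output. The sketch below uses Python's `statistics.NormalDist`; the log-linear correction (+0.5 / +1) that keeps rates away from 0 and 1 is one common choice, assumed here rather than taken from the source.

```python
from statistics import NormalDist


def sdt_metrics(hits: int, misses: int, false_alarms: int,
                correct_rejections: int) -> tuple[float, float]:
    """Return (d_prime, criterion_c) for one reviewer.

    Low d' suggests a skill gap; positive c means the reviewer rarely flags
    outputs (consistent with over-reliance), negative c means over-flagging.
    """
    z = NormalDist().inv_cdf
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    d_prime = z(hit_rate) - z(fa_rate)
    criterion = -0.5 * (z(hit_rate) + z(fa_rate))
    return d_prime, criterion


# Hypothetical counts from seeded-error exercises.
for name, counts in {"reviewer A": (18, 2, 5, 75), "reviewer B": (10, 10, 1, 79)}.items():
    d, c = sdt_metrics(*counts)
    print(f"{name}: d'={d:.2f}, c={c:.2f}")
```

Reviewer B misses half the seeded errors but almost never raises a false alarm, which shows up as a strongly positive criterion: the problem is as much a reluctance to flag as a detection skill gap.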

The EU AI Act addresses this issue directly: high-risk AI systems must be designed so that overseers can remain aware of automation bias and interpret outputs correctly. For remote biometric identification, the Act requires verification by at least two qualified individuals before any action is taken. Organizations should also track how often, and why, humans override AI outputs. This feedback loop can improve both the AI system and the oversight process.

These challenges highlight the importance of assembling oversight teams with diverse expertise, which leads to the next point.

Managing Expertise Gaps

Effective oversight demands a deep understanding of both AI systems and the contexts in which they operate. As Sarah Sterz and her team at Saarland University explain:

For human oversight to be effective, the human overseer has to have... suitable epistemic access to relevant aspects of the situation.

The problem is that oversight often requires interdisciplinary collaboration. For example, domain experts (like doctors, lawyers, or compliance officers) are needed to evaluate whether AI outputs make sense in context, while technical experts monitor system performance and identify malfunctions.

| Strategy | Implementation Method | Objective |
|---|---|---|
| Interdisciplinary Teams | Combine domain experts with technical monitors | Ensure technical accuracy and contextual relevance |
| Epistemic Access | Provide tools to interpret AI reasoning and limitations | Detect subtle failures |
| Redundancy | Require verification by at least two qualified individuals for high-risk tasks | Reduce individual error and bias |
| Performance Audits | Conduct "mystery shopping" and regular reviews | Maintain vigilance and prevent automation bias |

The Information Commissioner's Office stresses that:

Human reviewers have appropriate knowledge, experience, authority and independence to challenge decisions.

This means training reviewers to interpret AI outputs accurately and identify failures. They also need to be equipped to recognize security threats, such as adversarial attacks or data poisoning, which can manipulate AI behavior to mislead human monitors.

While addressing expertise gaps is critical, organizations must also balance thorough oversight with maintaining operational efficiency.

Balancing Efficiency with Oversight

Thorough human review can slow processes, potentially offsetting the efficiency gains AI brings. As Boston Consulting Group (BCG) puts it:

Human oversight is time well spent - usually. An all-in, always-on, leave-no-output-unturned approach can cancel out all efficiency gains that a GenAI system can bring.

The key is risk-differentiated oversight. High-risk decisions should undergo rigorous review, while low-risk tasks can proceed with automated checks. For instance, tiered approval systems allow low-risk actions to be automated after passing policy checks, while high-risk ones are escalated to human reviewers with clear timelines.
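Risk-differentiated routing with review deadlines might look like the sketch below. The risk labels, the deadlines in `REVIEW_SLA`, and the choice to block (rather than escalate) actions that fail policy checks are all hypothetical policy decisions made for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical review deadlines per risk level.
REVIEW_SLA = {"medium": timedelta(hours=24), "high": timedelta(hours=4)}


@dataclass
class Routing:
    action: str
    decision: str  # "auto-execute", "escalate", or "block"
    review_due: Optional[datetime] = None


def route(action: str, risk: str, policy_passed: bool, now: datetime) -> Routing:
    """Auto-run low-risk actions that pass policy; escalate the rest with a deadline."""
    if not policy_passed:
        return Routing(action, "block")  # failed automated checks never run
    if risk == "low":
        return Routing(action, "auto-execute")
    return Routing(action, "escalate", review_due=now + REVIEW_SLA[risk])


now = datetime(2025, 6, 2, 9, 0)
print(route("send FAQ link", "low", True, now).decision)  # auto-execute
print(route("issue $900 refund", "high", True, now).review_due)  # 2025-06-02 13:00:00
```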

To keep oversight efficient, organizations can:

- Use structured rubrics or "cookbooks" with specific criteria and red flags.

- Periodically test systems with intentional errors to ensure vigilance.

- Design AI to provide summaries of supporting and contradicting evidence, helping reviewers make quicker, informed decisions.
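Testing vigilance with intentional errors only works if reviewers cannot tell seeded items from real ones. In this sketch, hypothetical `build_queue` shuffles deliberately wrong outputs into a review queue while the test harness keeps the "seeded" marker to itself, and `seeded_catch_rate` reports how many of the planted errors the reviewer flagged.

```python
import random


def build_queue(real_items: list[str], seeded_errors: list[str],
                seed: int = 0) -> list[tuple[str, bool]]:
    """Mix deliberately wrong outputs into a queue; True marks a seeded item."""
    queue = [(item, False) for item in real_items] + [(item, True) for item in seeded_errors]
    random.Random(seed).shuffle(queue)  # reviewers see items in random order
    return queue


def seeded_catch_rate(queue: list[tuple[str, bool]], flagged: set[str]) -> float:
    """Share of seeded errors the reviewer flagged."""
    seeded = [item for item, is_seeded in queue if is_seeded]
    if not seeded:
        raise ValueError("queue contains no seeded errors")
    return sum(item in flagged for item in seeded) / len(seeded)


queue = build_queue(["ok-1", "ok-2", "ok-3"], ["bad-refund-amount", "wrong-customer-name"])
reviewer_flags = {"bad-refund-amount"}  # what the reviewer actually flagged
print(seeded_catch_rate(queue, reviewer_flags))  # 0.5
```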

It's also essential to remove barriers that discourage escalation. Reviewers should not face penalties for slowing processes when identifying potential errors.

As The Pedowitz Group explains:

Oversight = clear ownership + controllable autonomy + complete observability.

Conclusion and Key Takeaways

Human oversight is the cornerstone of managing AI risks effectively. As a senior compliance leader aptly put it, "Ultimately, it is the human beings who must be accountable. You can't outsource accountability." The strategies, frameworks, and models discussed throughout this guide all emphasize one crucial principle: AI systems must incorporate human judgment as a core element, not as an afterthought.

To make oversight effective, it’s important to empower reviewers with the authority, expertise, and independence to challenge AI outputs. The level of oversight should also match the level of risk - high-stakes decisions demand thorough human involvement, while lower-risk tasks can rely on automated systems complemented by periodic human audits.

Final Thoughts on Human Oversight

As previously discussed, successful oversight depends on three critical factors: clear ownership, controllable autonomy, and complete observability. Clear ownership ensures accountability, while controlled autonomy prevents premature deployment of systems that aren't fully vetted. Observability - through audit logs and performance metrics - ensures potential failures are detected before they cause harm.

The adoption of Human-on-the-Loop (HOTL) models reflects the practical need to balance efficiency with control. While it may not be feasible for humans to review every decision in high-volume scenarios, they can monitor patterns and intervene when needed.

Next Steps for Implementation

To strengthen your oversight framework, start with these actionable steps:

- Assign clear ownership: Designate one accountable individual for each AI workflow.

- Implement technical controls: Use kill-switch procedures, maintain detailed audit trails, and develop scorecards that combine performance metrics with safety measures.

- Establish review processes: During scaling phases, conduct weekly oversight reviews, transitioning to monthly as systems stabilize. Aim for a 95% adherence rate to these reviews.

- Train oversight teams: Educate teams on recognizing automation bias and understanding the system’s specific limitations. Incorporate periodic "mystery shopping" exercises to test the rigor of evaluations.

- Document human interventions: Record instances where human reviewers override AI outputs, including the reasoning behind these decisions. This feedback loop not only enhances the oversight process but also helps refine the AI system itself.

For organizations looking to establish strong AI governance, NAITIVE AI Consulting Agency offers tailored services to design oversight frameworks, implement technical safeguards, and train teams in managing AI risks effectively. Learn more at https://naitive.cloud.

FAQs

How do I choose between HITL, HOTL, and audit-only oversight?

When deciding on the oversight model for your AI system, the choice between Human-in-the-Loop (HITL), Human-on-the-Loop (HOTL), and audit-only oversight hinges on the system's risk level and operational demands.

- HITL: This approach requires humans to make decisions in real time, making it the best fit for high-stakes scenarios where immediate human judgment is crucial.

- HOTL: Here, humans monitor the system passively and intervene only when necessary. It strikes a balance between efficiency and maintaining safety.

- Audit-only: This model involves reviewing decisions after they’ve been made, suitable for systems operating in low-risk environments.

The right choice depends on your system's risk profile, regulatory requirements, and operational capabilities.

What does the EU AI Act require for human oversight in high-risk AI?

The EU AI Act requires high-risk AI systems to be designed so that people can oversee them effectively, with the goal of preventing or minimizing risks to health, safety, or fundamental rights. Oversight measures must be proportionate to the system's risks, level of autonomy, and context of use. They can be built into the system by the provider before it is placed on the market, or identified by the provider and implemented by the deployer. Either way, the people assigned to oversight must be able to step in and take control when needed.

What metrics show our human oversight is actually working?

When evaluating human oversight in AI systems, several metrics can provide valuable insights:

- Accuracy of interventions: This measures how effectively humans correct or guide AI outputs, ensuring the system operates as intended.

- Time-to-decision (TTD): Tracks how quickly humans respond to and address AI-generated outputs, highlighting efficiency in oversight.

- False positive/negative rates: These indicate the frequency of errors, such as incorrect approvals or rejections, within the oversight process.

- Coverage ratio: Represents the proportion of AI decisions that are reviewed by humans, offering a sense of how thorough the oversight is.

- Reviewer Load Index (RLI): Helps monitor the workload of reviewers, ensuring they aren't overwhelmed, which could compromise decision quality.
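These metrics can be computed from a single log of decisions. The sketch below is illustrative: the `Decision` fields are assumptions, "ground truth" is assumed to come from a later audit, false positives and negatives are framed as false approvals and false rejections, and the Reviewer Load Index formula (reviewed items divided by reviewer capacity) is a hypothetical definition, since the article does not define one.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class Decision:
    reviewed: bool
    seconds_to_decision: Optional[float]  # None when no human reviewed it
    human_approved: Optional[bool]  # reviewer's call; None when unreviewed
    actually_correct: bool  # ground truth, e.g. from a later audit


def _rate(numerator: int, denominator: int) -> float:
    return numerator / denominator if denominator else 0.0


def oversight_metrics(decisions: list[Decision], reviewer_capacity: int) -> dict[str, float]:
    reviewed = [d for d in decisions if d.reviewed]
    wrong = [d for d in reviewed if not d.actually_correct]
    right = [d for d in reviewed if d.actually_correct]
    times = [d.seconds_to_decision for d in reviewed if d.seconds_to_decision is not None]
    return {
        "coverage_ratio": _rate(len(reviewed), len(decisions)),
        "mean_ttd_seconds": mean(times) if times else 0.0,
        "intervention_accuracy": _rate(
            sum(d.human_approved == d.actually_correct for d in reviewed), len(reviewed)
        ),
        # False approval: reviewer approved an output the audit found wrong.
        "false_approval_rate": _rate(sum(d.human_approved is True for d in wrong), len(wrong)),
        # False rejection: reviewer rejected an output the audit found correct.
        "false_rejection_rate": _rate(sum(d.human_approved is False for d in right), len(right)),
        # Hypothetical RLI: reviewed items relative to reviewer capacity.
        "reviewer_load_index": _rate(len(reviewed), reviewer_capacity),
    }


decisions = [
    Decision(True, 30.0, True, True),  # correct approval
    Decision(True, 90.0, False, False),  # correct rejection
    Decision(True, 45.0, True, False),  # false approval
    Decision(False, None, None, True),  # not reviewed
]
m = oversight_metrics(decisions, reviewer_capacity=4)
print(m["coverage_ratio"], m["false_approval_rate"])  # 0.75 0.5
```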

By analyzing these metrics, organizations can better understand the effectiveness of their oversight processes and manage associated risks more effectively.