How AI Middleware Simplifies Legacy Integration

Connect legacy mainframes to modern apps with AI middleware, using APIs to cut integration costs, reduce errors, and speed deployment.

Legacy systems are still the backbone of many businesses, but they struggle to keep up with modern demands. AI middleware offers a practical solution by acting as a bridge between outdated systems and modern applications. Instead of replacing costly, stable infrastructure, middleware wraps legacy systems in modern interfaces like APIs, enabling seamless communication and real-time data processing.

Key points:

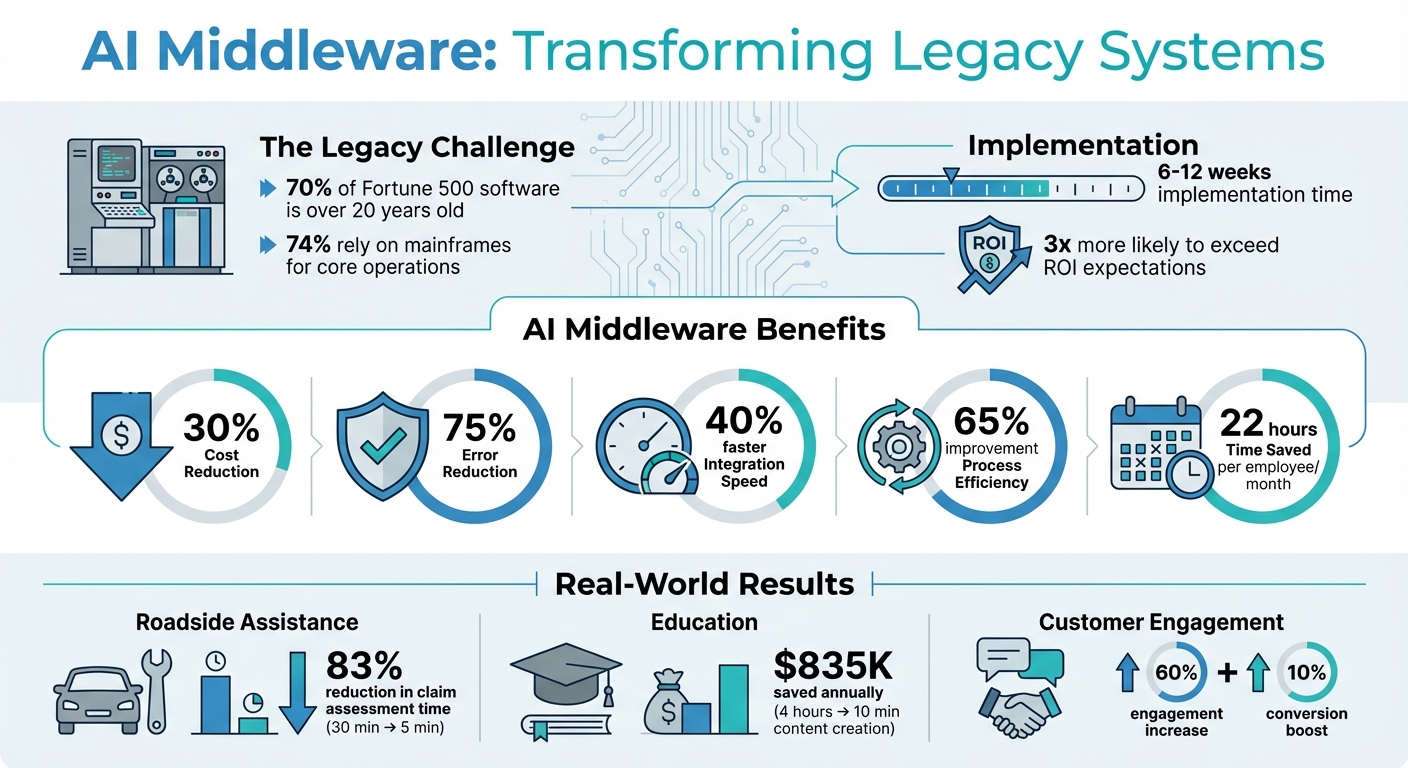

- 70% of Fortune 500 software is over 20 years old, and 74% of Fortune 500 companies rely on mainframes for core operations.

- Replacing these systems is risky and expensive, especially in industries like banking and healthcare.

- AI middleware reduces integration costs by 30%, cuts errors by 75%, and enhances process efficiency by 65%.

- Implementation is quick (6–12 weeks) and minimizes disruption.

Figure: AI Middleware Benefits: Cost Reduction and Efficiency Gains for Legacy System Integration

What AI Middleware Does for Legacy Integration

Defining AI Middleware

AI middleware acts as a kind of "intelligent translator" that bridges the gap between older legacy systems and modern applications. Think of it as a tool that not only enables these systems to communicate but also enhances the process by automating workflows, identifying anomalies, and delivering predictive insights using data from various sources.

Its design is built on four key layers: data connectors that standardize inputs, an integration framework to manage data flow, security and access controls, and resource management. This modular setup means you can update or replace individual parts without overhauling your entire legacy system. Essentially, it allows your infrastructure to adapt and grow with your needs.

Main Advantages of AI Middleware

The benefits of AI middleware are hard to ignore. It can reduce integration costs by 30%, cut processing errors by 75%, speed up business integration by 40%, and improve overall process efficiency by 65%. On top of that, automating workflows can save employees about 22 hours each month.

"AI middleware connects siloed systems together and adds additional intelligence. In the process of building total organizational intelligence, legacy software and platforms can be integrated, rather than building everything from scratch."

- Jacob Andra, CEO, Talbot West

Implementation is also quick, usually taking just 6 to 12 weeks, which minimizes disruption while delivering tangible results fast. Companies that use AI middleware strategically are nearly three times more likely to exceed their ROI expectations. Together, these benefits make it a practical option for businesses looking to modernize without starting from scratch.

Real Applications of AI Middleware

The real-world impact of AI middleware is evident across various industries:

- Roadside Assistance: ARC Europe used a GPT-powered AI agent linked to its legacy insurance systems. This reduced claim assessment times from 30 minutes to just 5 minutes - an impressive 83% decrease.

- Education: NewGlobe integrated Generative AI with teacher guide templates and spreadsheets via APIs. This cut content creation time from 4 hours to just 10 minutes, saving an estimated $835,000 annually.

- Customer Engagement: Newzip developed API interfaces connecting AI systems to customer data. This enabled hyper-personalized interactions, resulting in a 60% increase in user engagement and a 10% boost in conversion rates.

Other industries are also reaping the benefits. In healthcare, AI middleware helps integrate patient databases with diagnostic tools while ensuring HIPAA compliance. Meanwhile, in manufacturing, IoT sensor data is fed into predictive maintenance systems to prevent downtime. These examples highlight how AI middleware is transforming operations across diverse sectors.

Evaluating Your Legacy Systems for AI Middleware

Checking System Compatibility

Start by identifying the limitations of your current systems that could hinder integration efforts. You're not alone in facing this challenge: approximately 74% of Fortune 500 companies still rely on legacy mainframe systems for core operations.

Determine whether your systems are monolithic or modular. Monolithic systems often require middleware encapsulation to function effectively with AI. Next, check for data standardization needs. If your data is stored in proprietary or non-standard formats, you'll need to create standardized schemas to enable smooth AI middleware integration. Similarly, systems without modern RESTful APIs - especially those dependent on outdated methods like screen scraping - pose additional complexity and require extra planning.

Evaluate your systems’ processing capabilities. Legacy setups based on batch processing aren't built to handle the real-time data flows AI demands. Also, assess security readiness. Ensure your systems support modern encryption methods, multi-factor authentication, and compliance with standards like GDPR, HIPAA, or SOX. Lastly, consider hardware limitations. Older systems with restricted memory might need lightweight middleware wrappers to avoid overloading.

Once you’ve mapped out system compatibility, focus on prioritizing which systems to address first based on their value and complexity.

Prioritizing Systems by Value and Complexity

Not all legacy systems are created equal, and not all deserve the same level of attention. Start by developing a business-criticality matrix to weigh each system’s importance against the challenges of integration. Prioritize systems that create operational bottlenecks or house siloed data that could significantly improve decision-making across your organization.

Take inspiration from real-world examples. For instance, TechnoFab Industries integrated machine learning into its legacy ERP system across 12 factories, leading to a 75% reduction in equipment downtime and a 30% decrease in maintenance costs. Similarly, GE Industrial adopted a phased approach, connecting decades-old machinery to cloud analytics one category at a time. This strategy ultimately reduced maintenance costs by 25% across their operations.

Focus on high-impact, low-risk pilots to build confidence. Keep in mind that systems with incomplete documentation or those where key personnel with legacy knowledge have retired can be more challenging to address. These institutional knowledge gaps should factor into your prioritization process.
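
One way to make the business-criticality matrix concrete is a simple scoring sheet. The sketch below is illustrative only: the `LegacySystem` fields, the 1 to 5 scales, the scoring formula, and the three example systems are all assumptions, not a standard method. It divides business value by integration complexity plus a penalty for missing documentation or retired experts, so high-impact, low-risk systems rise to the top.

```python
from dataclasses import dataclass


@dataclass
class LegacySystem:
    name: str
    business_value: int          # 1 (low) to 5 (high): bottleneck relief, siloed data unlocked
    integration_complexity: int  # 1 (easy) to 5 (hard): APIs, data formats, batch vs. real time
    knowledge_gap: int           # 0 (well documented) to 3 (no docs, experts retired)


def priority_score(system: LegacySystem) -> float:
    """Higher is a better pilot candidate: high value, low effective complexity."""
    effective_complexity = system.integration_complexity + system.knowledge_gap
    return system.business_value / effective_complexity


def rank_for_pilot(systems):
    """Return systems ordered from best to worst pilot candidate."""
    return sorted(systems, key=priority_score, reverse=True)


# Hypothetical inventory; replace with scores from your own assessment.
systems = [
    LegacySystem("claims-mainframe", business_value=5, integration_complexity=4, knowledge_gap=2),
    LegacySystem("inventory-db", business_value=4, integration_complexity=2, knowledge_gap=0),
    LegacySystem("hr-batch-reports", business_value=2, integration_complexity=1, knowledge_gap=1),
]

ranked = rank_for_pilot(systems)
for s in ranked:
    print(f"{s.name}: {priority_score(s):.2f}")
```

In this made-up inventory, the most valuable system is not the first pilot, because its complexity and knowledge gaps drag its score down.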

With priorities in place, the next step is to tackle data integrity and conversion challenges.

Finding Data Issues and Conversion Requirements

Data quality is often a stumbling block for AI projects, delaying 80% of them. Start with a comprehensive data audit to identify inconsistencies and remove unnecessary information, ensuring a smoother integration process. Legacy systems often use proprietary formats and batch processing, which clash with the real-time, bidirectional data flows AI requires.

"Legacy systems were designed in an era before massive data throughput and real-time processing were standard requirements." - Modernize.io

Map out system dependencies to uncover potential data flow barriers. Before allowing AI to directly interact with legacy systems, create a read-only layer that provides sanitized data context. This allows you to validate AI-generated decisions against historical outcomes in a "shadow mode", minimizing risks to data integrity. To further streamline integration, implement canonical data models in your middleware. These models help normalize conflicting identifiers and inconsistent taxonomies across your systems.
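
A canonical data model can be sketched as one shared record type plus a small adapter per source system. Everything specific here is hypothetical: the field names (`CUSTNO`, `CUSTNAME`, `OPENDT`), the `C-` prefix on CRM identifiers, and the date formats are invented for illustration. The point is only that two systems with conflicting identifiers and formats end up as the same canonical record.

```python
from dataclasses import dataclass
from datetime import date, datetime


@dataclass(frozen=True)
class CanonicalCustomer:
    customer_id: str
    full_name: str
    signup_date: date


def from_mainframe(record: dict) -> CanonicalCustomer:
    # Assumed mainframe export: zero-padded id, "LAST, FIRST" name, YYYYMMDD date.
    last, first = record["CUSTNAME"].split(",")
    return CanonicalCustomer(
        customer_id=record["CUSTNO"].lstrip("0"),
        full_name=f"{first.strip().title()} {last.strip().title()}",
        signup_date=datetime.strptime(record["OPENDT"], "%Y%m%d").date(),
    )


def from_crm(record: dict) -> CanonicalCustomer:
    # Assumed CRM export: "C-<id>" identifier, display name, ISO 8601 date.
    return CanonicalCustomer(
        customer_id=record["id"].split("-", 1)[1],
        full_name=record["name"],
        signup_date=date.fromisoformat(record["created"]),
    )


mainframe_row = {"CUSTNO": "0001042", "CUSTNAME": "DOE, JANE", "OPENDT": "20190315"}
crm_row = {"id": "C-1042", "name": "Jane Doe", "created": "2019-03-15"}

# Both sources now describe the same customer in the same shape.
print(from_mainframe(mainframe_row) == from_crm(crm_row))
```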

How to Implement AI Middleware for Legacy Integration

Once you've assessed your legacy systems, it's time to move forward with middleware strategies that allow seamless integration without disrupting your existing infrastructure.

Choosing the Right Middleware Technology

Middleware serves as the bridge between your legacy systems and modern AI, ensuring smooth communication without upheaval. The key is to select middleware that aligns with your system’s requirements, including risk tolerance and latency. For initial pilots, consider non-invasive middleware that interacts with your legacy system by polling data without altering its original code. For more complex environments requiring standardized interactions, an API facade can wrap your legacy logic into modern endpoints, ensuring secure and consistent communication across platforms.

Your middleware should support modern protocols like REST or MQTT while staying compatible with emerging frameworks such as the Model Context Protocol (MCP). As Maria Paktiti from WorkOS explains:

"Rather than treating every integration as a one-off, MCP introduces a standardized, client-server architecture... It's the difference between writing a custom driver for every hardware device and simply using USB".

This approach avoids the messy "spaghetti" integrations that often result from custom solutions.

Security is a must-have. Look for middleware with features like role-based access control (RBAC), in-transit encryption, and automated audit trails that comply with regulations such as GDPR, HIPAA, and SOX. To handle system failures gracefully, include circuit breakers to prevent cascading issues, and ensure the middleware is lightweight enough to function within the memory limitations of older hardware.
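
The circuit breaker mentioned above can be sketched in a few lines. This is a minimal, single-threaded illustration, not a production implementation: the class name, the `max_failures` and `reset_after` parameters, and the injectable `clock` are assumptions made for the example. After repeated failures it stops calling the legacy backend and fails fast, then allows a single trial call once the cool-down has passed.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when the breaker refuses to call the backend."""


class CircuitBreaker:
    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after  # cool-down in seconds
        self._clock = clock
        self._failures = 0
        self._opened_at = None  # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_after:
                raise CircuitOpenError("legacy backend unavailable; failing fast")
            # Cool-down elapsed: allow one trial call. A single further
            # failure reopens the circuit immediately.
            self._opened_at = None
            self._failures = self.max_failures - 1
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            if self._failures >= self.max_failures:
                self._opened_at = self._clock()
            raise
        self._failures = 0  # any success resets the failure count
        return result
```

Wrapping each legacy call as `breaker.call(fetch_record, key)` (where `fetch_record` is whatever function reaches your backend) keeps a struggling mainframe from being hammered by retries.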

Creating Data Pipelines and APIs

An API-first strategy simplifies data flow between systems, turning your middleware into an effective translator. Unlike a complete system overhaul, this approach typically takes just 6–12 weeks to implement. The goal is to encapsulate legacy functionality within a controlled API layer, commonly called the facade pattern.
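
As a rough sketch of that encapsulation, the class below hides a fixed-width legacy record behind a method that returns a clean dictionary, ready to be serialized by whatever web framework fronts it. The record layout, the `legacy_account_lookup` stand-in, and the field names are all hypothetical; a real facade would call your actual legacy transaction instead.

```python
def legacy_account_lookup(account_no: str) -> str:
    """Stand-in for a legacy call that returns a fixed-width record.

    Assumed layout: cols 0-9 account number, 10-29 holder name,
    30-41 balance in cents (zero padded).
    """
    return f"{account_no:<10}{'JANE DOE':<20}{'000000123456':>12}"


class AccountFacade:
    """Modern-looking interface over the legacy lookup."""

    def __init__(self, backend=legacy_account_lookup):
        self._backend = backend

    def get_account(self, account_no: str) -> dict:
        raw = self._backend(account_no)
        # Callers never see the fixed-width format, only named fields.
        return {
            "account_no": raw[0:10].strip(),
            "holder": raw[10:30].strip().title(),
            "balance_cents": int(raw[30:42]),
        }


facade = AccountFacade()
print(facade.get_account("12345"))
```

Because callers depend only on `get_account`, the backend can later be swapped for a different system without changing any consumer.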

For systems lacking full API support, you can set up a GET-based Data Query Service to enable data retrieval. When building data pipelines, use asynchronous connection pooling to avoid blocking operations and ensure scalability for concurrent tasks. Message brokers can also help by managing data flow, allowing systems to publish events while downstream components subscribe only to the data they need.
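
Asynchronous connection pooling can be illustrated with the standard library alone. In this sketch the "connections" are just strings and the query is simulated with `asyncio.sleep`; the class and function names are assumptions for the example. Five concurrent queries share two connections, and tasks wait without blocking the event loop when the pool is empty.

```python
import asyncio
from contextlib import asynccontextmanager


class ConnectionPool:
    """Fixed-size pool; acquire() waits (without blocking) when all are in use."""

    def __init__(self, size: int):
        self._idle = asyncio.Queue()
        for i in range(size):
            self._idle.put_nowait(f"legacy-conn-{i}")  # placeholder for a real connection

    @asynccontextmanager
    async def acquire(self):
        conn = await self._idle.get()
        try:
            yield conn
        finally:
            self._idle.put_nowait(conn)  # always return the connection to the pool


async def query(pool: ConnectionPool, key: str):
    async with pool.acquire() as conn:
        await asyncio.sleep(0.01)  # stand-in for network I/O to the legacy system
        return key, conn


async def main():
    pool = ConnectionPool(size=2)
    return await asyncio.gather(*(query(pool, k) for k in ["a", "b", "c", "d", "e"]))


results = asyncio.run(main())
print(results)
```

Capping the pool size also protects older hardware from more concurrent sessions than it can handle.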

Standardized API interfaces keep data flowing smoothly without disrupting existing operations. Make sure that write operations are idempotent, so retries don’t result in duplicate entries in your legacy database. Additionally, integrate automated data cleansing into your middleware to standardize formats and address missing values before the data reaches your AI models.
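
One common way to make writes idempotent is to attach a caller-supplied idempotency key to each request and let a uniqueness constraint reject repeats. The sketch below uses an in-memory SQLite database as a stand-in for the legacy store; the table, column names, and key format are hypothetical.

```python
import sqlite3


def make_store() -> sqlite3.Connection:
    db = sqlite3.connect(":memory:")  # stand-in for the legacy database
    db.execute(
        "CREATE TABLE payments ("
        "idempotency_key TEXT PRIMARY KEY, account TEXT, amount_cents INTEGER)"
    )
    return db


def record_payment(db, idempotency_key: str, account: str, amount_cents: int) -> bool:
    """Write the payment once. Returns True for a new row, False for a retry."""
    cur = db.execute(
        "INSERT OR IGNORE INTO payments VALUES (?, ?, ?)",
        (idempotency_key, account, amount_cents),
    )
    db.commit()
    return cur.rowcount == 1


db = make_store()
first = record_payment(db, "req-7f3a", "ACC-1", 2500)
retry = record_payment(db, "req-7f3a", "ACC-1", 2500)  # e.g. after a timeout
row_count = db.execute("SELECT COUNT(*) FROM payments").fetchone()[0]
print(first, retry, row_count)
```

The retry is accepted by the API but leaves the table unchanged, which is exactly what a client retrying after a timeout needs.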

Once your data pipelines and APIs are ready, it’s time to move into rigorous testing and phased deployment.

Testing and Deployment Methods

A phased deployment minimizes risks and ensures a smooth transition. Start with shadow mode, where the AI processes real production inputs but logs outputs for evaluation without affecting live data. This allows you to measure accuracy against historical outcomes without any operational impact.
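
Shadow mode can be sketched as a handler that always returns the legacy decision while recording what the AI would have done. The decision rules and claim data below are invented stand-ins; in practice `ai_decision` would call a model and `shadow_log` would be durable storage.

```python
def legacy_decision(claim: dict) -> str:
    # Stand-in for the existing production rule.
    return "approve" if claim["amount"] <= 1000 else "review"


def ai_decision(claim: dict) -> str:
    # Hypothetical model output; a real system would call the AI service here.
    return "approve" if claim["amount"] <= 1200 else "review"


shadow_log = []


def handle_claim(claim: dict) -> str:
    live = legacy_decision(claim)
    try:
        shadow = ai_decision(claim)
    except Exception as exc:  # a shadow failure must never affect the live path
        shadow = f"error: {exc}"
    shadow_log.append(
        {"claim_id": claim["id"], "live": live, "shadow": shadow, "match": live == shadow}
    )
    return live  # only the legacy decision takes effect


def agreement_rate(log) -> float:
    return sum(entry["match"] for entry in log) / len(log)


claims = [{"id": 1, "amount": 500}, {"id": 2, "amount": 1100}, {"id": 3, "amount": 5000}]
outcomes = [handle_claim(c) for c in claims]
print(outcomes, f"agreement: {agreement_rate(shadow_log):.0%}")
```

Reviewing the disagreements in the log (claim 2 in this toy data) is usually more informative than the headline agreement rate.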

Create realistic test environments using anonymized production data to run contract and integration tests, which can help identify bottlenecks before going live. Studies show that organizations lacking proper orchestration face failure rates 3.2 times higher, while those with robust orchestration enjoy 47% faster processing and 42% lower operational costs.

When moving to live operations, begin with a canary rollout by directing a small percentage of traffic through the AI-enabled path. For critical decisions where the AI lacks confidence, use human-in-the-loop (HITL) intervention. Additionally, adopt the strangler fig pattern to gradually replace legacy components via API gateways, avoiding the risks of an all-at-once migration.
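
A canary rollout with a human-in-the-loop fallback might be routed as follows. This is a sketch under stated assumptions: the hash-based bucketing, the `percent` and `threshold` parameters, and the route labels are illustrative choices, not a prescribed design. Hashing the request ID keeps each request on the same path across retries.

```python
import hashlib


def in_canary(request_id: str, percent: int) -> bool:
    """Deterministically place roughly `percent`% of request IDs in the canary."""
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:2], "big") % 100
    return bucket < percent


def route(request_id: str, percent: int, ai_confidence: float, threshold: float = 0.8) -> str:
    if not in_canary(request_id, percent):
        return "legacy"        # most traffic stays on the existing path
    if ai_confidence < threshold:
        return "human_review"  # HITL for low-confidence decisions
    return "ai"


print(route("req-42", percent=100, ai_confidence=0.95))
print(route("req-42", percent=100, ai_confidence=0.40))
print(route("req-42", percent=0, ai_confidence=0.95))
```

Raising `percent` in small steps, and dropping it back to zero if error rates climb, gives a simple rollback lever during the rollout.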

To ensure smooth operations, include error-compensation mechanisms and distributed tracing to quickly identify and resolve issues. As Modernize.io puts it:

"Successful AI integration doesn't require throwing away your existing tech investments... It's like technological acupuncture, strategically applied in exactly the right places".

Managing, Maintaining, and Expanding AI Middleware

Keeping your AI middleware running smoothly and scaling effectively requires consistent oversight and a solid framework. By focusing on security, compliance, and scalability, you can maximize its value over time.

Setting Up Governance and Monitoring Systems

Creating an AI Center of Excellence (CoE) is an essential first step. This team, which includes representatives from legal, compliance, architecture, and operations, plays a key role in approving new use cases and setting clear guidelines. These guidelines help determine when AI systems can operate independently and when human oversight is required. Without such a structure, you risk exposing sensitive data or facing security vulnerabilities.

Governance should address four critical areas:

- Data Governance: Ensure compliance with regulations like GDPR and HIPAA.

- Agent Observability: Implement centralized logging and monitoring.

- Agent Security: Protect against threats like prompt injection attacks.

- Agent Development: Standardize the way new integrations are built.

Routing all AI traffic through a managed gateway helps enforce policies, track costs, and maintain consistent logs. Assigning unique identities to AI agents ensures accountability and prevents unauthorized deployments. Regular quarterly audits can identify unused agents that pose security risks or waste resources, allowing you to retire them systematically.

To stay on top of costs, set up real-time alerts for budget thresholds and use cost center tags to allocate expenses to specific departments. For testing new model versions, maintain "golden datasets" that mirror production patterns and serve as benchmarks.
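
Budget alerts keyed on cost center tags can be prototyped with a small aggregation. The usage records, agent names, budgets, and the 80% warning ratio below are all made up for illustration; a real setup would read these from your gateway's usage logs.

```python
from collections import defaultdict


def spend_by_cost_center(usage_records):
    """Sum spend (in cents) per cost center tag."""
    totals = defaultdict(int)
    for record in usage_records:
        totals[record["cost_center"]] += record["cost_cents"]
    return dict(totals)


def budget_alerts(totals, budgets, warn_ratio=0.8):
    """Return (cost_center, message) pairs for centers that need attention."""
    alerts = []
    for center in sorted(totals):
        spent = totals[center]
        budget = budgets.get(center)
        if budget is None:
            alerts.append((center, "no budget configured"))
        elif spent >= budget:
            alerts.append((center, "over budget"))
        elif spent >= warn_ratio * budget:
            alerts.append((center, "nearing budget"))
    return alerts


# Hypothetical month-to-date usage pulled from gateway logs.
usage = [
    {"agent": "claims-triage", "cost_center": "claims", "cost_cents": 42_000},
    {"agent": "claims-summary", "cost_center": "claims", "cost_cents": 45_000},
    {"agent": "invoice-match", "cost_center": "finance", "cost_cents": 20_000},
    {"agent": "untagged-bot", "cost_center": "unknown", "cost_cents": 500},
]
budgets = {"claims": 100_000, "finance": 50_000}

alerts = budget_alerts(spend_by_cost_center(usage), budgets)
print(alerts)
```

Flagging spend with no configured budget also surfaces untagged or unauthorized agents, which ties back to the quarterly audits described above.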

With these governance measures in place, your team will be better equipped to manage and optimize middleware performance.

Training Your Team on AI Middleware

Your team’s expertise is critical to getting the most out of AI middleware. Training should emphasize effective prompting techniques and teach staff to recognize the limitations of AI agents. This helps them understand when to trust automated decisions and when to step in. By involving your team in this way, they become active participants in improving middleware performance.

Modern tools have simplified tasks that once required specialized knowledge, enabling internal staff to fine-tune models for specific needs without needing advanced data science skills. Equip your operations team to use tools like Azure Log Analytics for interpreting centralized logs, investigating anomalies, and executing hot rollback plans when something goes wrong.

Once your team is confident in managing middleware, you can look to expand its integration across the organization.

Expanding Integration Across Your Organization

Start small by targeting high-impact, low-risk use cases within specific business units. This approach allows you to validate the value of AI middleware before scaling up. Standardize successful architectures and prompts into templates to ensure new integrations meet security and logging requirements from the start. By doing so, you can accelerate development while avoiding the technical debt that often comes with isolated, custom-built solutions.

A structured rollout timeline can help streamline this process. For example:

- Weeks 1-2: Discovery

- Weeks 3-4: Deployment

- Weeks 5-8: Tuning

- Weeks 9-12: Controlled pilots

To modernize legacy systems without disrupting existing operations, place an "anti-corruption layer" between AI agents and backend systems, built on a standardized protocol such as the Model Context Protocol (MCP). Because agents only ever talk to this layer, backend updates become more manageable.

As you scale, implement maker-checker loops, where one agent proposes an action and another reviews it before execution. This reduces errors in critical scenarios. Automated scans can also detect configuration drift and ensure compliance with evolving privacy and data residency regulations. Following this disciplined approach has allowed organizations to cut integration costs by 30% and reduce processing errors by 75%.
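
The maker-checker loop described above can be sketched with two plain functions standing in for the two agents. The `Proposal` fields, the refund scenario, and the auto-approval limit are hypothetical; in a real deployment both roles would typically be separate model calls or services with independent rules.

```python
from dataclasses import dataclass


@dataclass
class Proposal:
    action: str
    amount_cents: int
    rationale: str


def maker(claim: dict) -> Proposal:
    """Hypothetical proposing agent; in practice this would call a model."""
    return Proposal("refund", claim["amount_cents"], claim["reason"])


def checker(proposal: Proposal, limit_cents: int = 50_000):
    """Hypothetical reviewing agent; returns (approved, reason)."""
    if proposal.action != "refund":
        return False, "unsupported action"
    if proposal.amount_cents > limit_cents:
        return False, "amount exceeds auto-approval limit; escalate to a human"
    if not proposal.rationale.strip():
        return False, "missing rationale"
    return True, "approved"


def process(claim: dict, ledger: list):
    proposal = maker(claim)
    approved, reason = checker(proposal)
    if approved:
        ledger.append(proposal)  # stand-in for the write to the legacy system
    return approved, reason


ledger = []
small = process({"amount_cents": 12_500, "reason": "duplicate charge"}, ledger)
large = process({"amount_cents": 900_000, "reason": "duplicate charge"}, ledger)
print(small, large, len(ledger))
```

Nothing reaches the ledger unless the checker approves it, so a faulty proposal from the maker is caught before execution.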

Conclusion

AI middleware serves as a bridge between legacy systems and modern technology, ensuring that valuable, time-tested business logic isn't lost in the process. Rather than undertaking costly and risky system overhauls, it functions as a universal translator, enabling older mainframes to communicate effortlessly with modern cloud APIs. This is especially critical for organizations that still rely heavily on legacy mainframes for their core operations.

Adopting intelligent middleware has delivered measurable gains: the figures cited above include a 30% reduction in integration costs, 75% fewer processing errors, and a 65% improvement in process efficiency.

"Legacy systems are untapped opportunities for transformation." - Klover.ai

By taking a phased approach to integration - starting with a thorough system evaluation, introducing standardized APIs, and gradually scaling across the organization - businesses can modernize without disrupting their operations. Establishing robust governance frameworks, investing in team training, and implementing monitoring systems ensures a smooth transition and sets the stage for future growth.

AI middleware goes beyond simply linking old and new systems. It helps unlock hidden potential within existing infrastructure while creating a strong, adaptable foundation for future advancements - all without compromising the stability that businesses rely on to operate effectively.

FAQs

How does AI middleware improve the performance of legacy systems?

AI middleware bridges older systems and newer applications. By exposing legacy infrastructure to modern AI features, it improves compatibility, simplifies workflows, and avoids the need for expensive system replacements.

This technology also brings smart functionality to existing systems, making them easier to upgrade, more secure, and better equipped to handle changing business demands. The result? Quicker integration, better efficiency, and a system that's ready to adapt to the future.

Which industries benefit most from using AI middleware?

Industries that depend on outdated systems and need better integration, automation, and decision-making often see the most gains from AI middleware. Manufacturing, finance and banking, healthcare, and government stand out as prime examples.

Take manufacturing, for instance. By connecting sensors, edge devices, and automation systems, companies can enhance efficiency, cut costs, and create safer work environments. In finance, AI middleware helps modernize operations by enabling fraud detection and automating processes - all while working within existing infrastructure. Healthcare organizations, on the other hand, benefit by integrating AI into their older systems to simplify decision-making and improve operational workflows.

AI middleware reshapes how these industries function by enabling quicker data movement, smarter decision-making, and better system interoperability. This keeps businesses competitive and better equipped to adapt to changing demands.

What are the key steps to evaluate legacy systems for AI middleware integration?

To successfully integrate AI middleware into legacy systems, you’ll need to follow a few key steps. It all starts with a thorough system audit. This means evaluating your current setup to determine its readiness for AI. Pay close attention to areas like data quality, infrastructure, and how easily the system can handle integration. This will help you pinpoint any gaps or weaknesses that need fixing.

Once you’ve assessed the system, the next step is to prepare your data. Make sure your data is clean, accurate, consistent, and available in real time. This is crucial for seamless interaction between your legacy system and AI technologies. Without high-quality, accessible data, the integration process can hit major roadblocks.

Finally, you’ll need to select the right integration method. Options like APIs or middleware tools can bridge the gap between old and new systems. Keep in mind the specific quirks of your legacy system, such as outdated components or sparse documentation, as these will influence the complexity of the integration process. By addressing these steps, you can set the stage for a smooth and efficient AI middleware deployment.