Agentic AI Orchestration: Dynamic, Resource-Aware Guide

Discover how Flyte 2.0 approaches AI orchestration with dynamic workflows, scalable execution, and resource-aware tooling for modern AI challenges.

Artificial intelligence continues to reshape business and technology. As organizations adopt more AI-driven systems, the tools that orchestrate those systems matter more. In a recent presentation, Niels Bantilan, Chief Machine Learning (ML) Engineer at Union AI, introduced Flyte 2.0, a dynamic, resource-aware AI orchestration platform built to address the challenges of modern AI systems. This article covers the core concepts, challenges, and innovations discussed in the talk, and how businesses and technical teams can apply advanced orchestration in their AI initiatives.

The Evolution of Orchestration: From Static Pipelines to Dynamic Workflows

Orchestration has long played a critical role in data engineering and AI/ML workflows. Historically, orchestration tools were designed for static, predefined processes, but the rapid rise of large language models (LLMs), multimodal AI systems, and agent-based architectures has redefined what orchestration must achieve.

A Brief Timeline of Orchestration Tools:

- 2012: The focus was on Extract, Transform, Load (ETL) processes to manage "big data."

- 2014: Microservices became a trend, requiring tools to orchestrate independent services.

- 2017: Data and ML pipelines gained prominence, fueled by open-source contributions from companies like Lyft and Uber.

- 2020–2021: Managed orchestration solutions (e.g., MLOps platforms) emerged, offering enterprises scalable and user-friendly tools.

- 2025: The AI community now demands orchestration solutions capable of supporting dynamic agentic workflows, where workflows adapt in real time based on AI decision-making.

Flyte 2.0 reflects this evolution by addressing the limitations of static orchestration and emphasizing dynamism, flexibility, and fault tolerance.

Key Challenges in Modern AI Orchestration

Niels Bantilan's presentation highlighted six major pain points faced by AI/ML practitioners and businesses:

1. Steep Learning Curves for Orchestration Tools

Learning domain-specific languages (DSLs) or complex orchestration frameworks can be time-consuming, creating barriers for new adopters.

2. Costly Onboarding and Debugging

Onboarding new data scientists and engineers to orchestration platforms often requires significant time and resources. Debugging failures in distributed systems further adds to operational complexity.

3. Static Execution Limitations

Traditional orchestration tools rely on static Directed Acyclic Graphs (DAGs), which lack flexibility. They cannot dynamically adjust workflows in response to runtime conditions, such as resource constraints or new data inputs.

4. Inefficient Scaling and Parallelization

Scaling workflows across distributed environments is often difficult, particularly for tasks requiring parallel execution. Limitations in scalability hinder performance for workloads like training models or running complex AI agents.

5. Brittle Pipelines

Many pipelines fail to tolerate runtime errors. A single failure often necessitates restarting the entire workflow, wasting compute resources and time.

6. Implementation of Best Practices

Practices like versioning, model management, and deployment coordination are complex to implement consistently across teams, especially as workflows grow in complexity.

Flyte 2.0: A Breakthrough in AI Orchestration

Flyte 2.0 is a dynamic, resource-aware orchestration platform designed to overcome these challenges. Built around scalability, fault tolerance, and native Python support, it lets teams build and manage AI workflows as ordinary Python code rather than as static graph definitions.

Key Features of Flyte 2.0:

- Dynamic Workflow Execution: Supports workflows that adapt to runtime conditions by dynamically generating new tasks or modifying existing pipelines.

- Pythonic Design: Allows users to write orchestration logic using familiar Python constructs, including `async`/`await` and parallelized operations, with minimal setup.

- Fault Tolerance: Automatically detects and recovers from failures, enabling workflows to resume from the point of failure without restarting entirely.

- Resource Awareness: Enables developers to declare resource requirements (e.g., GPUs, memory) for each task, ensuring optimal allocation and utilization.

- Scalability: Supports parallel execution of tasks across cloud-based environments, enabling faster processing and more efficient use of compute resources.

- Observability and Debugging: Provides detailed insights into workflow execution, including error tracking, logs, and the ability to debug directly in production-like environments.
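To make the Pythonic Design and Resource Awareness items more concrete, here is a minimal sketch in plain Python. It is not the platform's API: the `Resources` dataclass, the `task` decorator, and the `preprocess` and `embed` functions are hypothetical names invented for illustration. The sketch only shows the general pattern of declaring per-task resource requests next to ordinary `async` functions, so that a scheduler could read them before placing the task.

```python
import asyncio
from dataclasses import dataclass
from functools import wraps


@dataclass(frozen=True)
class Resources:
    """Hypothetical per-task resource request (not a real SDK type)."""
    cpu: int = 1
    memory_gib: int = 1
    gpu: int = 0


def task(resources: Resources = Resources()):
    """Attach a resource request to an async function as metadata."""
    def decorator(fn):
        @wraps(fn)
        async def wrapper(*args, **kwargs):
            # A real orchestrator would schedule the call on a machine
            # that satisfies `resources`; here we simply run it locally.
            return await fn(*args, **kwargs)
        wrapper.resources = resources
        return wrapper
    return decorator


@task(resources=Resources(cpu=2, memory_gib=4))
async def preprocess(rows):
    return [r.strip().lower() for r in rows]


@task(resources=Resources(cpu=4, memory_gib=16, gpu=1))
async def embed(rows):
    # Stand-in for a GPU-bound model call: returns string lengths.
    return [len(r) for r in rows]


async def pipeline(rows):
    cleaned = await preprocess(rows)
    return await embed(cleaned)


result = asyncio.run(pipeline(["  Hello ", "World  "]))
print(result, embed.resources)
```

Keeping the request as metadata on the function, rather than in a separate configuration file, is one way to keep workflow logic and infrastructure needs in the same place.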

Practical Applications of Flyte 2.0

1. Dynamic Orchestration for AI Agents

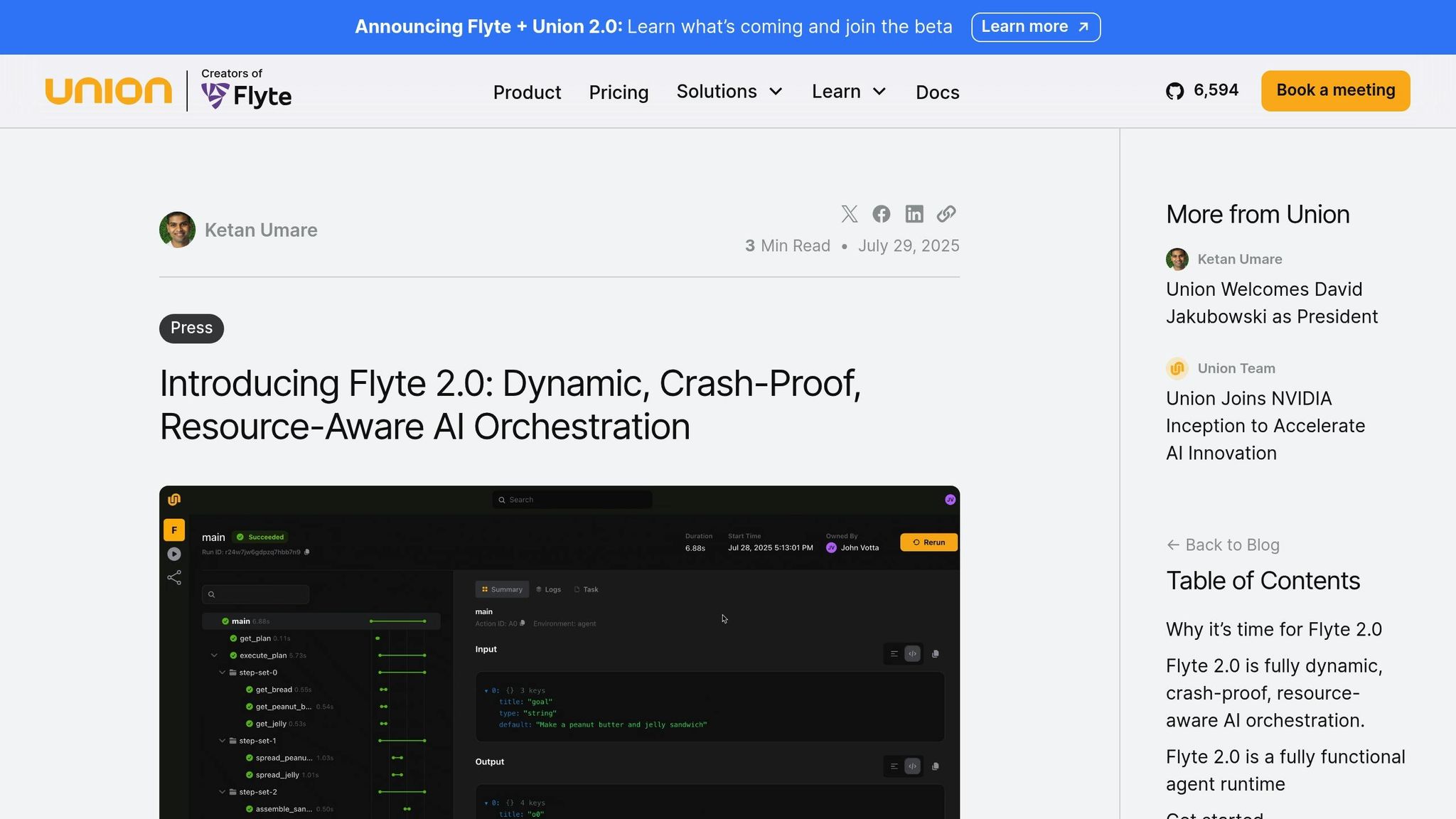

One of Flyte 2.0's standout features is its ability to adjust workflows dynamically, which suits agent-based architectures. For example, an LLM tasked with making a peanut butter and jelly sandwich might produce a plan with several independent steps: fetching bread, peanut butter, and jelly. Flyte 2.0 can run these steps in parallel rather than one after another, reducing total execution time.
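The sandwich example can be sketched with nothing more than Python's standard `asyncio` module. This is an illustration of the pattern, not the platform's own API: `plan_sandwich` is a hypothetical stand-in for an LLM planner, and `fetch` and `assemble` are invented helper names. The point is that the list of steps is only known at run time, and the independent steps are awaited together.

```python
import asyncio


async def fetch(item: str) -> str:
    await asyncio.sleep(0.01)  # stand-in for I/O such as an API call
    return f"{item} ready"


async def assemble(parts: list[str]) -> str:
    return "sandwich from: " + ", ".join(parts)


def plan_sandwich() -> list[str]:
    """Hypothetical planner; a real agent would ask an LLM at run time."""
    return ["bread", "peanut butter", "jelly"]


async def make_sandwich() -> str:
    steps = plan_sandwich()  # the number of tasks is not fixed in advance
    # Run all independent fetches concurrently; gather keeps input order.
    parts = await asyncio.gather(*(fetch(step) for step in steps))
    return await assemble(list(parts))


sandwich = asyncio.run(make_sandwich())
print(sandwich)
```

A static DAG would need the three fetch nodes declared ahead of time; here the fan-out width follows whatever the planner returns.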

2. Efficient Distributed Training

Flyte 2.0 simplifies distributed computing by allowing developers to partition data and train models across multiple machines. For instance, in a random forest training scenario, each decision tree can be trained on a separate data subset, and the trained trees are then combined into a single ensemble model.
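A toy version of this partition-train-combine pattern, using only the standard library, might look like the following. Several simplifications are assumptions made for brevity: each "tree" is a one-split decision stump on a single feature, the data is split into disjoint partitions rather than bootstrap samples, and a local `ThreadPoolExecutor` stands in for separate machines (Python threads do not speed up CPU-bound work, so this shows the structure, not the performance). The names `train_stump` and `predict` are hypothetical.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def train_stump(rows):
    """Fit a one-split 'tree' on (x, label) rows.

    Tries each observed x as a threshold and keeps the one with the
    fewest misclassifications under the rule: predict 1 if x >= threshold.
    """
    best = None
    for threshold, _ in rows:
        errors = sum((x >= threshold) != bool(label) for x, label in rows)
        if best is None or errors < best[0]:
            best = (errors, threshold)
    return best[1]


def predict(forest, x):
    """Combine the stumps by majority vote."""
    votes = Counter(int(x >= threshold) for threshold in forest)
    return votes.most_common(1)[0][0]


data = [(0.1, 0), (0.4, 0), (0.35, 0), (0.8, 1), (0.9, 1), (0.6, 1),
        (0.2, 0), (0.7, 1), (0.3, 0), (0.95, 1), (0.15, 0), (0.65, 1)]

# One disjoint partition per worker (an odd count avoids tied votes).
partitions = [data[i::3] for i in range(3)]

with ThreadPoolExecutor(max_workers=3) as pool:
    forest = list(pool.map(train_stump, partitions))

print(forest, predict(forest, 0.9), predict(forest, 0.2))
```

In an orchestrated setting, each `train_stump` call would be a separate task with its own resource request, and the combine step would run once all of them finish.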

3. Resilient Pipelines with Error Recovery

Unlike brittle pipelines that fail entirely upon encountering an error, Flyte 2.0 can recover from failures mid-execution. For example, if a task runs out of memory, Flyte 2.0 retries the task with increased memory allocation, so the pipeline continues without manual intervention.
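The retry-with-more-memory behavior can be illustrated with a small simulation. This is a sketch under stated assumptions, not the platform's mechanism: `build_index` is a hypothetical task that pretends to need 1 GiB per 1,000 items, `run_with_memory_backoff` is an invented helper, and the out-of-memory condition is modeled as a Python `MemoryError`, whereas a real cluster would typically report it as a killed container.

```python
import asyncio


async def build_index(n_items: int, memory_gib: int) -> str:
    """Simulated task: pretends to need 1 GiB per 1000 items."""
    needed = n_items / 1000
    if needed > memory_gib:
        raise MemoryError(f"needs {needed} GiB, has {memory_gib} GiB")
    return f"indexed {n_items} items with {memory_gib} GiB"


async def run_with_memory_backoff(task, *args, memory_gib=1, factor=2,
                                  max_attempts=4):
    """Retry `task`, multiplying its memory request after each OOM."""
    attempts = []
    for _ in range(max_attempts):
        attempts.append(memory_gib)
        try:
            return await task(*args, memory_gib=memory_gib), attempts
        except MemoryError:
            memory_gib *= factor  # ask for more memory on the next try
    raise RuntimeError(f"still out of memory after tries at {attempts} GiB")


result, attempts = asyncio.run(run_with_memory_backoff(build_index, 3500))
print(result, attempts)
```

Capping the number of attempts matters: without `max_attempts`, a task with a genuine memory leak would keep requesting larger machines indefinitely.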

A Vision for the Future: Flyte 2.0's Roadmap

Beyond the current feature set, the Flyte 2.0 roadmap presented in the talk includes:

- Rust Core: A high-performance engine for faster execution.

- Distributed Training Plugins: Support for advanced ML scenarios.

- Local Sandbox: A Kubernetes-like environment that allows users to test workflows locally.

- Full Open Source Release: Making the platform universally accessible for developers and enterprises.

- Enterprise-grade Deployment: Compatibility with major cloud providers (AWS, Azure, GCP).

These roadmap items target teams that need to run AI workloads at scale across local, cloud, and enterprise environments.

Key Takeaways

- Dynamic Orchestration Redefined: Flyte 2.0 introduces a dynamic, resource-aware approach to AI workflow management, replacing static DAGs with adaptive pipelines.

- Pythonic Simplicity: Familiar Python constructs, including `async`/`await` and decorators, make Flyte 2.0 accessible to developers.

- Fault Tolerance as a Core Principle: The platform automatically recovers from runtime errors, improving reliability and reducing downtime.

- Scalability and Resource Awareness: Flyte 2.0 optimizes distributed execution and allows declarative resource allocation for tasks, such as requesting GPUs or increasing memory.

- Agent-ready Infrastructure: Built to support the dynamism of agent-based architectures, enabling real-time decision-making workflows.

- Observability and Debugging: Provides intuitive tools for tracking, logging, and debugging, ensuring transparent and efficient development.

For business leaders, Flyte 2.0 offers a way to bridge the gap between experimental AI research and large-scale production systems. For AI enthusiasts, it provides a framework for exploring the next generation of AI orchestration.

Conclusion

The next era of AI demands orchestration tools that are not only flexible but also resilient, efficient, and resource-aware. Flyte 2.0 exemplifies this shift, enabling organizations to scale their AI initiatives while accommodating the dynamism of modern workflows. Whether you're deploying AI agents, training models, or orchestrating complex workflows, Flyte 2.0 offers a foundation to build on. By addressing the pain points of traditional orchestrators, it aims to set a new standard for AI orchestration in 2025 and beyond.

Source: "Why Agentic AI Requires A New Approach to Orchestration - Niels Bantilan" - Toronto Machine Learning Society (TMLS), YouTube, Sep 29, 2025 - https://www.youtube.com/watch?v=u4lAQ5TeuN8